The EU's AI Act Falls Short. Heads Up to the Rest of the World

The European Union Artificial Intelligence Act of 2024 (the Act) leaves gaping holes in its treatment of AI policies and legislative frameworks. First conceptualized in 2021 and finally enacted in 2024, the Act has attracted commentary from all sectors with a stake in the current and future development and deployment of AI. Viewed in binary terms, the Act is either too little too late or too much too soon.

The Act is a bold attempt to legislate AI, but it may cause member states and others to fall further behind in AI innovation. The EU risks losing ground to non-regulated countries, including the United States, China, and Russia. In Silicon Valley, new technology firms are opening for business at a rate not seen since the first Internet boom of the late 1990s. Tech firms engaged in AI projects receive much attention from the press, risk capital providers, and established corporations seeking growth through innovation.

In the U.S., the California State Legislature passed an AI safety bill, SB 1047, in 2024. The bill targeted larger AI models and the companies developing and deploying them, such as the generative AI models from OpenAI, Google, Microsoft, and others.

California Governor Gavin Newsom vetoed the bill and stated, “Smaller, specialized [AI] models may emerge as equally or even more dangerous than the models targeted by SB 1047 — at the potential expense of curtailing the very innovation that fuels advancement in favor of the public good.”

Responding to Newsom’s veto, State Senator Scott Wiener, the author of SB 1047, said, “This veto leaves us with the troubling reality that companies aiming to create an extremely powerful technology face no binding restrictions from U.S. policymakers, particularly given Congress’s continuing paralysis around regulating the tech industry in any meaningful way.”

Unpacking the Artificial Intelligence Lifecycle

Dozens, if not hundreds, of news articles and opinion pieces emerged before, during, and after the EU Artificial Intelligence Act was rolled out to member states in 2024. The world does not need another summary of the Act’s timing, intent, and reach. However, readers need to know that the Act’s scope includes only three of five essential stages in the AI lifecycle.

The AI lifecycle can be unpacked into five stages: (1) basic theory, (2) architecture design, (3) data, (4) algorithmic coding, and (5) usage. The scope of the Act includes only stages three, four, and five. These three stages are the most publicly visible to constituents and governing bodies.

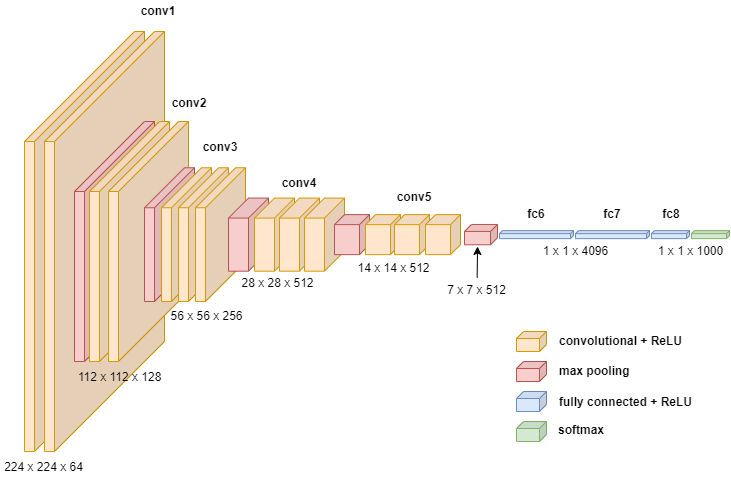

The European Commission has missed the first two hyper-critical stages: (1) the formulation of basic theory and (2) the design of overarching architectures for AI solutions and apps. For example, the basic theory of generative artificial intelligence rests on the mathematics of statistics, calculus, and probability, and its overarching architecture is the neural network (NN).

Granted, neural networks were first conceptualized around 1850, over a century before the formation of the EU. Even so, any application of AI regulation should reach back to the original conceptualization of the NN, identify sources of bias embedded in the basic theory, and take action to revamp the NN model to remove as much inherent bias as possible.

Several questions remain. What stages of the AI lifecycle should be regulated, and which should not? Should research scientists’ behaviors be legislated and controlled? How can basic theory be regulated without stifling innovation in computer science?

A note to governing bodies worldwide: define the scope of AI regulation carefully at the country, state, and local levels. Observe with open minds the EU's experience of implementing the AI Act. Expand the scope of AI legislation to include basic theory and architecture design, but do so with caution. Be wary of compliance creep, and continuously monitor the progress and regress of AI bias locally and globally.