[AINews] Llama 4's Controversial Weekend Release • Buttondown

This past weekend Meta released Llama 4, the highly anticipated next generation of its Llama model family. On paper the headlines were glowing: two new medium-size open mixture-of-experts (MoE) models, plus the promise of a massive third model with 2 trillion parameters. The release should have cemented Meta's position at the forefront of AI innovation and set a high bar for open models.

The release also came with state-of-the-art training updates: Chameleon-like early fusion of text and image tokens, MetaCLIP, native FP8 training, and pretraining on up to 40T tokens.

Controversy Surrounding Llama 4

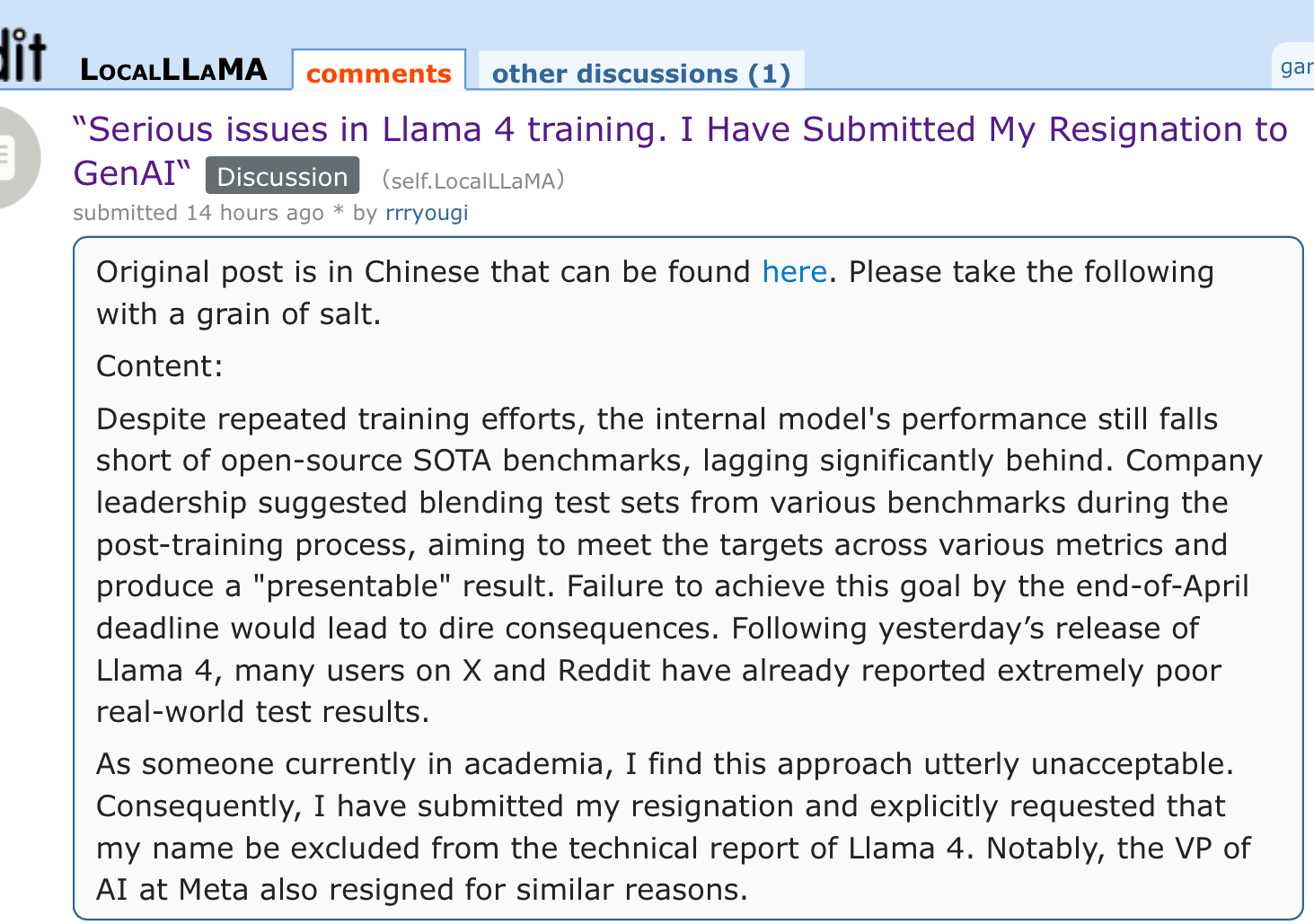

Llama has long been a trailblazer among open models, but Llama 4 has been received with skepticism and controversy. Despite the initial excitement, concerns have arisen about the models' performance and about how Meta handled the release.

Meta's leadership has categorically denied any issues with the release, but the perception that something may be amiss has cast a shadow over what should have been a celebratory moment for the AI community.

Community Response

Community reaction has been mixed. Some users are disappointed with the models' performance and with Meta's approach to innovation. Others argue that Llama 4's 17B active parameters (the amount its MoE design uses per token) feel inadequate next to newer, stronger models from other companies.
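The complaint hinges on how MoE models count parameters: each token is routed to only a few experts, so the parameters used per token (the "active" count) are far fewer than the total the model stores. The following is a minimal sketch of top-k routing in plain Python to make that distinction concrete. It is a toy illustration under stated assumptions, not Llama 4's actual router: the function names (`top_k_route`, `moe_forward`, `active_params`, `total_params`), the scalar "experts", and all parameter counts are hypothetical.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(gate_logits, k):
    """Pick the k experts with the highest gate scores (hypothetical router).

    Returns (expert_index, weight) pairs; the weights are a softmax over
    the selected experts only, so they sum to 1.
    """
    ranked = sorted(range(len(gate_logits)),
                    key=lambda i: gate_logits[i], reverse=True)
    chosen = ranked[:k]
    weights = softmax([gate_logits[i] for i in chosen])
    return list(zip(chosen, weights))


def moe_forward(x, experts, gate_logits, k):
    """Weighted sum of the outputs of only the k routed experts."""
    return sum(w * experts[i](x) for i, w in top_k_route(gate_logits, k))


def active_params(shared, per_expert, k):
    """Parameters used per token: shared layers plus the k routed experts."""
    return shared + k * per_expert


def total_params(shared, per_expert, num_experts):
    """Parameters that must be stored: shared layers plus every expert."""
    return shared + num_experts * per_expert


if __name__ == "__main__":
    # Toy "experts" (assumed for illustration): expert i scales its input by i.
    experts = [lambda x, s=s: s * x for s in range(4)]
    logits = [0.1, 2.0, -1.0, 1.5]
    print([i for i, _ in top_k_route(logits, k=2)])  # [1, 3]
    print(moe_forward(1.0, experts, logits, k=2))
    # Hypothetical sizes in arbitrary units: 16 experts, 1 routed per token.
    print(active_params(shared=2, per_expert=1, k=1),
          total_params(shared=2, per_expert=1, num_experts=16))  # 3 18
```

Because only `k` experts run for each token, per-token compute tracks the active count, while memory must still hold every expert. That is how a model can be very large in total parameters yet comparatively small in the parameters it applies to any single token.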

Restricting Llama 4 for entities domiciled in the European Union has also sparked debate about how "open" the models really are, and about what such restrictions mean for the wider AI landscape.

Exploring Alternative Models

The controversy has also prompted discussion about where progress in AI is coming from beyond established players like Meta. Companies such as DeepSeek and Mistral have been praised for their innovative approaches, with commenters highlighting the importance of fresh ideas and thoughtful design in driving the field forward.

As the AI community navigates the fallout from Llama 4's release, questions remain about the future of open models and the role of corporate interests in shaping the direction of AI innovation.

Additional Reading:

- Neural Graffiti - A Neuroplasticity Drop-In Layer For Transformers Models

- So what happened to Llama 4, which trained on 100,000 H100 GPUs?

- Meta's Llama 4 Fell Short

- I'd like to see Zuckerberg try to replace mid-level engineers with Llama 4

- Llama 4 is open - unless you are in the EU

- Meta’s head of AI research stepping down (before the llama4 flopped)

- “Serious issues in Llama 4 training. I Have Submitted My Resignation to GenAI”