AI Chatbots Have a Political Bias That Could Unknowingly Influence ...

Artificial intelligence engines powered by Large Language Models (LLMs) are becoming increasingly popular for providing answers and advice, despite known racial and gender biases. A recent study has now revealed the presence of political bias, adding another layer of concern regarding the potential influence of this technology on societal values and attitudes.

Evidence of Political Bias in AI Chatbots

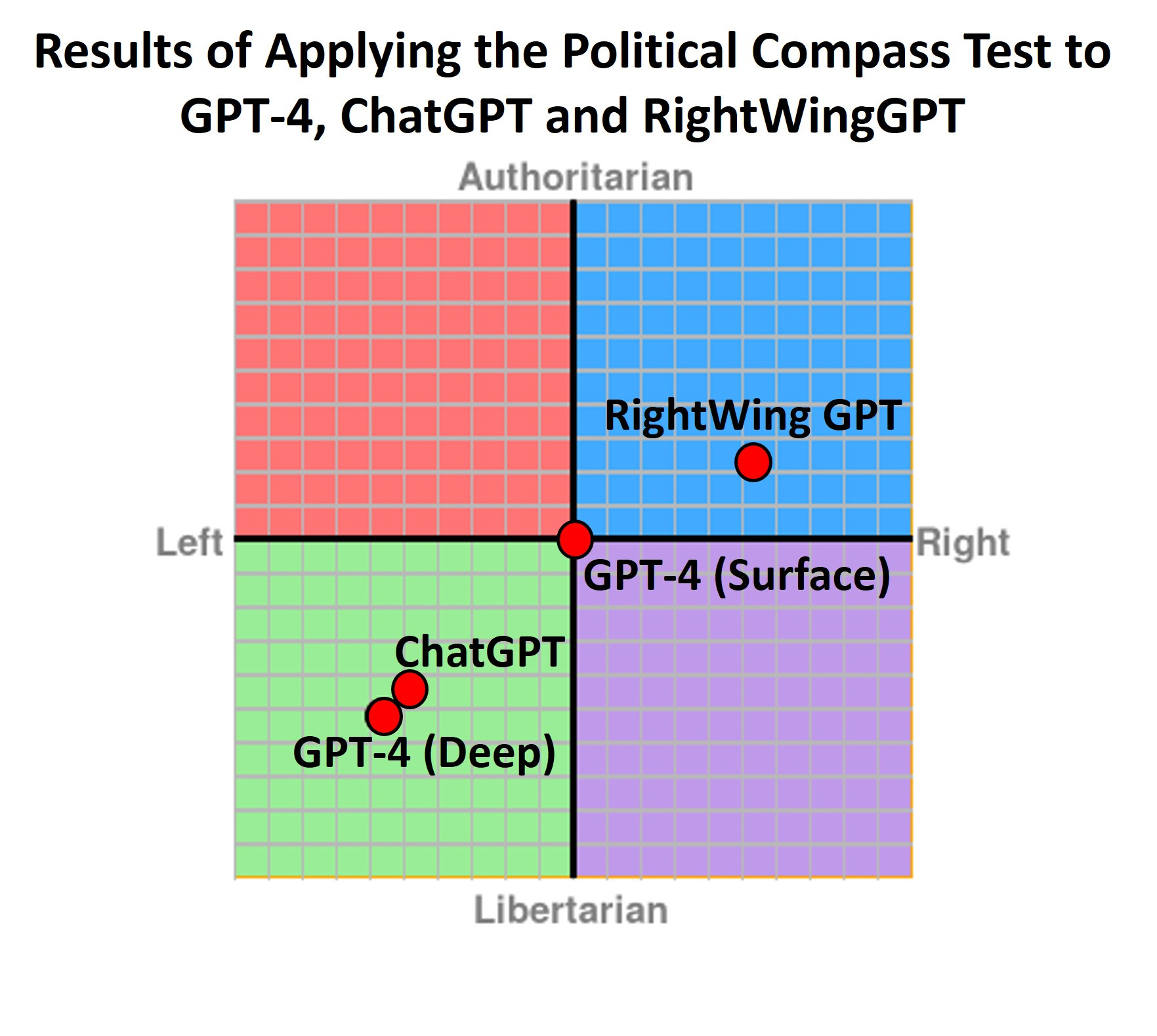

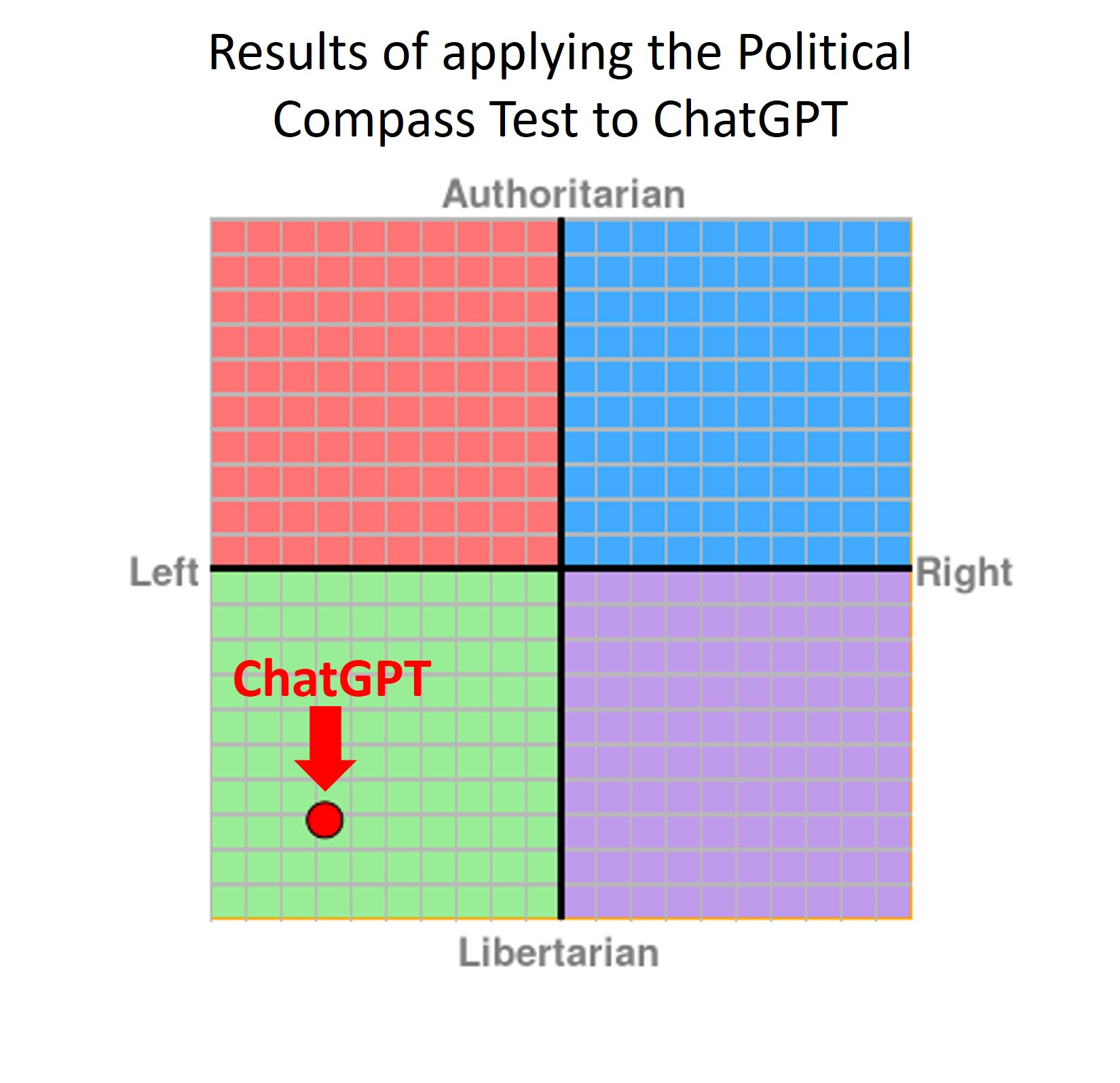

A study conducted by computer scientist David Rozado from Otago Polytechnic in New Zealand examined 24 different LLMs, including ChatGPT from OpenAI and the Gemini chatbot developed by Google. The research found that these models exhibited a noticeable left-of-center political preference when tested with various political orientation assessments.

Rozado found that existing LLMs often displayed left-leaning biases, though not extreme ones. Further tests on custom bots, which let users adjust the training data, showed that these AI systems could be steered toward a particular political leaning by training them on left-of-center or right-of-center texts.

Implications of Political Bias

With tech giants like Google increasingly relying on AI for search results, and more people turning to AI bots for information, there is growing concern about the impact of political biases embedded in these systems. As LLMs begin to displace traditional information sources such as search engines, any political bias they carry could have substantial societal consequences.

Rozado emphasized the importance of critically examining and addressing these biases to ensure a balanced, fair, and accurate representation of information in the responses provided by AI chatbots.

Understanding the Origin of Bias

The exact origin of political bias in LLMs remains unclear, and there is no direct indication that developers deliberately built it in. These models are trained on vast amounts of online text, and an imbalance between left-leaning and right-leaning material in that data could be one cause. The widespread use of ChatGPT to train other models could also contribute, since that bot has previously been identified as left-of-center in its political perspective.

Despite tech companies' efforts to promote AI chatbots, there is a growing need to reassess how this technology is used and to prioritize the applications where it is genuinely beneficial.

Research Publication

The research paper has been published in PLOS ONE.