Xavier (Xavi) Amatriain on LinkedIn: Very interesting work on Multi-agent LLM Debate systems

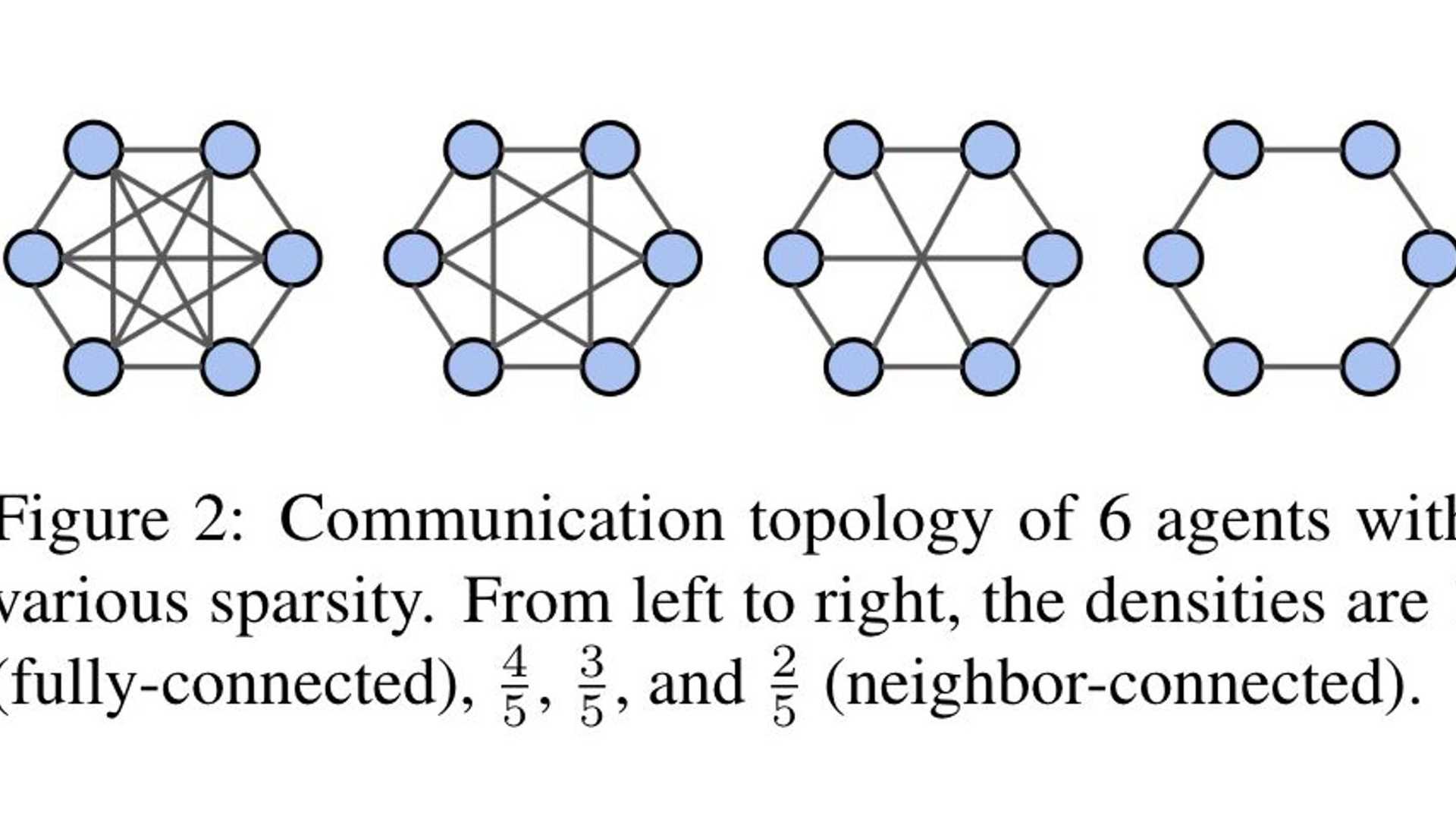

Very interesting work on Multi-agent LLM Debate systems by colleagues from our team at Google in collaboration with Google DeepMind. Sparse topologies facilitate more rounds of debates and result not only in higher accuracy (when compared to chain-of-thought or self-consistency approaches) but also much lower cost.
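To make the cost claim concrete, here is a minimal sketch of why a sparse debate topology is cheaper per round. It assumes cost is roughly proportional to how many peer answers each agent has to read in its prompt. The ring layout and the function names are illustrative assumptions, not necessarily the topologies or code used in the paper.

```python
def fully_connected(n):
    """Every agent reads every other agent's answer each round."""
    return {i: [j for j in range(n) if j != i] for i in range(n)}


def ring(n):
    """Each agent reads only its two neighbors (one illustrative sparse layout)."""
    return {i: sorted({(i - 1) % n, (i + 1) % n} - {i}) for i in range(n)}


def answers_read_per_round(topology):
    """Rough cost proxy: total peer answers placed into prompts in one round."""
    return sum(len(neighbors) for neighbors in topology.values())


n_agents = 6
dense = answers_read_per_round(fully_connected(n_agents))
sparse = answers_read_per_round(ring(n_agents))
print(f"fully connected: {dense} answers read per round")
print(f"ring (sparse):   {sparse} answers read per round")
```

Under this proxy the dense graph grows quadratically with the number of agents while the ring grows linearly, which is one way to see how a fixed budget could pay for more debate rounds.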

Increasing Value with AI Agents (Stealth)

As the authors note at the end (under Limitations), the next opportunity is to find the best balance of "connectedness." It seems the same applies to how human teams work.

Human-in-the-loop AI | Product-focused Science

Some of the multi-expert dialog ideas first explored by Mikio Nakano at Honda Research in the mid-2000s, and later in my dissertation work as behavior networks, draw a lot of parallels to recent trends in LLM-powered multi-agent conversational systems.

Head of Medical AI (Medical Generative Intelligence and Medical Language-Vision Models)

I believe dialectics and debate to be the way forward to AGI. Hegel had a point.

Tenured Researcher (IAE - CSIC), Program Director M.Sc. Data Science for Decision Making (BSE), Principal Investigator at Conflict Forecast and EconAI

Soon this literature will be ready for qualitative voting.

See more details in LMSys: https://lnkd.in/grkwxmA9

Product Manager @ Google Gemini API

We just dropped a game-changer to slash your Gemini API costs 🤯 Yesterday we launched Gemini Context Caching, saving you up to 70% on your bill 💸💸

Here's how it works. Gemini lets you add powerful AI models to create apps like:

- Email apps that summarize entire threads for you

- Storytelling apps that write the next chapter

- HR chatbots that actually understand your company's policies

To make these apps, you need to repeatedly send Gemini the same instructions, which can be pricey. But through the integration of research, software and chips came a breakthrough: 🤖 Context Caching

Just tell Google what you want to save money on and we'll take care of the rest. It’s an industry first and it’s going to be a big deal.

I'm so proud of the team for always putting developers first 🙌 Cost is always a huge part of the equation and we keep making it better.

Try Gemini Context Caching now and tell us what you think

#AI #LLM #Gemini

Leading AI Products at Google

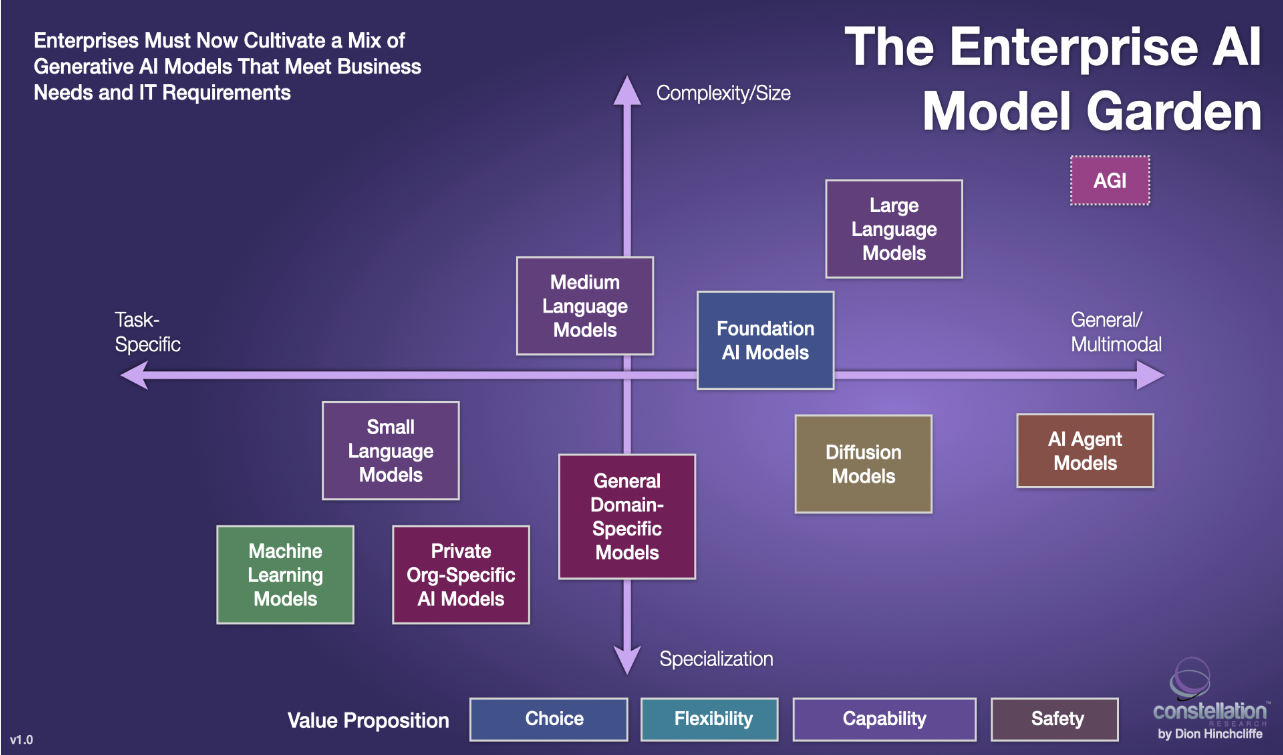

Super interesting research by Together AI where they show that combining agents built on six open source LLM foundation models beats SOTA frontier models like GPT-4o. Agents are combined using an MoE-like approach called Mixture of Agents: https://lnkd.in/g6ApuPhQ
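As a rough sketch of the idea: several "proposer" agents answer the prompt, their answers are fed back as references for another pass, and an aggregator synthesizes the final reply. The function names, the layered loop, and the stub agents below are assumptions for illustration, not Together AI's implementation. In practice each agent would wrap a call to an LLM.

```python
from typing import Callable, List

# An agent is anything that maps a prompt string to a response string.
Agent = Callable[[str], str]


def build_prompt(question: str, responses: List[str]) -> str:
    """Attach earlier responses to the question as numbered references."""
    refs = "\n".join(f"{i + 1}. {r}" for i, r in enumerate(responses))
    return f"{question}\n\nResponses from other agents:\n{refs}"


def mixture_of_agents(question: str, proposers: List[Agent],
                      aggregator: Agent, layers: int = 2) -> str:
    """Run the proposers for `layers` passes, then aggregate the last pass."""
    responses = [agent(question) for agent in proposers]
    for _ in range(layers - 1):
        context = build_prompt(question, responses)
        responses = [agent(context) for agent in proposers]
    return aggregator(build_prompt(question, responses))


def make_stub(name: str) -> Agent:
    """Hypothetical stand-in for a real model call."""
    return lambda prompt: f"{name} reply"


proposers = [make_stub(f"model-{i}") for i in range(3)]
aggregator = lambda prompt: f"synthesized from {prompt.count(' reply')} candidates"

result = mixture_of_agents("What is 2+2?", proposers, aggregator)
print(result)
```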

This finding aligns with much of what I have been saying for quite some time. Many years ago, I half-jokingly said that the "ensemble is the Master algorithm" since no matter how good your algorithm was, you could always find a way to combine it with another one in an ensemble to make it better. Similarly, more recently I have argued that we should not be chasing AGI, particularly if that is supposed to be obtained by a single model or approach (https://lnkd.in/gFhxJFrV).

Combining specialized agents in smart ways like this one is the new AI frontier.

Leading AI Products at Google

Question for my LinkedIn friends: are we overusing "that's a great question"?

Look, I get it, I come from a culture where the norm was the opposite: you would usually get the "this is a dumb question". So, I am totally on board with rewarding people for asking questions. However, if we reward *every* question, are we really rewarding anyone? Conversely, we might be inadvertently penalizing that one person we don't reward with the same compliment.

Interestingly, I went down this rabbit hole and it looks like most advice I find online agrees with me that we should not use this tactic (e.g. https://lnkd.in/g42Hcacv).

What do you think? (And, please, don't start your response with "that's a great question" 😂 )