TTT models might be the next frontier in generative AI | TechCrunch

After years of dominance by the form of AI known as the transformer, the hunt is on for new architectures. Transformers underpin OpenAI’s video-generating model Sora, and they’re at the heart of text-generating models like Anthropic’s Claude, Google’s Gemini and GPT-4o. But they’re beginning to run up against technical roadblocks — in particular, computation-related roadblocks.

The Emergence of TTT Models

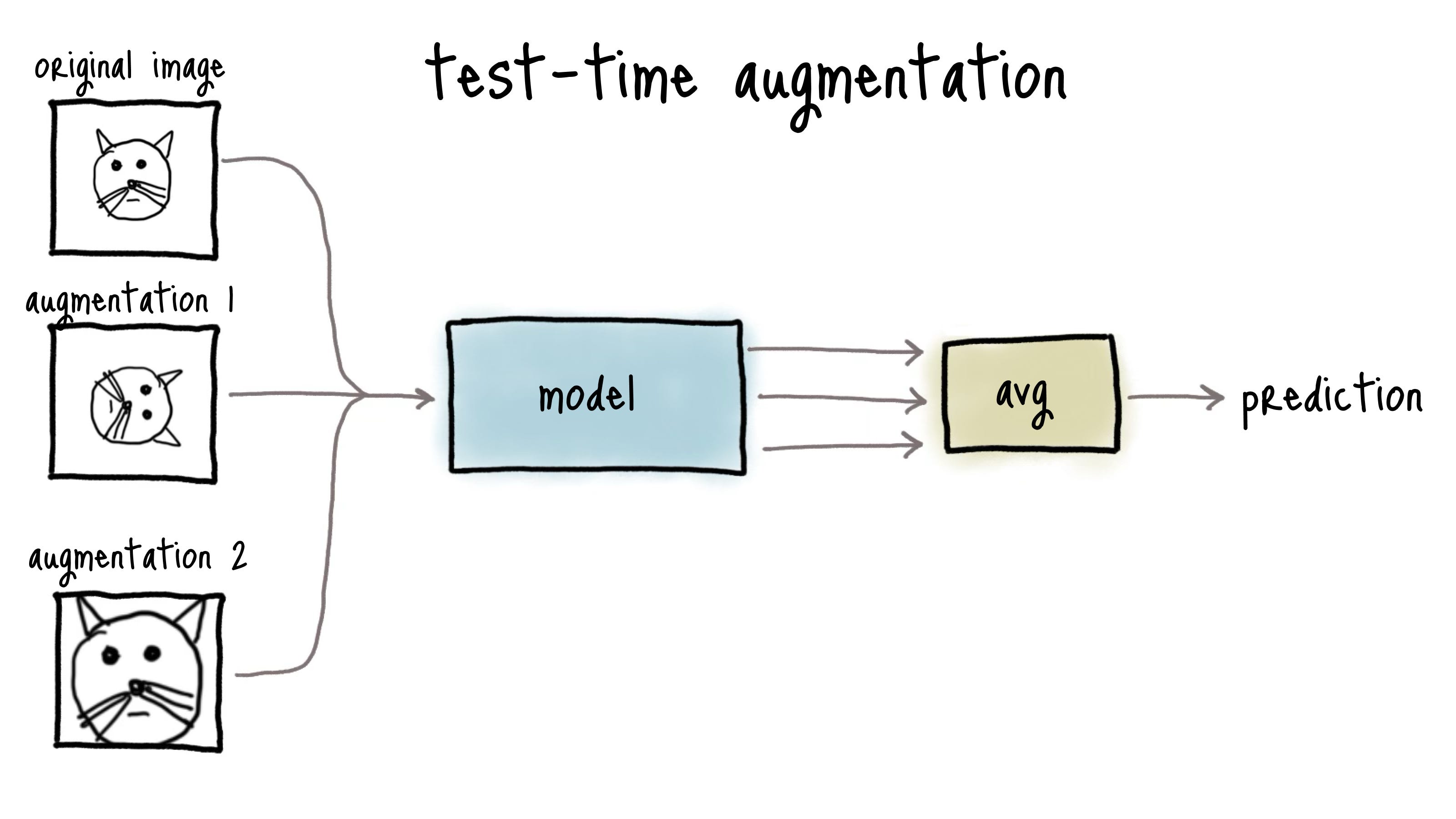

Transformers aren’t especially efficient at processing and analyzing vast amounts of data, at least when running on off-the-shelf hardware. One promising alternative, proposed this month, is test-time training (TTT). Developed by researchers at Stanford, UC San Diego, UC Berkeley, and Meta, TTT models take a different approach to handling data, one that avoids consuming excessive compute power.

The fundamental difference lies in what the model uses as memory. A transformer keeps a "hidden state," essentially a long list of data that grows as the model processes more input. TTT models replace that hidden state with a machine learning model of their own, which lets them take in large amounts of data without a matching rise in compute.
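To make the idea concrete, here is a minimal Python sketch of a memory that is itself a small model. It is an illustration under stated assumptions, not the researchers' implementation: the function name `ttt_scan`, the learning rate `lr`, the choice of a plain linear inner model, and the simple reconstruction loss are all hypothetical stand-ins chosen for readability.

```python
def ttt_scan(tokens, dim, lr=0.1):
    """Toy scan whose 'hidden state' is a dim x dim weight matrix W.

    For each token x, W takes one gradient step on the reconstruction
    loss 0.5 * ||W x - x||^2, and the layer then emits W x.
    (Hypothetical simplification: a linear inner model, no input corruption.)
    """
    W = [[0.0] * dim for _ in range(dim)]  # fixed-size state, never grows
    outputs = []
    for x in tokens:
        # Inner model's current prediction for this token.
        pred = [sum(W[i][j] * x[j] for j in range(dim)) for i in range(dim)]
        err = [pred[i] - x[i] for i in range(dim)]
        # Gradient of the loss with respect to W is the outer product err * x.
        for i in range(dim):
            for j in range(dim):
                W[i][j] -= lr * err[i] * x[j]
        # Output is computed with the freshly updated weights.
        outputs.append(
            [sum(W[i][j] * x[j] for j in range(dim)) for i in range(dim)]
        )
    return W, outputs


if __name__ == "__main__":
    # Feed the same 2-dimensional token repeatedly; W gradually learns it.
    W, outs = ttt_scan([[1.0, 0.0]] * 100, dim=2)
    print(outs[0], outs[-1])
```

In this sketch, "remembering" a token means nudging the weights rather than appending an entry to a list, so the state stays `dim x dim` however many tokens are processed.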

The Power of TTT Models

Rather than storing everything it has seen, a TTT model's internal machine learning model encodes the data it processes into representative variables called weights. As a result, the internal model stays the same size no matter how much data passes through it. The researchers believe this could let future TTT models efficiently handle billions of pieces of data, from words to images to videos, well beyond what today's models can manage.
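The size argument can be illustrated with some back-of-the-envelope arithmetic. The sketch below assumes a transformer-style memory that stores one key vector and one value vector per token, and a TTT-style memory that is a single square weight matrix. The function names and the vector width of 64 are hypothetical, picked only to show the trend.

```python
def kv_cache_entries(num_tokens, dim):
    """Numbers stored by a memory that keeps one key and one value vector per token."""
    return 2 * num_tokens * dim


def ttt_state_entries(num_tokens, dim):
    """Numbers stored by a dim x dim weight matrix; num_tokens has no effect."""
    return dim * dim


if __name__ == "__main__":
    DIM = 64  # hypothetical vector width
    for n in (1_000, 100_000, 10_000_000):
        print(n, kv_cache_entries(n, DIM), ttt_state_entries(n, DIM))
```

The first count grows in step with the number of tokens, while the second stays constant, which is the property the paragraph above describes.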

While TTT models show great promise, their role in superseding transformers is still uncertain. The researchers have developed only small models for study, making direct comparisons challenging at this stage.

Exploring Alternatives in AI

TTT is not the only alternative to transformers under investigation. AI startup Mistral, for example, is exploring models built on state space models (SSMs), which, like TTT models, appear to be more computationally efficient than transformers and able to scale to larger amounts of data.

Efforts to develop new AI architectures could democratize generative AI, making it more accessible and widespread in various industries.
