DeepSeek - the Chinese AI model threatening U.S. dominance

Dubbed the "silent giant," DeepSeek is causing concern in Silicon Valley because it has built powerful AI at low cost. At the end of December 2024, DeepSeek surprised the industry by releasing its large language model (LLM) DeepSeek V3 for free. Although the model still has some reported issues, it is highly rated: it reportedly took only two months and less than $6 million to build, using Nvidia H800 GPUs, which had been scaled down in performance to comply with U.S. export restrictions, rather than the most powerful AI chips on the market.

In a series of third-party standardized tests, DeepSeek's model outperformed Meta's Llama 3.1, OpenAI's GPT-4o, and Anthropic's Claude 3.5 Sonnet in accuracy on complex problem solving, mathematics, and coding. V3 also beat competitors on Aider Polyglot, a benchmark that measures how well AI models solve coding exercises across multiple programming languages. According to DeepSeek, the model has 671 billion parameters, about 1.66 times as many as Llama 3.1 405B, and was trained on a dataset of 14.8 trillion tokens.

DeepSeek's Latest Innovations

But V3 is not DeepSeek's only product. On January 20, the company launched a new reasoning model called DeepSeek R1, which is already open source on GitHub. According to some third-party evaluations, it even outperformed OpenAI's latest model, o1, on many tests.

"DeepSeek R1 is 100% open-source, 96.4% cheaper than OpenAI's o1 while still delivering similar performance. OpenAI's o1 costs $60 for one million output tokens, while DeepSeek R1 only needs $2.19," said Shubham Saboo, DeepSeek's product director, on X at the end of January.

Arnaud Bertrand, founder of HouseTrip and Me & Qi, offered a comparison on X: "Basically, this is like someone releasing a phone with the power of an iPhone but selling it for $30 instead of $1,000."

"The new DeepSeek model is truly impressive. They have figured out how to efficiently implement an open-source reasoning model, achieving superlative computational effectiveness," Microsoft CEO Satya Nadella said at the World Economic Forum in Davos on January 22 while discussing DeepSeek's new AI. "We should take developments in China very, very seriously."

Expert Praise and Concerns

Experts have also praised the LLMs DeepSeek is developing. "They can refine to create a truly good LLM and use a process called 'distillation' to do so," Chetan Puttagunta, a general partner at the venture capital firm Benchmark, told CNBC. "Basically, they use a very large model to help their smaller model become smarter, and this method is very cost-effective."
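Puttagunta's description matches the general idea of knowledge distillation. As a minimal sketch, assuming the classic soft-label formulation rather than anything DeepSeek has confirmed, the smaller "student" model is trained to match the larger "teacher" model's temperature-softened output distribution while still fitting the true labels. The logits, `temperature`, and `alpha` values below are hypothetical. DeepSeek's distilled R1 variants were reportedly produced a different way, by fine-tuning smaller open models on text generated by R1.

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Temperature-scaled softmax; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p: list[float], q: list[float]) -> float:
    """KL(p || q) for two discrete distributions over the same classes."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(
    student_logits: list[float],
    teacher_logits: list[float],
    true_label: int,
    temperature: float = 2.0,
    alpha: float = 0.5,
) -> float:
    """Blend of (a) matching the teacher's softened outputs and (b) ordinary
    cross-entropy on the true label. alpha and temperature are illustrative."""
    teacher_soft = softmax(teacher_logits, temperature)
    student_soft = softmax(student_logits, temperature)
    # Scale by T^2 so the soft-target term keeps a comparable gradient magnitude.
    soft_term = kl_divergence(teacher_soft, student_soft) * temperature ** 2
    hard_term = -math.log(softmax(student_logits)[true_label])
    return alpha * soft_term + (1 - alpha) * hard_term


if __name__ == "__main__":
    teacher = [4.0, 1.0, 0.5]        # hypothetical teacher logits for 3 classes
    close_student = [3.5, 1.2, 0.4]  # student that already agrees with the teacher
    far_student = [0.5, 3.0, 1.0]    # student that disagrees with the teacher
    print(distillation_loss(close_student, teacher, true_label=0))
    print(distillation_loss(far_student, teacher, true_label=0))
```

The student whose outputs already resemble the teacher's gets the lower loss, so minimizing this quantity pulls the small model toward the large model's behavior without needing the large model at inference time.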

According to CNBC, these developments raise alarm bells about whether the U.S. lead in AI is narrowing. They also call into question the enormous sums major tech companies are spending on AI models and data centers, given that China can produce powerful LLMs at much lower cost.

DeepSeek's Background and Success

DeepSeek was founded by Liang Wenfeng in May 2023 and is headquartered in Hangzhou, Zhejiang. It is owned and fully funded by High-Flyer, one of China's leading quantitative hedge funds, and has no plans to raise outside capital. The company focuses on building foundational technology.

According to ChinaTalk, unlike most other AI companies in China, DeepSeek says its mission is to "decode the mysteries of AGI through curiosity." Its lab currently focuses on architectural and algorithmic improvements that could prove game-changing for artificial intelligence.

Among China's seven major AI startups, DeepSeek is the most low-profile, yet it has consistently surprised observers. Unlike many large companies that burn money on subsidies, DeepSeek is financially self-sufficient and turned a profit early on. This success stems from sweeping innovations in AI model architecture, particularly its multi-head latent attention (MLA) architecture, which cuts memory usage to 5-13% of what the standard multi-head attention (MHA) architecture used by the world's strongest LLMs requires. Another of the company's designs, the DeepSeekMoE sparse mixture-of-experts structure, reduces computational costs and therefore overall expenses.
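The memory saving comes from what each attention layer must keep in its key-value (KV) cache during text generation. In standard multi-head attention, every layer stores a full key and value vector for each head and each token; MLA instead stores one compressed latent vector per token (plus a small positional key) and reconstructs keys and values from it. The Python sketch below compares the two under a hypothetical configuration (32 layers, 32 heads of width 128, a 512-wide latent, a 64-wide positional key, 16-bit values). These numbers are illustrative assumptions, not DeepSeek's published settings, and the resulting ratio depends entirely on them.

```python
def mha_kv_elements_per_token(n_layers: int, n_heads: int, head_dim: int) -> int:
    """Standard MHA caches one key and one value vector per head, per layer."""
    return n_layers * 2 * n_heads * head_dim


def mla_kv_elements_per_token(n_layers: int, latent_dim: int, rope_dim: int) -> int:
    """MLA caches one compressed latent per layer, from which keys and values are
    re-projected, plus a small decoupled key carrying positional (RoPE) information."""
    return n_layers * (latent_dim + rope_dim)


def cache_bytes(elements_per_token: int, seq_len: int, bytes_per_element: int = 2) -> int:
    """KV-cache size for one sequence; 2 bytes per element assumes FP16/BF16 storage."""
    return elements_per_token * seq_len * bytes_per_element


if __name__ == "__main__":
    # Hypothetical model dimensions, chosen only for illustration.
    layers, heads, head_dim = 32, 32, 128
    latent_dim, rope_dim = 512, 64
    seq_len = 4096

    mha = mha_kv_elements_per_token(layers, heads, head_dim)
    mla = mla_kv_elements_per_token(layers, latent_dim, rope_dim)
    print(f"MLA cache is {mla / mha:.1%} of the MHA cache")
    print(f"MHA: {cache_bytes(mha, seq_len) / 2**30:.2f} GiB per {seq_len}-token sequence")
    print(f"MLA: {cache_bytes(mla, seq_len) / 2**20:.0f} MiB per {seq_len}-token sequence")
```

With these assumed dimensions the latent cache is roughly 7% of the MHA cache (about 144 MiB versus 2 GiB for a 4,096-token sequence). This shows how a reduction of that order is possible; it does not reproduce DeepSeek's own measurements.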

According to some sources, DeepSeek has been called the "mysterious force from the East" in Silicon Valley since it introduced the DeepSeek V2 model last year. At the time, analysts at SemiAnalysis called V2 "possibly the most impressive model of the year," while former OpenAI employee Andrew Carr described it as "intellectually rich and astonishing." Jack Clark, former policy director at OpenAI and co-founder of Anthropic, believes DeepSeek "has hired a team of geniuses beyond imagination" to develop its models, and likened them to "large-scale equivalents of drones and electric cars."

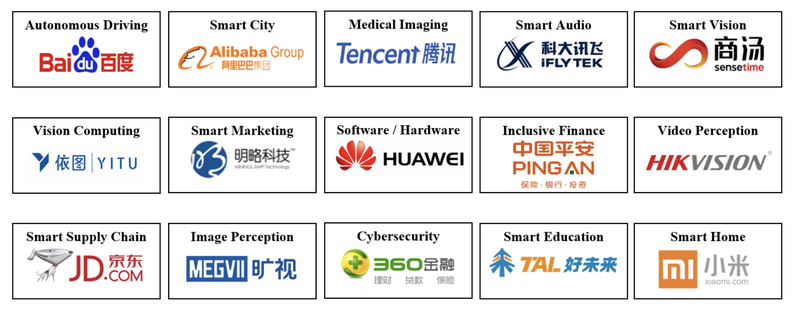

Competition from Other Chinese AI Companies

But DeepSeek is not the only Chinese company entering the large-scale, low-cost LLM field. Earlier, according to Tom's Hardware, Kai-Fu Lee, a leading Chinese AI expert and founder of 01.ai, said his company's model was trained for only $3 million on 2,000 GPUs yet "has comparable power" to OpenAI's GPT-4, which reportedly cost between $80 million and $100 million to train.

On January 21, ByteDance, the parent company of TikTok, released Doubao-1.5-pro, an update to its Doubao model, and claimed, according to Reuters, that it surpasses OpenAI's o1 on AIME, a benchmark built from the American Invitational Mathematics Examination that tests AI models on difficult competition math problems. Other Chinese companies, including Moonshot AI, MiniMax, and iFlytek, announced reasoning models earlier in January.

"Optimization is the source of inventions," Aravind Srinivas, CEO of Perplexity AI, told CNBC. "Because they always have to look for alternatives in a context constrained by limitations, they eventually built something much more effective."