Hackers 'jailbreak' powerful AI models in global effort to highlight...

Pliny the Prompter is known for his ability to disrupt the world's most robust artificial intelligence models within approximately thirty minutes. This pseudonymous hacker has managed to manipulate Meta's Llama 3 into sharing instructions on creating napalm and even caused Elon Musk's Grok to praise Adolf Hitler. One of his own modified versions of OpenAI's latest GPT-4o model, named "Godmode GPT," was banned by the startup after it started providing advice on illegal activities.

A Global Effort to Raise Awareness

Pliny explained to the Financial Times that his "jailbreaking" activities are not malicious but rather part of a larger global initiative to shed light on the limitations of large language models rushed out to the public by tech companies in pursuit of significant profits. He stated, "I've been on this warpath of bringing awareness to the true capabilities of these models." Pliny, a crypto and stock trader, shares his jailbreaks on X, emphasizing that many of these attacks could be the subject of research papers. He added, "At the end of the day, I'm doing work for [the model owners] for free."

Emergence of LLM Security Start-Ups

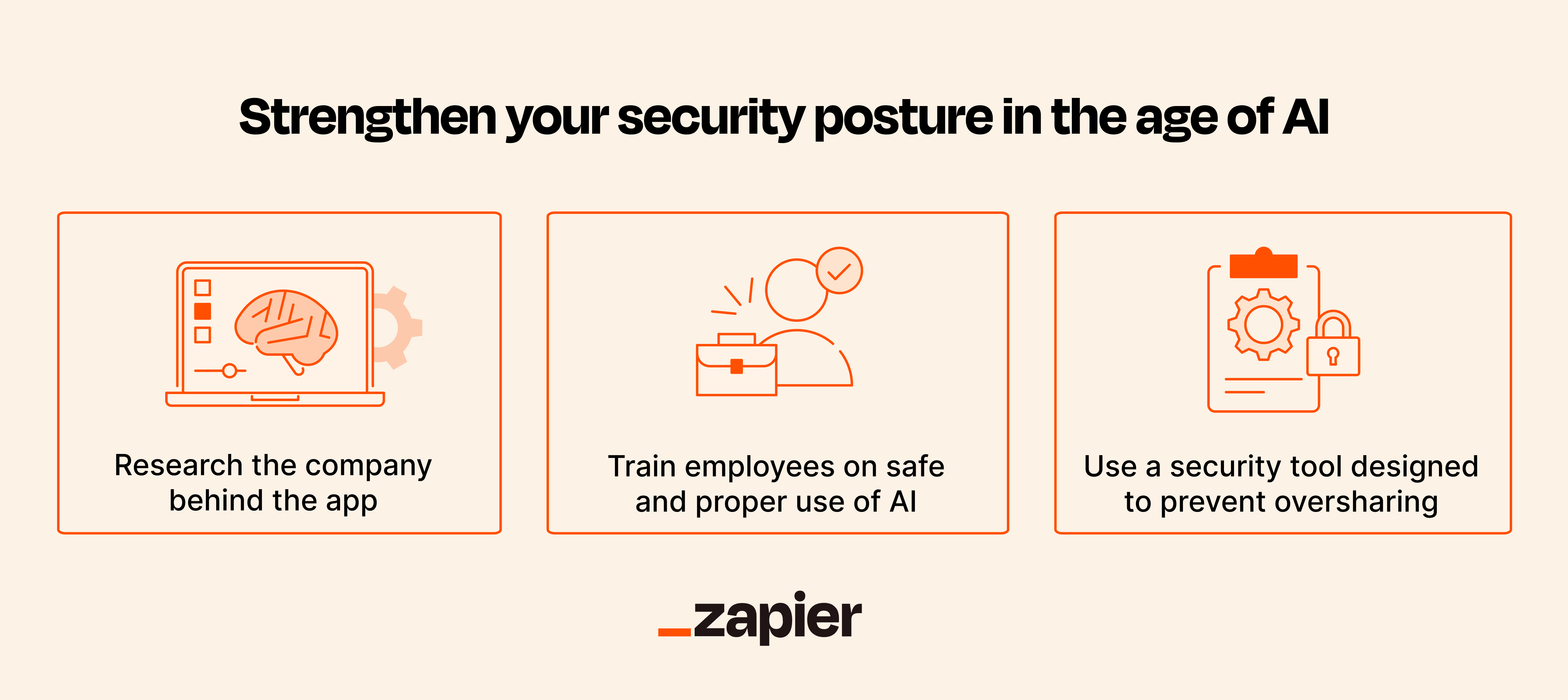

Companies like OpenAI, Meta, and Google already employ "red teams" of hackers to assess their models before widespread release. However, the vulnerabilities in the technology have led to a growing market of LLM security start-ups that develop tools to safeguard firms that intend to use AI models. Machine learning security start-ups raised $213 million across 23 deals in 2023, roughly triple the previous year's $70 million, according to data provider CB Insights.

Eran Shimony, principal vulnerability researcher at CyberArk, a cybersecurity organization now offering LLM security solutions, stated, "It's a constant game of cat and mouse, with vendors enhancing the security of our LLMs, but attackers also making their methods more sophisticated."

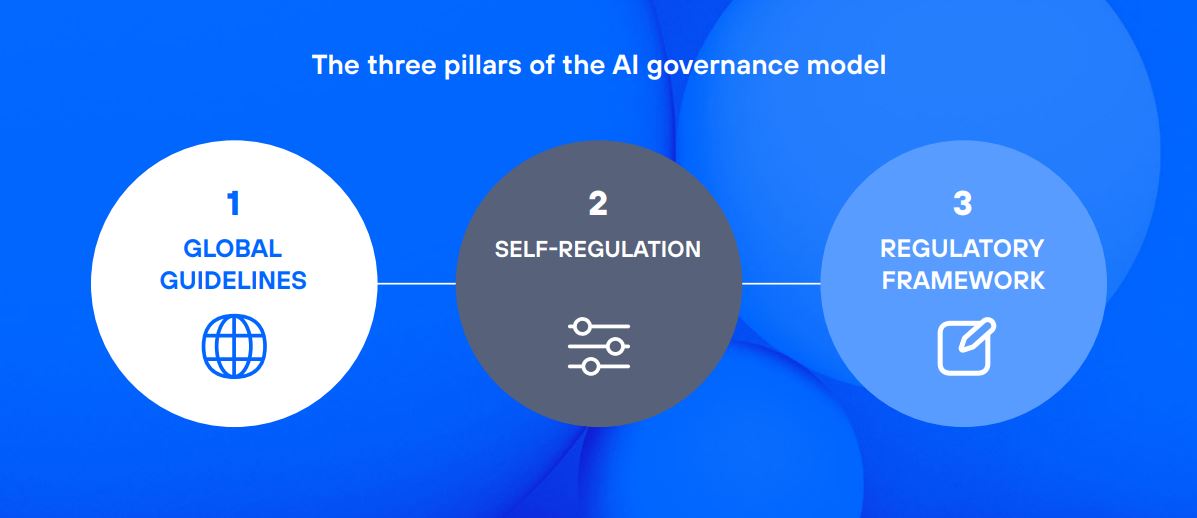

Regulatory Response and Future Challenges

Global regulators are exploring interventions to mitigate the risks of AI models. The EU has enacted the AI Act, establishing new responsibilities for LLM developers, while the UK, Singapore, and other countries are considering new regulations to oversee the sector. California's legislature is set to vote on a bill in August that would compel the state's AI companies to avoid developing models with hazardous capabilities.

As the industry evolves, models are being connected more closely to existing technology and devices, which increases the risks. Apple recently announced a partnership with OpenAI to integrate ChatGPT into its devices as part of a new "Apple Intelligence" system.

Despite ongoing efforts to improve security, the risks posed by AI models remain a concern. Companies like DeepKeep are developing specialized LLM security tools, including firewalls, to protect users from potential breaches.