TokenSkip: Optimizing Chain-of-Thought Reasoning in LLMs

Large Language Models (LLMs) have made significant progress on complex reasoning tasks thanks to Chain-of-Thought (CoT) prompting. However, longer CoT sequences carry a substantial computational cost, increasing both inference latency and memory requirements. Because LLMs decode autoregressively, generating one token at a time, processing time and key-value cache memory grow roughly in proportion to the length of the CoT, while the cost of the attention layers grows quadratically with sequence length.
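
As a rough illustration of these scaling behaviors, the sketch below counts per-sequence decoding costs for a CoT of n generated tokens. The function name `decode_costs` and the token counts 500 and 300 are hypothetical, and the model deliberately ignores prompt tokens and constant factors such as hidden size and layer count.

```python
def decode_costs(n_tokens: int) -> dict:
    """Simplified cost model for autoregressively generating n_tokens.

    decode_steps: one forward pass per generated token (linear in n).
    kv_cache_entries: cached key/value positions per layer (linear in n).
    attention_pairs: query-key scores computed over all steps; step t attends
        to t positions, so the total is n(n+1)/2 (quadratic in n).
    """
    return {
        "decode_steps": n_tokens,
        "kv_cache_entries": n_tokens,
        "attention_pairs": n_tokens * (n_tokens + 1) // 2,
    }


full = decode_costs(500)        # hypothetical full-length CoT
compressed = decode_costs(300)  # the same CoT made 40% shorter
for key in full:
    print(f"{key}: {compressed[key] / full[key]:.2f} of original")
```

Under this simplified model, a CoT that is 40% shorter needs 40% fewer decoding steps and cache entries but only about 36% of the original attention work, which is why shortening the CoT pays off disproportionately in the attention layers.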

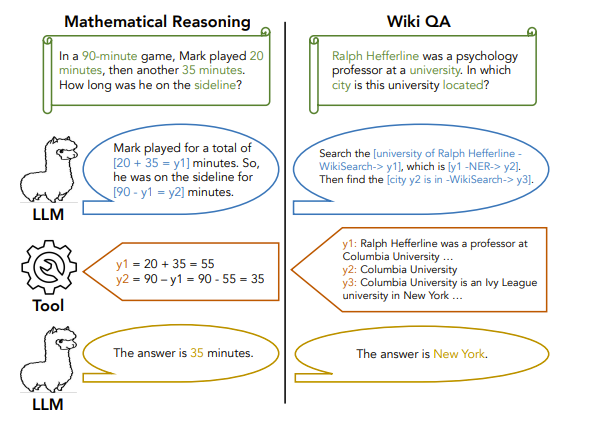

Efforts to balance reasoning accuracy against computational efficiency have produced a range of methods for making CoT reasoning cheaper. Some streamline the reasoning process by simplifying or skipping steps, while others generate steps in parallel or compress the reasoning into continuous latent representations.

TokenSkip: A Novel Approach

Researchers from The Hong Kong Polytechnic University and the University of Science and Technology of China have introduced TokenSkip, an approach that lets LLMs skip less important tokens within CoT sequences while preserving the connections between critical reasoning tokens. How aggressively the reasoning is compressed is controlled by an adjustable compression ratio.
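
To make the idea of a compression ratio concrete, here is a minimal sketch that keeps roughly `ratio * len(tokens)` of the highest-scoring tokens and returns them in their original order. The function `compress_cot` and the importance scores are hypothetical; in TokenSkip the scores come from its importance scoring mechanism, not from a hand-written list.

```python
def compress_cot(tokens: list[str], scores: list[float], ratio: float) -> list[str]:
    """Keep roughly ratio * len(tokens) of the most important tokens (at least one).

    ratio=1.0 keeps everything; smaller ratios skip more low-importance tokens.
    """
    if len(tokens) != len(scores):
        raise ValueError("tokens and scores must have the same length")
    if not 0.0 < ratio <= 1.0:
        raise ValueError("ratio must be in (0, 1]")
    keep = max(1, round(ratio * len(tokens)))
    # Rank positions by descending score; ties go to the earlier position.
    ranked = sorted(range(len(tokens)), key=lambda i: (-scores[i], i))[:keep]
    # Restore the original order so the surviving tokens still read left to right.
    return [tokens[i] for i in sorted(ranked)]


tokens = ["So", "the", "total", "is", "3", "+", "4", "=", "7"]
scores = [0.1, 0.2, 0.6, 0.3, 0.9, 0.8, 0.9, 0.7, 1.0]  # made-up importance scores
print(compress_cot(tokens, scores, 0.6))  # → ['3', '+', '4', '=', '7']
```

With a ratio of 0.6, five of the nine tokens survive: the filler words are skipped and only the arithmetic remains.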

TokenSkip rests on the premise that reasoning tokens differ in how much they contribute to reaching the final answer. It works in two main phases: training and inference. During training, TokenSkip first generates CoT trajectories with the target LLM, then prunes each trajectory to a randomly selected compression ratio, dropping the tokens that an "importance scoring" mechanism ranks lowest. The target LLM is then fine-tuned on these compressed trajectories so that it learns to produce shortened CoTs.
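
The training data preparation step can be sketched as follows, assuming each trajectory is already tokenized and paired with its question and answer. The candidate ratio set `RATIOS`, the stand-in scorer `toy_score`, and the helper names are hypothetical illustrations, not TokenSkip's actual code.

```python
import random
from dataclasses import dataclass

# Hypothetical candidate compression ratios; 1.0 means "no compression".
RATIOS = [0.5, 0.6, 0.7, 0.8, 0.9, 1.0]


@dataclass
class TrainingExample:
    question: str
    ratio: float
    compressed_cot: list[str]
    answer: str


def prune(tokens: list[str], scores: list[float], ratio: float) -> list[str]:
    """Keep roughly ratio * len(tokens) of the highest-scoring tokens, in order."""
    keep = max(1, round(ratio * len(tokens)))
    top = sorted(range(len(tokens)), key=lambda i: (-scores[i], i))[:keep]
    return [tokens[i] for i in sorted(top)]


def toy_score(tokens: list[str]) -> list[float]:
    """Hypothetical scorer: digits and operators matter, filler words do not."""
    return [1.0 if any(c.isdigit() or c in "+-*/=" for c in t) else 0.1 for t in tokens]


def build_dataset(trajectories, score_fn, rng: random.Random) -> list[TrainingExample]:
    """trajectories: (question, cot_tokens, answer) triples sampled from the target LLM."""
    dataset = []
    for question, cot_tokens, answer in trajectories:
        ratio = rng.choice(RATIOS)  # one randomly selected ratio per trajectory
        compressed = prune(cot_tokens, score_fn(cot_tokens), ratio)
        dataset.append(TrainingExample(question, ratio, compressed, answer))
    return dataset


trajectories = [
    ("What is 3 + 4?", ["So", "the", "total", "is", "3", "+", "4", "=", "7"], "7"),
    ("What is 2 * 5?", ["We", "multiply", "2", "*", "5", "=", "10"], "10"),
]
for ex in build_dataset(trajectories, toy_score, random.Random(0)):
    print(ex.ratio, ex.compressed_cot)
```

Recording the sampled ratio alongside each compressed trajectory is what later allows the ratio to be supplied as part of the model's input.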

A key advantage of TokenSkip is that inference keeps the standard autoregressive decoding procedure; the efficiency gain comes from the fine-tuned model having learned to skip less important tokens, so it generates shorter CoTs. In the input format, the question and the desired compression ratio are each followed by an end-of-sequence token, which lets the user control how strongly the reasoning is compressed. This substantially reduces computational overhead with only a small loss in reasoning accuracy.
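
A minimal sketch of this input format, and of ordinary autoregressive decoding over it, follows. The `<eos>` string, the function names, the spacing, and the trailing EOS after the answer are assumptions; the real separator is whatever end-of-sequence token the model's tokenizer defines, and `next_token` is a hypothetical stand-in for the fine-tuned model.

```python
from typing import Callable

EOS = "<eos>"  # hypothetical placeholder; the real token depends on the model's tokenizer


def format_prompt(question: str, ratio: float) -> str:
    """Inference input: question, EOS, target compression ratio, EOS."""
    return f"{question}{EOS}{ratio}{EOS}"


def format_training_text(question: str, ratio: float, compressed_cot: str, answer: str) -> str:
    """Fine-tuning sequence: the same prefix, then the compressed CoT and answer.

    The trailing EOS (assumed) teaches the model where to stop.
    """
    return f"{format_prompt(question, ratio)}{compressed_cot} {answer}{EOS}"


def generate(prompt: str, next_token: Callable[[str], str], max_new_tokens: int = 256) -> str:
    """Plain autoregressive decoding: each step conditions on the prompt plus all output so far."""
    text, output = prompt, []
    for _ in range(max_new_tokens):
        token = next_token(text)
        if token == EOS:  # assumed stop condition
            break
        output.append(token)
        text += token
    return "".join(output)


prompt = format_prompt("What is 3 + 4?", 0.6)
print(prompt)  # → What is 3 + 4?<eos>0.6<eos>
scripted = iter(["3", " +", " 4", " =", " 7", EOS])  # stand-in for a fine-tuned model
print(generate(prompt, lambda _text: next(scripted)))  # → 3 + 4 = 7
```

The decoding loop itself is unchanged from any other LLM; under this sketch, any speedup comes entirely from the model emitting fewer CoT tokens when a smaller ratio is requested.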

Results and Impact

Experimental results show that larger models maintain their performance better under more aggressive compression. For instance, on GSM8K, Qwen2.5-14B-Instruct reduces its reasoning tokens by 40% with a performance drop of only 0.4%. TokenSkip also outperforms alternative approaches such as prompt-based reduction, which does not reliably reach the target compression ratio, and truncation, which degrades accuracy; TokenSkip adheres to the specified ratio while largely preserving reasoning ability.

On the MATH-500 dataset, TokenSkip reduces token usage by 30% with a performance drop of less than 4%. By introducing a controllable compression mechanism based on token importance, TokenSkip enables LLMs to handle complex reasoning tasks with lower computational overhead, and it points to a promising direction for further research on efficient reasoning in LLMs.

For more details, see the TokenSkip paper.