ChatGPT o3 hallucinations might be a big issue for reasoning AI

As soon as ChatGPT became widely available, it stunned the world with its ability to answer questions in natural language almost instantly. It still does that today, and its performance has improved significantly. However, users quickly discovered that chatbots like ChatGPT do not always provide accurate information; they can hallucinate convincingly. That's why it's crucial to ask for sources and fact-check the information AI provides.

Advancements in AI

OpenAI and rival AI firms have made significant progress over the years. Their chatbots now cite sources for their claims, especially when an answer draws on internet searches. Despite these improvements, users should still request clear, working links for all information shared and correct the AI when it provides inaccurate information.

ChatGPT o3 and o4-mini

ChatGPT o3 and o4-mini are among the most advanced reasoning models, surpassing the performance of ChatGPT o1 in various fields. However, users have reported that these newer models seem to be hallucinating more than their predecessors, a fact that OpenAI has acknowledged. The reason behind this behavior remains unclear.

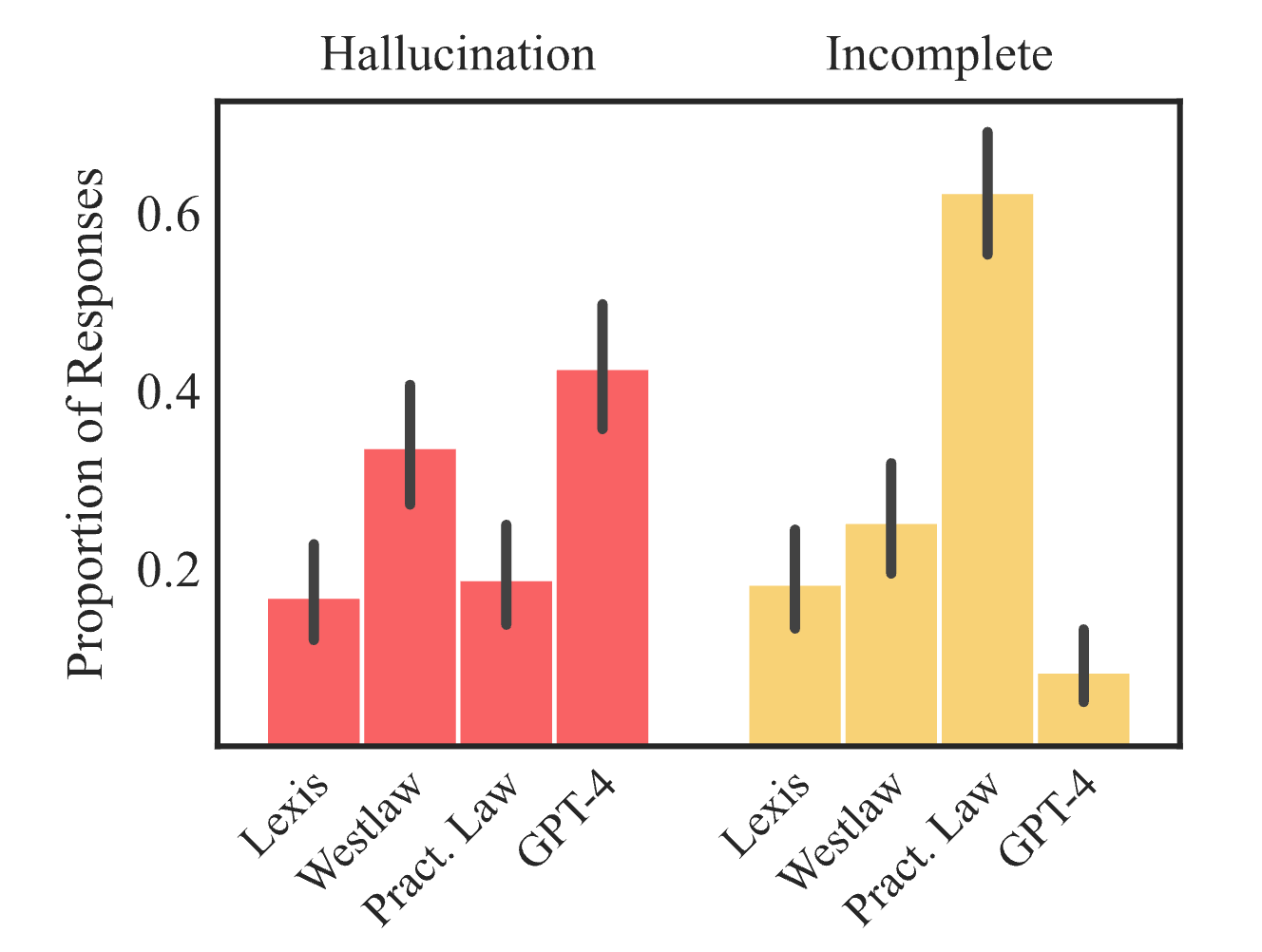

OpenAI published detailed hallucination statistics for o3 and o4-mini in the models' system card, and the figures show both models hallucinating more often than o1.

Research and Observations

OpenAI's own testing found that o4-mini underperforms o1 and o3 in some respects, while o3 tends to make more claims overall. That produces more accurate claims, but also more inaccurate, hallucinated ones. More research is needed to understand the cause of this discrepancy.

The table published by the OpenAI team shows that ChatGPT o3 is more accurate than o1 but hallucinates more often. The smaller o4-mini, meanwhile, is less accurate than both o1 and o3 and also hallucinates more often than either.

The Challenge of Hallucinations

Despite advances such as internet search and in-depth image analysis, hallucinations remain a challenge. These models can extract information from the web effectively, but they still struggle to avoid inventing false facts along the way.

It is striking that OpenAI has built reasoning models capable of impressive tasks, yet hallucination rates are rising and there is still no clear solution.

Hallucinations from the o3 and o4-mini models may not be common in everyday use, but it is essential to stay vigilant and verify the claims AI models make for the foreseeable future.