Most people understand that on-device AI requires a powerful NPU to work properly, but there are other requirements that get far less attention, like RAM and storage. And we have to face the understandable reality of upsell: Some AI features will be limited to new devices only so that hardware makers can benefit from an accelerated upgrade cycle.

Will it work?

The non-NPU hardware requirements first came to light when Google brought its Gemini Nano on-device small language model (SLM) to the Pixel 8 Pro in late 2023. They explain why Copilot+ PCs have a 16 GB RAM minimum, compared to 4 GB for other Windows 11 PCs, and a 256 GB (non-HDD) storage minimum, compared to 64 GB (which can be HDD). And now we’re seeing this issue again with Apple Intelligence, which will be backported to the iPhone 15 Pro series but not the non-Pro iPhone 15s.

Apple Intelligence and Its Hardware Requirements

On its Apple Intelligence web page, Apple explains that its hardware-accelerated, hybrid AI system will be made available on all Apple Silicon (M1, M2, M3, M4 series) Macs and iPads, and on the iPhone 15 Pro and Pro Max. But those are the only two iPhones supported: The iPhone 15 and iPhone 15 Plus don't make the cut. Unlike the Windows 11 hardware requirements, this cutoff isn't artificial. The iPhone 15s fall short in two key areas for on-device AI.

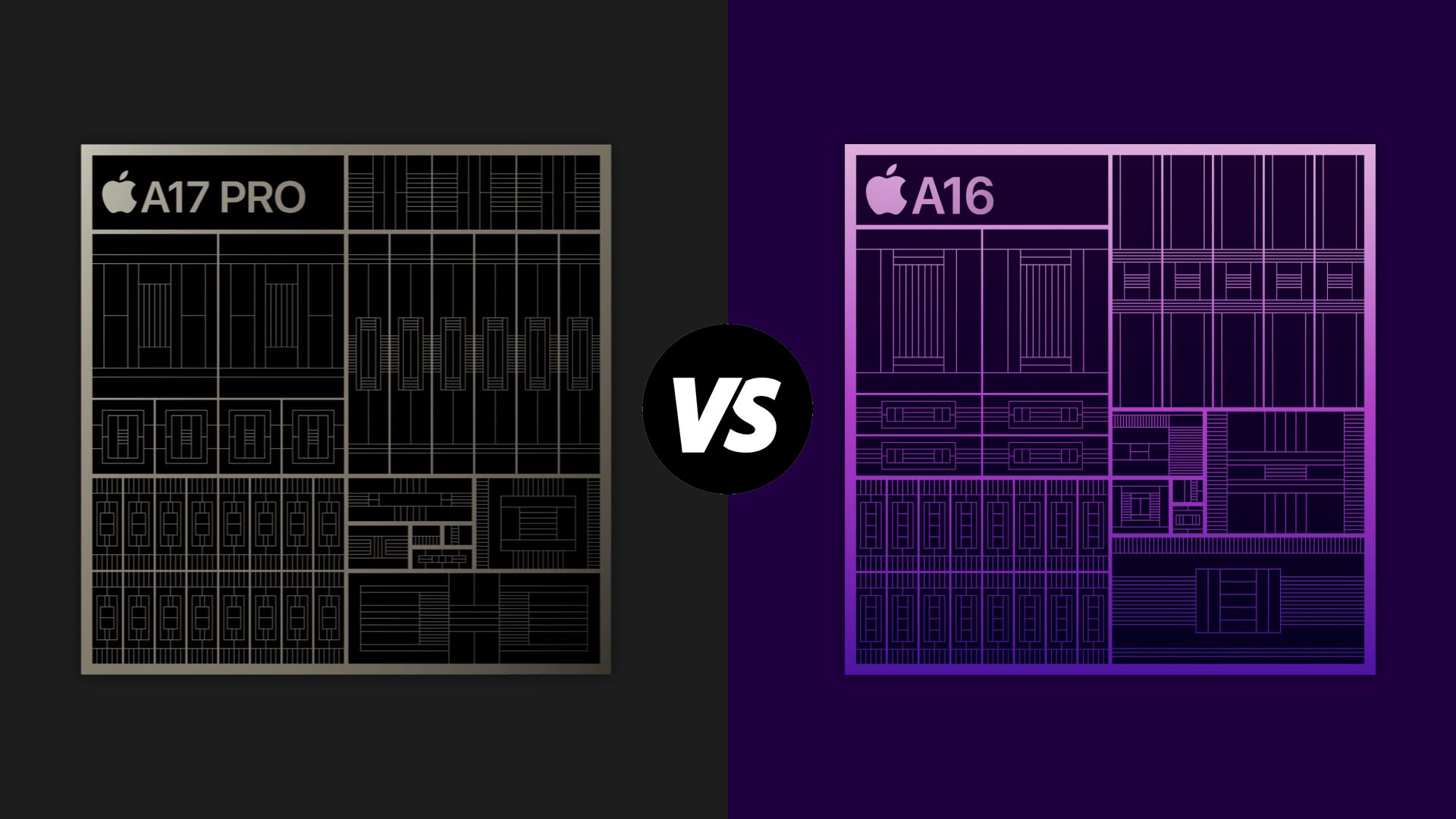

Yes, the first one is the NPU. The base iPhone 15s have a lesser Apple Silicon processor (an A16 Bionic vs. the Pro’s A17 Pro) with a far less powerful NPU that delivers just 17 TOPS of hardware-accelerated AI performance. The A17 Pro is about twice as fast, at 35 TOPS.

But it’s not just the NPU. The iPhone 15s also don’t have enough RAM to handle on-device AI: 6 GB vs. the 8 GB in the Pro models. Because of AI, phone makers will be installing much more RAM in their devices than they have until now.

Storage may also be a concern (the base iPhone 15s can be had with as little as 128 GB of storage, compared to the 256 GB minimum on the Pro Max), but it looks like Apple will use only a handful of on-device models. It's likely that the processor/NPU and RAM differences are the bigger concern.

And I believe that because of what happened with Google. When Google announced its Pixel 8 family of phones in late 2023, it heavily promoted a range of AI capabilities, as it did with earlier Pixels. But that December, the tech giant announced its Gemini family of AI models, and that the smallest of those models, Gemini Nano, would be used on-device on the Pixel 8 Pro, thanks to a Pixel Feature Drop.

Although this was the first time a phone maker put a modern SLM on a phone, Gemini Nano is still used for just two AI-accelerated features, as was the case at its launch. “As the first smartphone engineered for Gemini Nano, the Pixel 8 Pro uses the power of Google Tensor G3 to deliver two expanded features: Summarize in Recorder and Smart Reply in Gboard,” Google explained.