ChatGPT Is Inaccurate When Answering Computer Programming Questions

A team of computer scientists at Purdue University has conducted a study showing that ChatGPT, a popular large language model (LLM), is frequently inaccurate when responding to computer programming queries. For the study, published in the Proceedings of the CHI Conference on Human Factors in Computing Systems, the team presented ChatGPT with programming questions sourced from Stack Overflow and evaluated the correctness of its responses.

Research Findings

The team also presented its findings at the Conference on Human Factors in Computing Systems (CHI 2024), which took place from May 11 to May 16. ChatGPT and similar LLMs have recently surged in popularity among the public, but alongside valuable information these tools have been reported to produce a considerable number of inaccuracies.

Many programming students have embraced LLMs not only for help writing code but also for answering programming-related questions. For instance, a student might ask ChatGPT about the difference between bubble sort and merge sort, or ask it to explain recursion. The Purdue team set out to evaluate how accurately ChatGPT answers such queries.

Evaluation Process

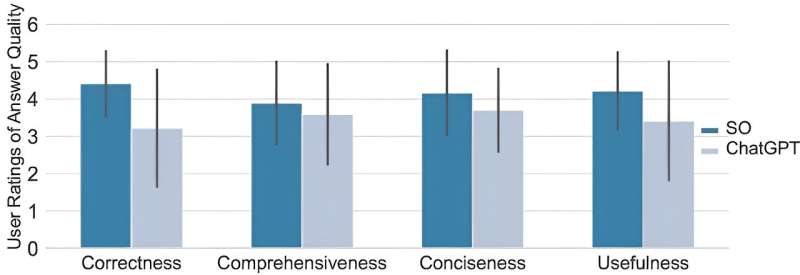

Focusing solely on ChatGPT, the researchers selected 517 questions from Stack Overflow to assess the model's performance. They found that 52% of ChatGPT's answers contained incorrect information, and that its responses tended to be more verbose than those provided by human experts.

Alarmingly, participants in the team's user study still preferred ChatGPT's responses 35% of the time, and they failed to spot the errors in its incorrect answers 39% of the time.

Source: Techxplore