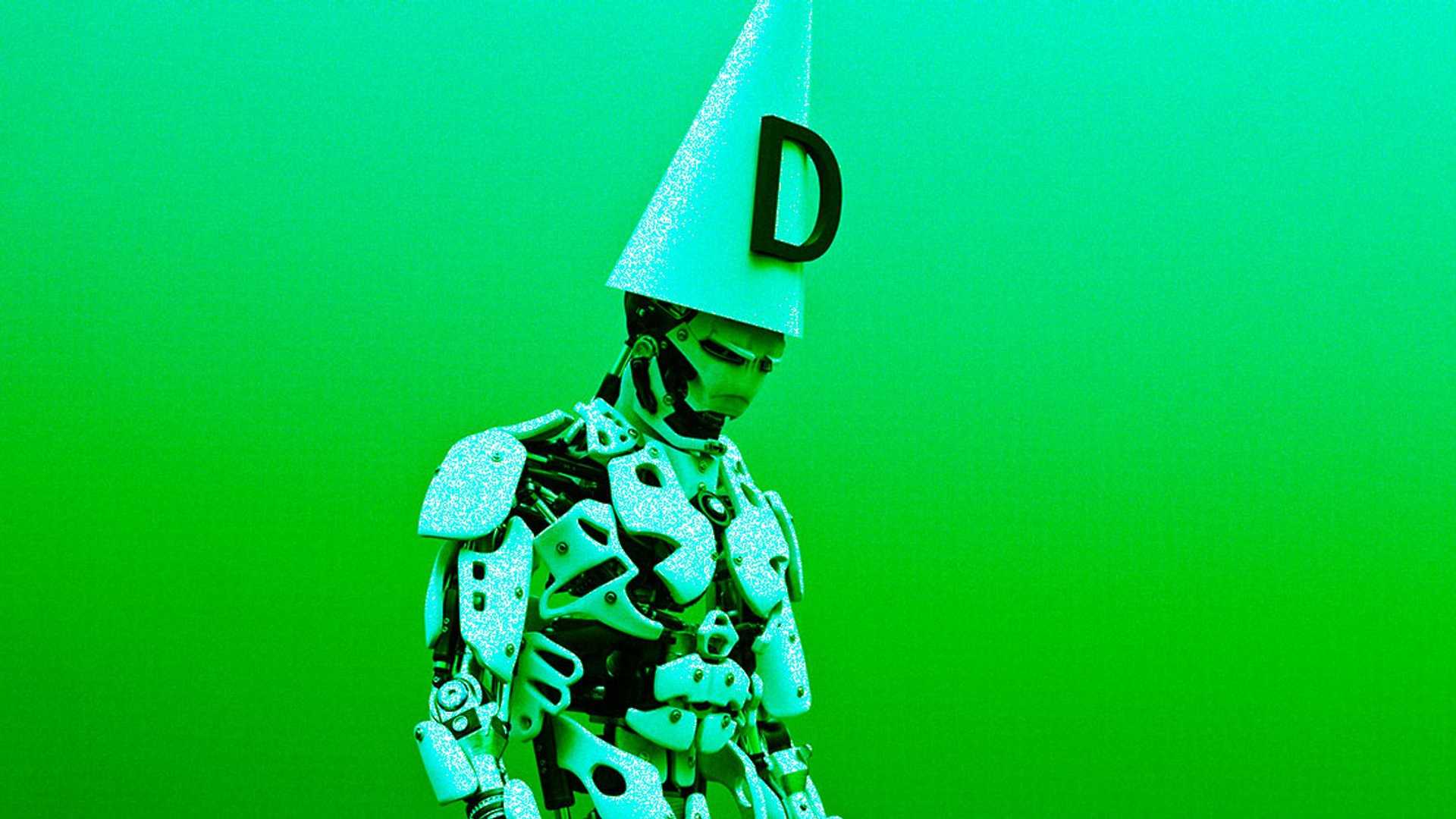

Your Writing Is Not Safe with ChatGPT, Despite One Author's Controversial Advice

If you're an aspiring writer, you might have considered getting feedback on your work from other writers. However, one author, Lauren Kay, has taken an unusual approach by recommending the use of OpenAI's ChatGPT. In a now-deleted TikTok video, Kay suggested that writers could get an "objectively" honest critique of their work using the chatbot.

Kay demonstrated this by calibrating ChatGPT using an acclaimed novel before feeding it the first page of her upcoming book. She then asked the bot to rank her writing based on six different factors, including "world-building." However, the critiques the chatbot provided were "utterly meaningless" and could have easily been written by a high school student.

It's worth noting that ChatGPT is not an objective tool, nor is it designed to be a critic. Rather, it's a large language model that generates text by predicting which words are most likely to come next. It's also inherently biased, since it's trained largely on sources from the internet, and any text fed into it may be retained, used for training, and potentially imitated.

Amazon has even expressed concerns that employees using ChatGPT were leaking confidential data. Data privacy risks aside, it's not advisable to rely on a tool that can make up facts to "objectively" critique your writing.

After receiving overwhelming backlash for her advice, Kay apologized, stating that she had been unaware of how extensively ChatGPT uses the data it is given.

In conclusion, while using technology to improve your writing is commendable, chatbots like ChatGPT are not the answer. As a writer, it's essential to get meaningful feedback from fellow writers and editors who understand the craft rather than relying on a biased algorithm.