In the past six to eight weeks, the investment logic of AI has ...

As large AI models shift from pre-training to inference, network architecture, chip cluster layout, and the logic underpinning a significant portion of the investment world all need to be rethought. Meanwhile, over the past six to eight weeks, as large models have hit a plateau, small teams have kept emerging that use open-source models to build new models on relatively little funding and rival cutting-edge models in performance. As part of this trend, GPU constraints have also eased.

Shift in Artificial Intelligence Investment Logic

A key shift is under way in artificial intelligence, from pre-training to inference, and it stands to disrupt the logic of AI investment. A recent episode of the well-known business podcast Invest Like The Best, with guests Chetan and Modest, covered the current challenges facing AI models, the rising popularity of open-source models, and the investment implications for primary and secondary markets.

Transition to Test-Time Compute

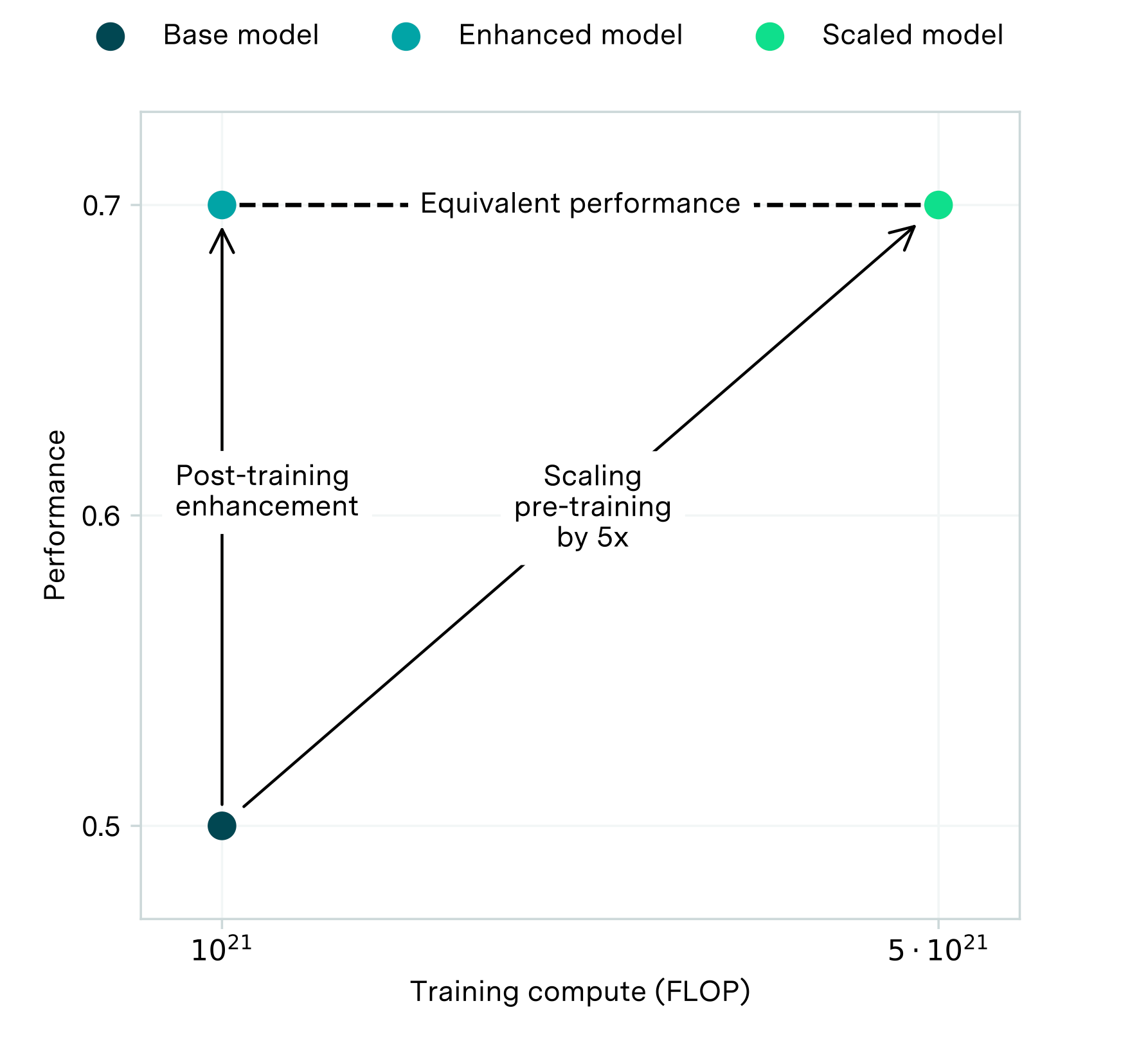

Because human-written text data is running out, large model training has turned to synthetic data generated by LLMs. That change has made pre-training harder to scale, pushing large models toward a new paradigm: a shift from pre-training to test-time compute. With test-time compute, a model explores several candidate solutions in parallel and iterates on them at inference time, which works around the limits encountered in pre-training.
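One simple way to picture test-time compute is best-of-N sampling: draw several candidate answers concurrently, score each one, and keep the best. The sketch below is a toy illustration under stated assumptions, not how any particular lab implements it. `propose_candidate` and `score` are hypothetical stand-ins for a model's sampled answer and a verifier, and the task (guessing the square root of 2) is chosen only because it is easy to check.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def propose_candidate(seed: int) -> float:
    """Hypothetical stand-in for one sampled model answer: a random guess at sqrt(2)."""
    rng = random.Random(seed)  # seeded so every run produces the same guesses
    return rng.uniform(0.0, 3.0)


def score(candidate: float) -> float:
    """Hypothetical verifier: higher is better, 0.0 would be a perfect answer."""
    return -abs(candidate * candidate - 2.0)


def best_of_n(n: int) -> float:
    """Sample n candidates concurrently and keep the one the verifier scores highest."""
    with ThreadPoolExecutor(max_workers=8) as pool:
        candidates = list(pool.map(propose_candidate, range(n)))
    return max(candidates, key=score)


if __name__ == "__main__":
    # Spending more compute at inference time (larger n) never makes the answer worse,
    # because each larger candidate set contains the smaller one.
    for n in (1, 4, 16, 64):
        answer = best_of_n(n)
        print(f"n={n:3d}  answer={answer:.4f}  error={abs(answer * answer - 2.0):.4f}")
```

The point of the sketch is the cost profile: quality improves by spending more compute per query at inference time rather than by training a larger model up front.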

Implications of the Shift for Venture Capital

Over the past six to eight weeks, this shift from pre-training to inference has significantly affected the venture capital landscape. Chetan stressed that the test-time paradigm brings challenges of its own, which will take better algorithms, data, and hardware to address.

Alignment of Revenue Generation and Expenditure

Modest highlighted how the shift toward inference-time compute can better align expenditure with revenue generation, since inference spending scales with actual usage rather than being committed up front. This benefits hyperscalers in both efficiency and financial scalability. The shift also calls for rethinking network architecture and chip cluster layout to optimize power utilization and grid design.

Emergence of Small Teams and Open-Source Models

Notably, small teams have emerged over the past six to eight weeks that build innovative models on minimal funding by leveraging open-source models such as Meta's LLaMA series. These teams have caught up with cutting-edge models quickly without extensive resources, which has made GPU availability less of a constraint.

Future of Artificial Intelligence

With AI continuing to advance and compute shifting toward inference time, the prospect of achieving artificial general intelligence by 2025 seems promising. Falling inference costs and rapid innovation in the field point to a potential paradigm shift in the investment world.