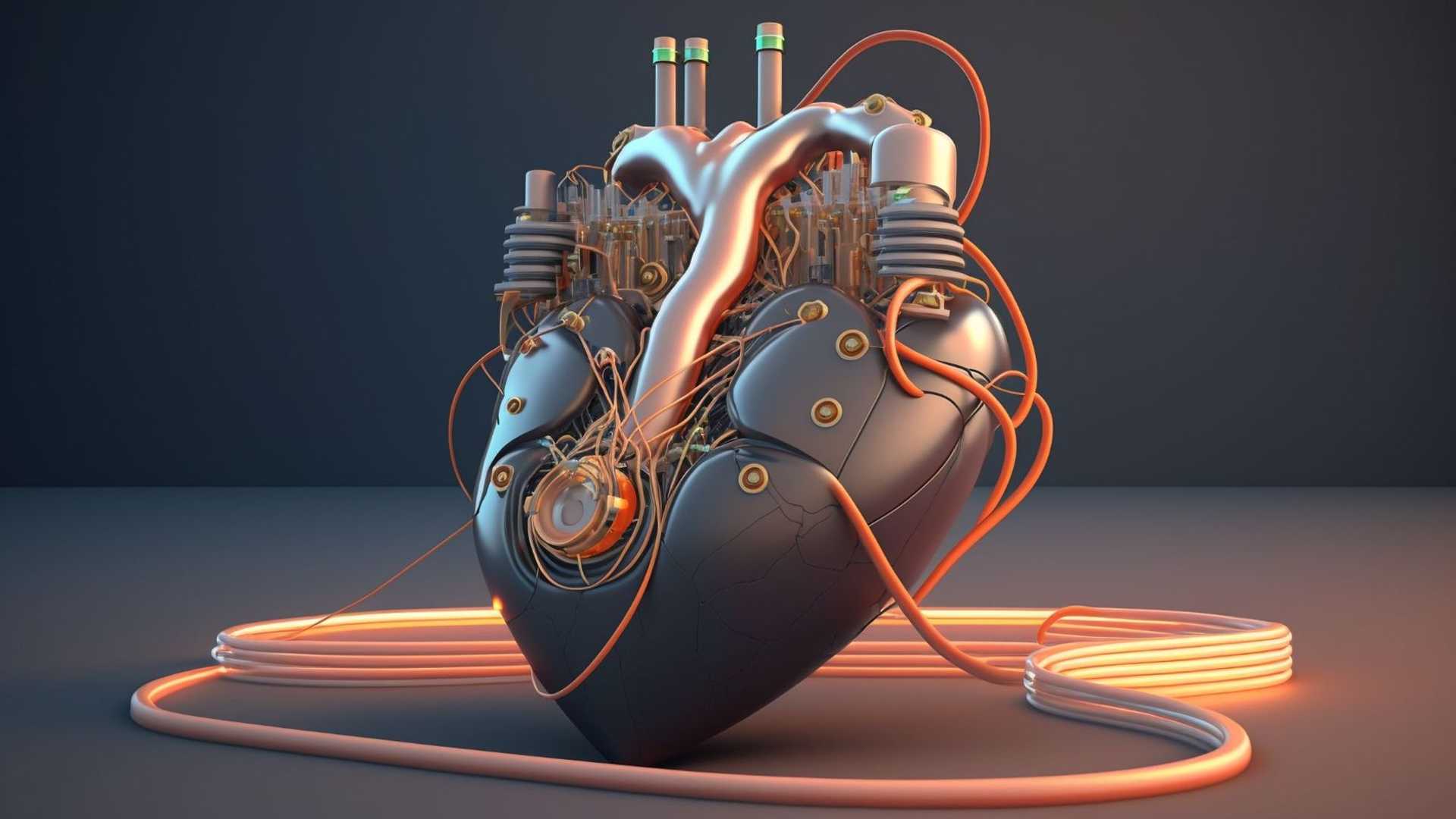

Large Language Models will become the heart of all software

Large Language Models (LLMs) such as those behind ChatGPT are starting to replace the logic sections of some software. In these applications the LLM is hidden from the user and interacts only with the application. This trend will affect all software in the future.

There has been a lot of news about autonomous agents recently. These programs ask the user for a goal and then prompt a Large Language Model to break that goal down into tasks. The program then works through the tasks one at a time, prompting the LLM either to complete the task or to break it down into smaller, more manageable tasks. These task-completion programs are called agents.
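The loop described above can be sketched in a few lines of Python. This is a minimal illustration, not the implementation of any particular agent project: the function `run_agent`, the `DONE:`/`SPLIT:` reply convention, and the stand-in `fake_llm` are all assumptions made for the example. A real program would pass in a function that calls an actual model.

```python
from collections import deque
from typing import Callable, List, Tuple


def run_agent(goal: str, llm: Callable[[str], str], max_steps: int = 10) -> List[Tuple[str, str]]:
    """Ask the LLM to plan a goal, then complete or further split each task."""
    plan = llm(f"Break this goal into a short list of tasks, one per line:\n{goal}")
    queue = deque(line.strip() for line in plan.splitlines() if line.strip())
    results: List[Tuple[str, str]] = []
    steps = 0
    # max_steps guards against an LLM that keeps splitting tasks forever.
    while queue and steps < max_steps:
        task = queue.popleft()
        steps += 1
        reply = llm(
            f"Goal: {goal}\nTask: {task}\n"
            "Either complete the task and answer 'DONE: <result>', "
            "or answer 'SPLIT:' followed by smaller tasks, one per line."
        )
        if reply.startswith("SPLIT:"):
            subtasks = [s.strip() for s in reply[len("SPLIT:"):].splitlines() if s.strip()]
            # Put the subtasks at the front of the queue, preserving their order.
            queue.extendleft(reversed(subtasks))
        else:
            result = reply[len("DONE:"):] if reply.startswith("DONE:") else reply
            results.append((task, result.strip()))
    return results


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model, with canned replies."""
    if prompt.startswith("Break this goal"):
        return "research topic\nwrite summary"
    if "Task: research topic" in prompt:
        return "SPLIT:\nfind sources\nread sources"
    task = prompt.split("Task: ")[1].splitlines()[0]
    return f"DONE: finished {task}"
```

Calling `run_agent("write a report", fake_llm)` works through the plan, splits "research topic" into two smaller tasks, and returns one result per completed task. Note that the program itself contains no planning logic; it only manages the queue.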

Generative Agents: Interactive Simulacra of Human Behavior

Generative agents are another example of how complex human-like behaviour can be simulated by using an LLM to plan tasks, form memories and conduct conversations. This is exceptional work that could have interesting implications for AI in games. In one example conversation, Mei immediately asks after her son because he is important to her, despite not having recent memories of him to prompt the conversation. This is possible because the events that happen to Mei are stored as plain-text descriptions in what is called the memory stream. Memories are prioritized by recency, importance, and relevance and passed directly to the LLM as part of the prompt, and the characters constantly reflect on their memories.
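To make the prioritization concrete, here is a simplified sketch of scoring memories by recency, importance, and relevance before placing them in a prompt. It is not the paper's implementation. The `Memory` fields, the decay rate, the equal weighting, and the example memories are assumptions for illustration, and relevance is approximated with plain word overlap rather than a learned similarity measure.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Memory:
    text: str          # plain-text description of an event
    hours_ago: float   # how long ago the event happened
    importance: float  # 0..1, assumed to be assigned elsewhere (e.g. by asking the LLM)


def relevance(query: str, text: str) -> float:
    """Crude stand-in for semantic similarity: word overlap (Jaccard)."""
    q, t = set(query.lower().split()), set(text.lower().split())
    return len(q & t) / len(q | t) if q | t else 0.0


def score(memory: Memory, query: str, decay: float = 0.99) -> float:
    """Sum of recency, importance, and relevance, weighted equally (an assumption)."""
    recency = decay ** memory.hours_ago  # older memories fade exponentially
    return recency + memory.importance + relevance(query, memory.text)


def retrieve(memories: List[Memory], query: str, k: int = 2) -> List[Memory]:
    """Return the k highest-scoring memories for the current situation."""
    return sorted(memories, key=lambda m: score(m, query), reverse=True)[:k]


def build_prompt(memories: List[Memory], query: str, k: int = 2) -> str:
    """Place the retrieved memories in the prompt as plain text."""
    lines = [f"- {m.text}" for m in retrieve(memories, query, k)]
    return "Relevant memories:\n" + "\n".join(lines) + f"\n\nSituation: {query}"


# Invented example data, not taken from the paper.
memories = [
    Memory("Mei's son is working on a project", hours_ago=48, importance=0.9),
    Memory("Mei ate breakfast", hours_ago=1, importance=0.1),
    Memory("Mei watered the plants", hours_ago=30, importance=0.1),
]
```

With this data, `retrieve(memories, "Mei meets her son", k=1)` returns the two-day-old memory about Mei's son rather than the recent but trivial breakfast, because its importance outweighs its age. That is the kind of effect the Mei example illustrates.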

While this paper is impressive work for AI in games, the broader picture is the use of an LLM as the computational heart of non-gaming applications. It's clear that we are seeing the start of a new class of software in which programs use LLMs to replace entire logic modules of code.

Proof of Concept Projects

Many projects have been circulating on AI Twitter recently and causing a stir. They are all proofs of concept that use LLMs like ChatGPT or Meta’s LLaMA to obviate the need to write planning code.

- Task-driven Autonomous Agent

- AutoGPT

- HyperWrite

These apps again use an LLM the way a human would, by asking it questions in natural language. In this way, the app acts as 'scaffolding' around the LLM, serving as a go-between for the user and the model.
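As a rough sketch of what that scaffolding looks like, the function below wraps a user request in a natural-language prompt, sends it to an LLM, and checks the reply before the application acts on it. The action names, the prompt wording, the fallback behaviour, and the `llm` callable are all hypothetical and not taken from any of the projects listed above.

```python
from typing import Callable

# Hypothetical set of things the application knows how to do.
ALLOWED_ACTIONS = {"search", "summarize", "translate"}


def choose_action(user_request: str, llm: Callable[[str], str]) -> str:
    """Ask the LLM which action fits the request, instead of hand-writing the rules."""
    prompt = (
        "You are the planning component of an application.\n"
        f"Available actions: {', '.join(sorted(ALLOWED_ACTIONS))}.\n"
        f"User request: {user_request}\n"
        "Reply with the single best action name and nothing else."
    )
    reply = llm(prompt).strip().lower()
    # The scaffold validates the reply; unknown answers fall back to a safe default.
    return reply if reply in ALLOWED_ACTIONS else "search"
```

The user never sees the prompt or the raw reply. The application handles both sides of the exchange, and the validation step matters because nothing forces the model to reply with one of the expected action names.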

Apple moved quickly to enable the previous release of Stable Diffusion to run efficiently on Apple’s M series of chips, and I think it is highly likely we will see LLMs pared down to run on an iPhone. This is important because right now it takes a few seconds to get an answer back from ChatGPT. When responses become near-instantaneous, that can enable voice-in-your-ear applications that advise, translate, and inform in real time.

In conclusion, LLMs are becoming the heart of all software. The projects mentioned here all use an LLM as the beating heart of the application, replacing entire logic modules of code and opening up exciting new possibilities for the future of software development.