A former OpenAI leader says safety has 'taken a backseat to shiny products'

Jan Leike, a former leader at OpenAI, recently resigned from the company and said that safety is being given too little priority relative to new, flashy AI products. Leike, who led OpenAI's "Superalignment" team, laid out his views on social media, citing disagreements with the company's leadership over its core priorities.

Focus on AI Research and Safety

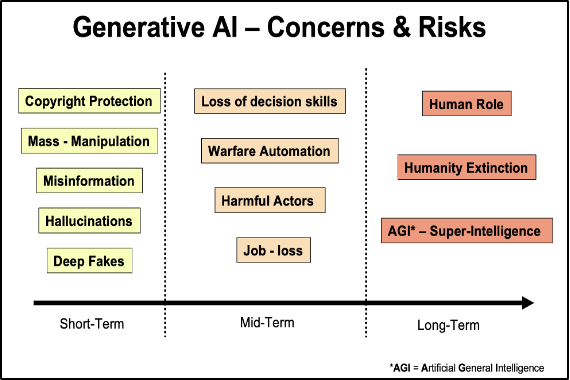

As an AI researcher, Leike said more attention should go to preparing for the next generation of AI models, including safety measures and critical analysis of the societal impacts such advances may bring. He believes that building machines that surpass human intelligence is an inherently risky endeavor and that OpenAI carries a significant responsibility on behalf of all humanity.

Leike argues that OpenAI should become a safety-first AGI (artificial general intelligence) company. He considers prioritizing safety essential to ensuring that the development of AI benefits everyone.

Changes at OpenAI

Following Leike's departure, OpenAI CEO Sam Altman expressed appreciation for Leike's contributions to the company and acknowledged that more work is needed. Altman said OpenAI is committed to making progress while maintaining a focus on safety. The company disbanded Leike's Superalignment team, which had been established to address AI risks, and integrated its members into other research departments.

Leike's resignation came shortly after OpenAI co-founder and chief scientist Ilya Sutskever announced his own departure after almost a decade at the company, signaling a period of change in OpenAI's leadership.

The Future of OpenAI

Jakub Pachocki has been appointed chief scientist, succeeding Sutskever. Altman expressed confidence in Pachocki's abilities and leadership, saying he expects him to drive rapid and safe progress toward OpenAI's mission of ensuring that AGI benefits society as a whole.

Collaboration and Innovation

OpenAI's recent advances, such as human-like verbal responses and mood detection in its AI models, demonstrate the company's commitment to innovation. The company also has a licensing and technology agreement with The Associated Press.

Taken together, the leadership changes at OpenAI and the concerns raised by former leaders like Leike underscore the need to prioritize safety as artificial intelligence advances.