Decoding Brain Signals into Text: The Breakthrough of Brain2Qwerty by Meta AI

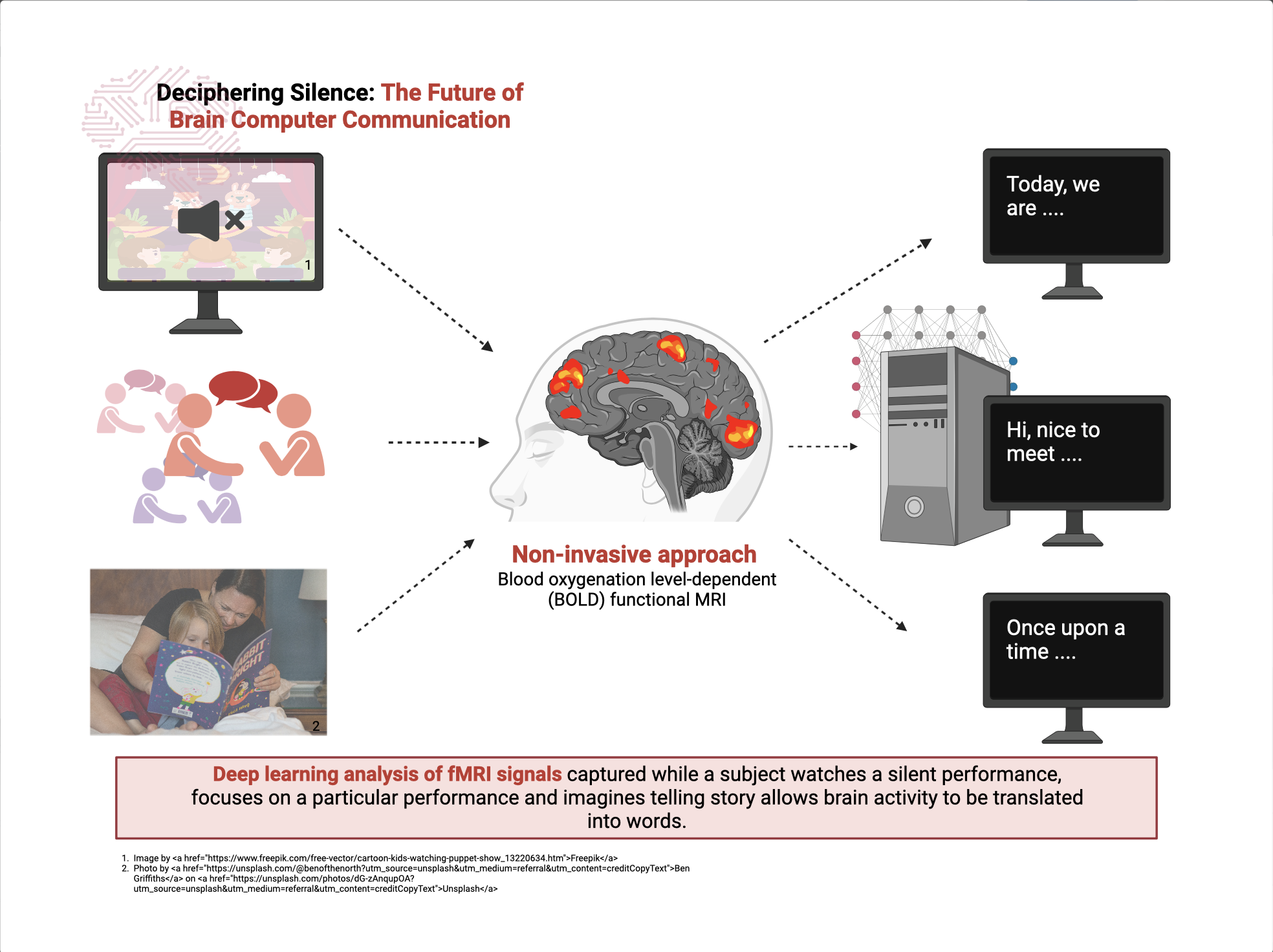

Meta AI has introduced Brain2Qwerty, a deep learning model that decodes brain activity into text from non-invasive recordings: electroencephalography (EEG) and magnetoencephalography (MEG). Traditional invasive brain-computer interfaces (BCIs) rely on surgically implanted electrodes, which carry medical risks. Brain2Qwerty records from outside the skull and therefore offers a safer alternative.

Innovative Approach to Brain-Computer Interaction

Many earlier BCIs require users to attend to external stimuli or to imagine movements. Brain2Qwerty instead draws on the natural motor processes involved in typing: study participants typed memorized sentences on a QWERTY keyboard while their brain activity was recorded, giving the model a more intuitive signal to interpret.
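The article does not describe how the recordings become training data. One natural setup for a typing paradigm is to cut a fixed window of signal around each keystroke and label it with the key that was pressed. The Python sketch that follows illustrates that idea under stated assumptions: the names `Epoch` and `epoch_by_keystrokes`, the window lengths, and the toy data are all hypothetical and are not taken from Meta's code.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class Epoch:
    """One labelled example: a brain-signal window and the key that was pressed.

    Hypothetical structure for illustration only.
    """
    key: str
    window: List[List[float]]  # shape: [channels][samples]


def epoch_by_keystrokes(
    recording: Sequence[Sequence[float]],     # [channels][samples]
    keystrokes: Sequence[Tuple[int, str]],    # (sample_index, key) pairs
    pre: int,                                 # samples kept before each keystroke (assumed)
    post: int,                                # samples kept after each keystroke (assumed)
) -> List[Epoch]:
    """Cut a window from `pre` samples before to `post` samples after each keystroke."""
    n_samples = len(recording[0])
    epochs = []
    for onset, key in keystrokes:
        start, stop = onset - pre, onset + post
        if start < 0 or stop > n_samples:
            continue  # window would run off the recording, so skip this keystroke
        window = [list(channel[start:stop]) for channel in recording]
        epochs.append(Epoch(key=key, window=window))
    return epochs


# Toy recording: 2 channels x 10 samples (values are just +/- the sample index).
recording = [[float(i) for i in range(10)], [float(-i) for i in range(10)]]
keystrokes = [(1, "h"), (4, "i"), (9, "!")]
epochs = epoch_by_keystrokes(recording, keystrokes, pre=1, post=2)
print([e.key for e in epochs])  # the "!" keystroke is too close to the end and is dropped
```

Keystrokes whose window would run past either end of the recording are dropped rather than zero-padded; padding would be an equally reasonable design choice.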

Model Architecture and Performance

The Brain2Qwerty model consists of three modules: a convolutional module that extracts features from short windows of EEG or MEG signal, a transformer module that integrates these features across the whole sentence, and a pretrained character-level language model that corrects the transformer's predictions. Combining the three stages improves the accuracy of translating brain activity into text. In evaluations, MEG-based decoding achieved an average Character Error Rate (CER) of 32%, and the best-performing participants reached a CER of 19%. EEG-based decoding yielded a much higher CER of 67%: EEG is more accessible, but MEG delivers clearly superior performance for this task.
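CER counts the character-level edits (substitutions, insertions, deletions) needed to turn the decoded text into the reference sentence, divided by the reference length, so a CER of 32% means roughly 32 character errors per 100 reference characters. The minimal Python sketch that follows computes it with a standard Levenshtein distance; the function names are illustrative, and the study's exact evaluation procedure (for example, any text normalization before scoring) may differ.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions and substitutions."""
    prev = list(range(len(b) + 1))  # distances from "" to each prefix of b
    for i, ca in enumerate(a, start=1):
        curr = [i]  # distance from a[:i] to ""
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # deletion
                curr[j - 1] + 1,           # insertion
                prev[j - 1] + (ca != cb),  # substitution (free if characters match)
            ))
        prev = curr
    return prev[-1]


def character_error_rate(reference: str, hypothesis: str) -> float:
    """CER = edit distance / number of characters in the reference."""
    if not reference:
        raise ValueError("reference must be non-empty")
    return levenshtein(reference, hypothesis) / len(reference)


# Two wrong characters out of a 10-character reference give a CER of 20%.
print(character_error_rate("brain text", "brsin test"))  # 0.2
```

Because the denominator is the reference length, CER can exceed 100% when the decoded text is much longer than the sentence that was actually typed.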

Implications and Future Directions

The development of Brain2Qwerty marks a significant advancement in non-invasive BCI technology, with potential applications for people with speech or motor impairments. Several challenges remain, however. The model decodes a sentence only after it has been typed rather than in real time; MEG requires costly, specialized equipment operated in magnetically shielded rooms, which is not widely available; and the study involved only healthy participants, so further research is needed to determine how well the model works for people with motor or speech disorders.

Meta AI's work on Brain2Qwerty shows how deep learning combined with advanced brain-recording techniques can produce practical tools for human-computer interaction and communication. Further research will be needed to overcome the remaining hurdles and broaden the reach of this technology.