Apple aims for on-device user intent understanding with UI-JEPA

Understanding user intentions based on user interface (UI) interactions is a critical challenge in creating intuitive and helpful AI applications. In a new paper, researchers from Apple introduce UI-JEPA, an architecture that significantly reduces the computational requirements of UI understanding while maintaining high performance. UI-JEPA aims to enable lightweight, on-device UI understanding, paving the way for more responsive and privacy-preserving AI assistant applications. This could fit into Apple’s broader strategy of enhancing its on-device AI.

The Innovation Behind UI-JEPA

Understanding user intents from UI interactions requires processing cross-modal features, including images and natural language, to capture the temporal relationships in UI sequences. While advancements in Multimodal Large Language Models (MLLMs) offer pathways for personalized planning, these models demand extensive computational resources and introduce high latency. Current lightweight models that can analyze user intent are still too computationally intensive to run efficiently on user devices.

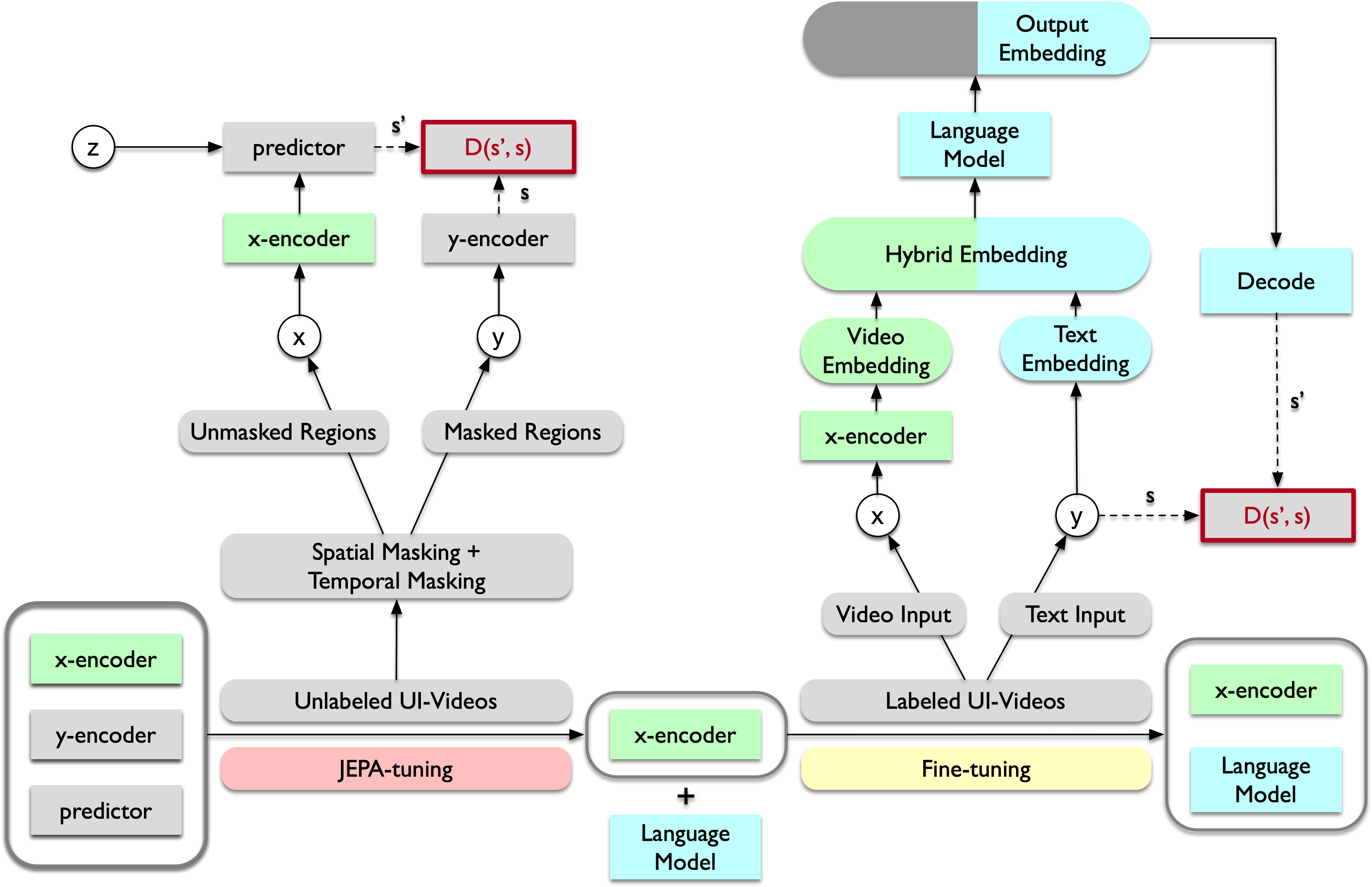

UI-JEPA draws inspiration from the Joint Embedding Predictive Architecture (JEPA), a self-supervised learning approach introduced by Meta AI Chief Scientist Yann LeCun in 2022. Rather than reconstructing the missing parts of an image or video pixel by pixel, JEPA learns to predict their representations in an abstract embedding space. This significantly reduces the dimensionality of the problem, allowing smaller models to learn rich representations. Because the approach is self-supervised, it can be trained on large amounts of unlabeled data, eliminating the need for costly manual annotation.
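To make the idea of predicting in embedding space more concrete, here is a minimal sketch of a JEPA-style training loss in plain Python. It is a toy illustration and not the code from the paper: the patch sizes, the linear "encoders", the mean pooling, and every function name are assumptions chosen for brevity. Real JEPA models use transformer encoders, and the target encoder is typically a slowly updated copy of the context encoder rather than an independent network.

```python
import random

PATCH_DIM = 48    # values per raw patch (hypothetical size)
EMBED_DIM = 8     # values per embedding (hypothetical size)
NUM_PATCHES = 6


def linear(weights, vec):
    """Apply a dense layer, stored as a list of rows, to a vector."""
    return [sum(w * x for w, x in zip(row, vec)) for row in weights]


def make_weights(rows, cols, rng):
    """Create a small random weight matrix."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


def mean_vector(vectors):
    """Average a list of equal-length vectors element by element."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def jepa_loss(patches, masked_idx, context_enc, target_enc, predictor):
    """Mean squared error between predicted and actual target embeddings."""
    masked = set(masked_idx)
    visible = [p for i, p in enumerate(patches) if i not in masked]
    # Encode only the visible patches and pool them into one context vector.
    context = mean_vector([linear(context_enc, p) for p in visible])
    total, count = 0.0, 0
    for i in masked_idx:
        # Embedding of the hidden patch, produced by the target encoder.
        target = linear(target_enc, patches[i])
        # Prediction from the context plus a crude position hint for patch i.
        pred = linear(predictor, context + [i / len(patches)])
        total += sum((a - b) ** 2 for a, b in zip(pred, target))
        count += len(target)
    return total / count


rng = random.Random(0)
patches = [[rng.random() for _ in range(PATCH_DIM)] for _ in range(NUM_PATCHES)]
context_enc = make_weights(EMBED_DIM, PATCH_DIM, rng)
target_enc = make_weights(EMBED_DIM, PATCH_DIM, rng)
predictor = make_weights(EMBED_DIM, EMBED_DIM + 1, rng)

loss = jepa_loss(patches, [1, 4], context_enc, target_enc, predictor)
print(f"embedding-space loss over {EMBED_DIM}-dim targets: {loss:.6f}")
```

The point of the sketch is the shape of the objective: the error is measured over 8 embedding values per hidden patch instead of 48 raw values, so the model is never asked to reproduce every pixel-level detail.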

Advantages of UI-JEPA

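UI-JEPA builds on the strengths of JEPA and adapts it to UI understanding. The framework consists of two components: a video transformer encoder and a decoder-only language model (LM). The JEPA-based encoder turns videos of UI interactions into abstract feature representations, and the LM takes those video embeddings and generates a text description of the user's intent. By combining a JEPA-based encoder with a lightweight LM, UI-JEPA achieves high performance with significantly fewer parameters and computational resources than state-of-the-art MLLMs.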
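The two-component design can be sketched as a simple data flow. The snippet below is a hypothetical outline, not Apple's implementation: the class name, the stub functions, the projection step that maps video embeddings to the LM's input width, and the idea of placing video tokens ahead of a text prompt are all assumptions borrowed from how multimodal models are commonly wired together. The stubs stand in for the real video transformer and language model.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence

Vector = List[float]
LM_WIDTH = 4  # hypothetical input width of the language model


@dataclass
class IntentPipeline:
    """Hypothetical wiring of a video encoder and a decoder-only LM."""
    encode_video: Callable[[Sequence[Sequence[float]]], List[Vector]]
    project: Callable[[Vector], Vector]
    embed_text: Callable[[str], List[Vector]]
    generate: Callable[[List[Vector]], str]

    def predict_intent(self, frames: Sequence[Sequence[float]], prompt: str) -> str:
        # 1. Encode the screen recording into one embedding per frame.
        video_embeddings = self.encode_video(frames)
        # 2. Map each embedding to the width the LM expects (assumed step).
        video_tokens = [self.project(v) for v in video_embeddings]
        # 3. Place the video tokens ahead of the embedded text prompt.
        text_tokens = self.embed_text(prompt)
        # 4. Let the LM produce a text description of the intent.
        return self.generate(video_tokens + text_tokens)


def stub_encode_video(frames):
    # Stand-in encoder: a 2-dim embedding (mean, max) per frame.
    return [[sum(f) / len(f), max(f)] for f in frames]


def stub_project(vec):
    # Stand-in projection: zero-pad up to the LM input width.
    return vec + [0.0] * (LM_WIDTH - len(vec))


def stub_embed_text(prompt):
    # Stand-in text embedding: one vector per whitespace-separated word.
    return [[float(len(word))] * LM_WIDTH for word in prompt.split()]


def stub_generate(tokens):
    # Stand-in LM: reports how many input tokens it was conditioned on.
    return f"<intent conditioned on {len(tokens)} input tokens>"


pipeline = IntentPipeline(stub_encode_video, stub_project, stub_embed_text, stub_generate)
frames = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6], [0.7, 0.8, 0.9]]
result = pipeline.predict_intent(frames, "Describe the user's intent:")
print(result)
```

Keeping the encoder and the LM behind separate callables mirrors the split described above: the heavy visual processing happens once per recording, and only a short sequence of embeddings reaches the language model.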

To further advance research in UI understanding, the researchers introduced two new multimodal datasets and benchmarks: “Intent in the Wild” (IIW) and “Intent in the Tame” (IIT). These datasets aim to contribute to the development of more powerful and lightweight MLLMs.

Potential Applications of UI-JEPA

Researchers envision several potential uses for UI-JEPA models. One key application is creating automated feedback loops for AI agents, enabling them to learn continuously from interactions without human intervention. UI-JEPA could also be integrated into agentic frameworks to track user intent across different applications and modalities.

UI-JEPA seems to be a good fit for Apple Intelligence, a suite of lightweight generative AI tools that aim to make Apple devices smarter and more productive. Given Apple’s focus on privacy, the low cost and added efficiency of UI-JEPA models could give its AI assistants an advantage over others that rely on cloud-based models.