Legal Challenge Launched Against Meta for AI Training with User Data

Privacy organization Noyb has taken legal action against Meta, the tech giant behind Facebook, Instagram, and Threads, for failing to obtain users' consent before using their posts to train artificial intelligence models.

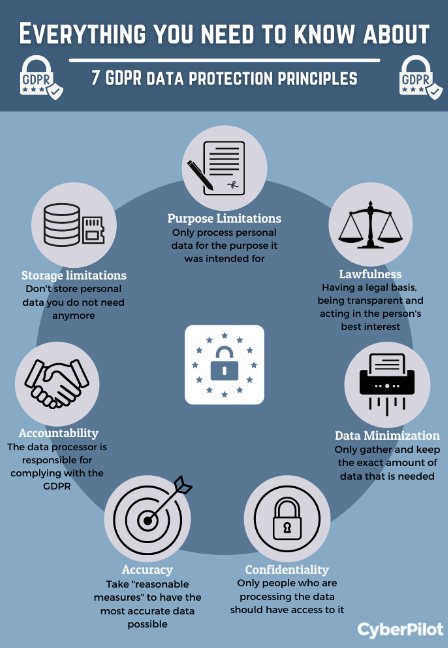

The group filed complaints in eleven European countries, urging data protection authorities to act immediately to stop this data use, which it argues violates the EU's General Data Protection Regulation (GDPR).

Controversy Over Consent

Noyb argues that Meta should ask users for opt-in consent rather than merely offering an opt-out. In its view, Meta's practices do not meet GDPR requirements, especially regarding the use of posts, photos, captions, and messages for AI training.

Although private chat messages are currently excluded from AI training, concerns remain about how much data Meta is using without users' explicit consent.

Importance of User Consent in Data Usage

Using personal data ethically for AI training is essential to complying with data protection laws such as the GDPR. Consent is central: where processing relies on it, consent must be freely given, specific, informed, and unambiguous.

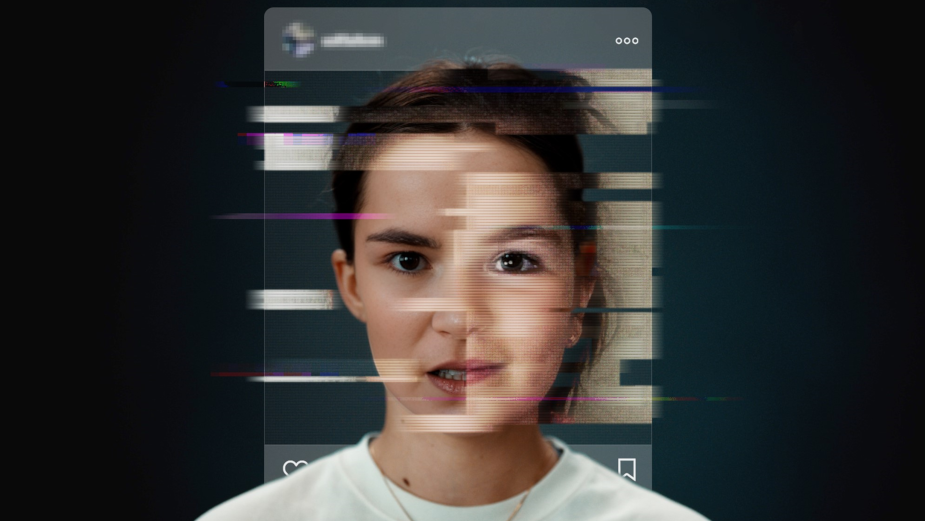

Risks and Potential for Misuse of Data

Critics also worry that, without adequate safeguards, data collected for AI training could be misused and sensitive information exploited. This raises fears of deepfakes and other malicious uses of people's data without their consent.

Advantages and Disadvantages

Training AI on user data can enhance the user experience, improve platform features, and drive technological innovation. However, if not handled properly, it also poses risks such as privacy breaches, misuse of personal data, and erosion of user trust.

For more information on data protection and privacy, visit the Noyb website and the GDPR portal.