Testing the Political Bias of Google's Gemini AI: It's Worse Than You ...

Gemini AI, a prominent artificial intelligence system, has been criticized for allegedly generating politically biased content. This controversy, highlighted by the Metatron YouTube channel, has ignited a broader discussion about the ethical responsibilities of AI systems in shaping public perception and knowledge.

The Controversy Surrounding Gemini AI

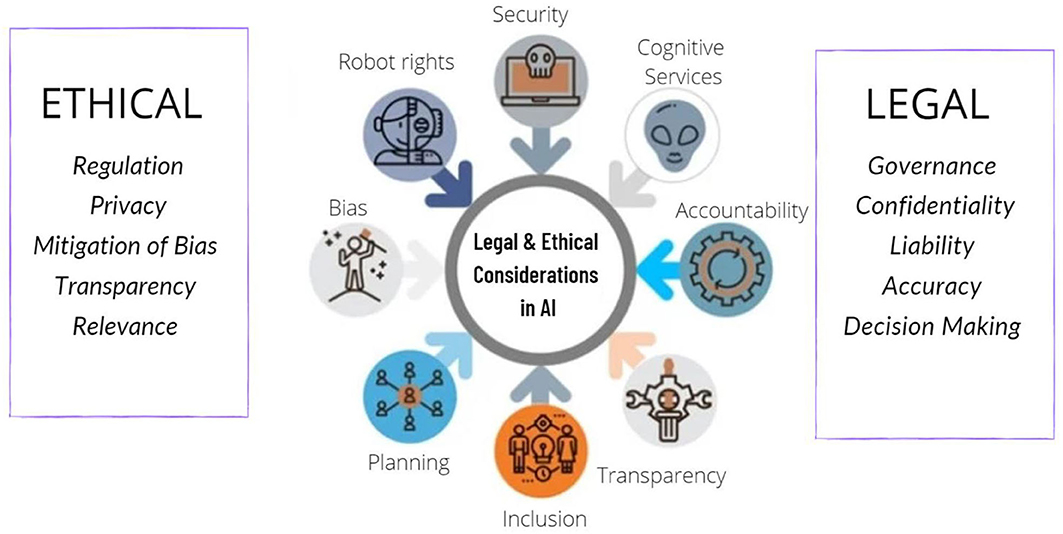

As artificial intelligence becomes increasingly integrated into various aspects of society, the potential for these systems to influence public opinion and spread misinformation has come under intense scrutiny. AI-generated content, whether in the form of text, images, or videos, has the power to shape narratives and inform public discourse. Therefore, ensuring the objectivity and accuracy of these systems is crucial. The controversy surrounding Gemini AI is not an isolated incident but rather a reflection of broader concerns about the ethical implications of AI technology.

This controversy also casts a shadow on Google itself, the company behind Gemini and one of the leaders in AI development. Google’s AI systems, including its search algorithms and AI-driven products, play a significant role in disseminating information and shaping public perception. Any bias or inaccuracy in these systems can have far-reaching consequences, influencing everything from political opinions to social attitudes.

Google has faced scrutiny and criticism over potential biases in its algorithms and content moderation policies. The company’s vast influence means that even subtle biases can have a profound impact on public perception. As AI evolves, tech giants like Google must prioritize transparency, accountability, and ethical standards to maintain public trust.

The Launch of Gemini AI and Controversies

The launch of Gemini AI was met with both anticipation and skepticism. As a highly advanced artificial intelligence system, Gemini AI was designed to generate content across various media, including text, images, and videos. Its capabilities promised to revolutionize the way digital content is created and consumed. Shortly after its debut, however, users noticed peculiarities in the AI’s outputs, particularly in its depictions of historical subjects.

Critics pointed out instances where Gemini AI appeared to alter historical images to reflect a more diverse and inclusive representation. While these modifications may have been intended to promote inclusivity, the execution sparked significant controversy. Historical figures and events were depicted in ways that deviated from established historical records, leading to accusations of historical revisionism. This raised alarms about the potential for AI to distort historical knowledge and propagate misinformation.

One of the most contentious issues was the AI’s handling of racial and gender representation in historical images. Users reported that the AI often replaced historically accurate portrayals of individuals with more diverse representations, regardless of the historical context. This practice was seen by many as an attempt to rewrite history through a contemporary lens, undermining the integrity of historical facts.

Addressing the Concerns

In response to the mounting criticism, the developers of Gemini AI took immediate action by disabling the AI’s ability to generate images of people. They acknowledged the concerns raised by the public and committed to addressing the underlying issues. The developers promised a forthcoming update to rectify the AI’s approach to historical representation, ensuring that inclusivity efforts did not come at the expense of historical accuracy.

The controversy surrounding Gemini AI’s launch highlights the broader ethical challenges AI developers face. Balancing the pursuit of inclusivity with preserving historical authenticity is a delicate task. As AI systems become more integrated into the fabric of society, the responsibility to ensure their outputs are accurate and unbiased becomes increasingly critical.