Expert reveals the phones AI fans need to push Gemini & ChatGPT

One of the most noticeable trends within the smartphone industry over the past couple of years has been the incessant talk about AI experiences. Chipmakers, in particular, have often touted how their latest mobile processors would enable on-device AI processes such as video generation.

Amid all the hype around hit-and-miss AI tricks for smartphone users, the debate rarely went beyond the glitzy presentations about new processors and ever-evolving chatbots. It was only when Gemini Nano’s absence on the Google Pixel 8 raised eyebrows that most people learned how critical RAM capacity is for AI on mobile devices. Apple also made it clear that it was keeping Apple Intelligence locked to devices with at least 8GB of RAM.

The Role of RAM and Storage for AI Processes on Smartphones

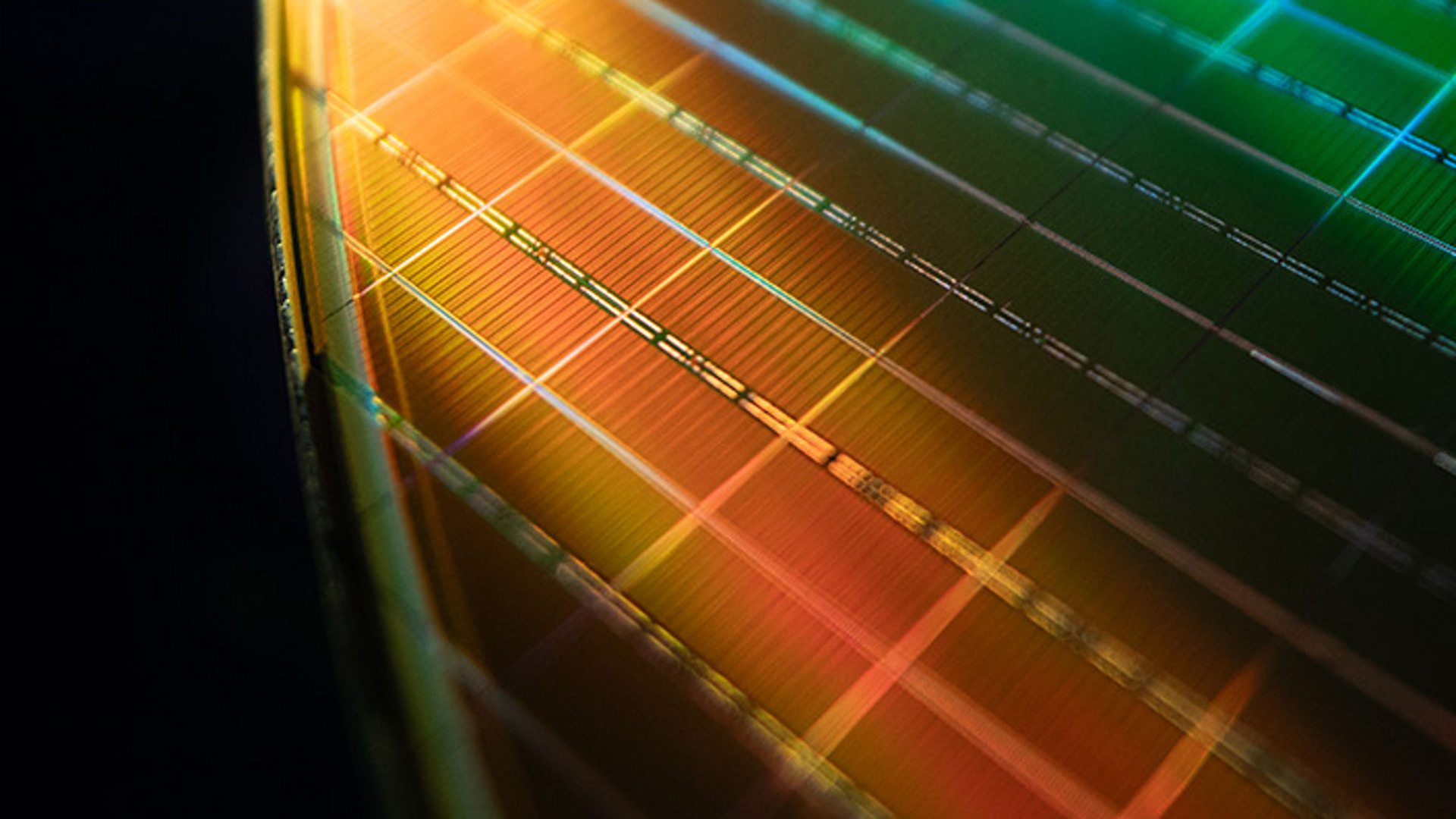

Digital Trends sat down with Micron, a global leader in memory and storage solutions, to break down the role of RAM and storage for AI processes on smartphones. The advancements made by Micron should be on your radar the next time you go shopping for a top-tier phone. The latest from the Idaho-based company includes G9 NAND mobile UFS 4.1 storage and 1γ (1-gamma) LPDDR5X RAM modules for flagship smartphones.

Let’s start with the G9 NAND UFS 4.1 storage solution. The overarching promise is frugal power consumption, lower latency, and high bandwidth. Another crucial benefit is that Micron’s next-gen mobile storage modules go all the way up to 2TB capacity. Moreover, Micron has managed to shrink their size, making them an ideal solution for foldable phones and next-gen slim phones such as the Samsung Galaxy S25 Edge.

Moving on to RAM, Micron has developed what it calls 1γ LPDDR5X RAM modules. They deliver a peak speed of 9200 MT/s, pack 30% more transistors thanks to the size reduction, and consume 20% less power.
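To put the 9200 MT/s figure in perspective, here is a rough back-of-the-envelope sketch in Python that converts a transfer rate into theoretical peak bandwidth. The 64-bit bus width is an assumption chosen for illustration, not a figure from Micron, and the function name is hypothetical. Real-world throughput will be lower than this theoretical ceiling.

```python
def peak_bandwidth_gb_s(transfer_rate_mt_s: float, bus_width_bits: int) -> float:
    """Theoretical peak bandwidth in GB/s (1 GB = 1e9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8           # bits moved per transfer, in bytes
    transfers_per_second = transfer_rate_mt_s * 1e6   # MT/s -> transfers per second
    return transfers_per_second * bytes_per_transfer / 1e9


# ASSUMPTION: a 64-bit total memory bus; actual phone designs may differ.
ASSUMED_BUS_WIDTH_BITS = 64

print(f"{peak_bandwidth_gb_s(9200, ASSUMED_BUS_WIDTH_BITS):.1f} GB/s")
```

Under that assumption, the memory could move about 73.6 GB of data per second at most, which helps explain why faster RAM matters when an AI model's weights have to be read over and over.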

Enhancements for Faster AI Operations on Mobile Devices

Micron has made four crucial enhancements to its latest storage solutions to ensure faster AI operations on mobile devices: Zoned UFS, Data Defragmentation, Pinned WriteBooster, and Intelligent Latency Tracker.

Every AI model that performs on-device tasks needs its own set of instruction files stored locally on the device. For a task to run efficiently, data exchange needs to be fast enough that AI tasks execute without slowing down other important tasks.

Thanks to Pinned WriteBooster, this data exchange is sped up by 30%, so AI tasks are handled without delays. Data Defragmentation, on the other hand, improves data read speed by an impressive 60%, which naturally speeds up all kinds of user-machine interactions, including AI workflows.

Intelligent Latency Tracker and Zoned UFS are additional features that optimize the phone’s performance so that regular tasks, as well as AI tasks, don’t run into speed bumps.

The Future of AI on Smartphones

When it comes to AI workflows, a minimum amount of RAM is essential, as the 8GB floor for Apple Intelligence shows. With its next-gen 1γ LPDDR5X RAM solution for smartphones, Micron has managed to reduce the operating voltage of the memory modules while ensuring top-notch AI performance. Micron’s next-gen RAM and storage solutions for smartphones are aimed not just at improving AI performance but also at speeding up day-to-day smartphone chores.
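Why does RAM capacity matter so much? A rough Python sketch makes the point: an on-device model's weights must fit in memory alongside the operating system and other apps. The 3-billion-parameter model size and the precision levels below are hypothetical values picked for illustration, not figures from Micron, Google, or Apple, and the estimate ignores extra working memory a model needs while running.

```python
def model_footprint_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory needed for model weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# ASSUMPTION: a hypothetical 3-billion-parameter on-device model.
HYPOTHETICAL_PARAMS = 3e9

for bits in (16, 8, 4):
    size_gb = model_footprint_gb(HYPOTHETICAL_PARAMS, bits)
    print(f"{bits}-bit weights: {size_gb:.1f} GB")
```

Even with aggressive compression to 4-bit weights, such a model would take up roughly 1.5GB before the phone does anything else, which is why RAM headroom quickly becomes the limiting factor.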

Smartphone makers, as well as AI labs, are increasingly shifting towards local AI processing. This approach ensures faster and more efficient processing without compromising on personal data security. Micron’s next-gen solutions can help with local AI processing and also speed up processes that require a cloud connection.

According to Micron, flagship models launching in late 2025 or early 2026 are expected to ship with its latest solutions. The future of AI on smartphones is exciting, and with advancements in memory and storage, users can expect better AI experiences on their devices.