The Economics of ChatGPT: Can Large Language Models Turn a ...

On Wednesday, June 5, something amazing happened on the U.S. stock market. Nvidia (NASDAQ: NVDA) became the first computer "hardware" stock to reach a $3 trillion valuation, largely on the success of its semiconductor chips for artificial intelligence functions. Previously, only computer software makers such as Apple and Microsoft, who ordinarily earn higher profit margins than hardware makers, had hit this mark. But thanks to the astounding profit margins Nvidia has been able to earn on its AI chips, it has joined the $3 trillion club as well.

A Cautionary Note

But not all the news is good. Sounding a cautionary note last week, ARK Investment head Cathie Wood warned investors that for Nvidia to deserve its rich valuation, "AI now has to play out elsewhere" and prove its value both to the companies that are developing artificial general intelligence and to the customers buying their services. Failing this, demand for AI chips will wither, and with it Nvidia's valuation.

The Role of Large Language Models

So what are the chances that software companies such as OpenAI, Microsoft, and Alphabet will make money on AI? Will payments from companies such as Apple, which is promising to include AI from OpenAI and Google on its iPhones, be enough to turn AI companies profitable?

As luck would have it, I recently had the opportunity to explore this question. I write a lot about defense stocks and I post summaries of defense contract awards on Twitter for the benefit of other investors. Of course, doing this manually is time-consuming. This led me to consider using a program like ChatGPT to automate this work.

Cost Analysis of ChatGPT

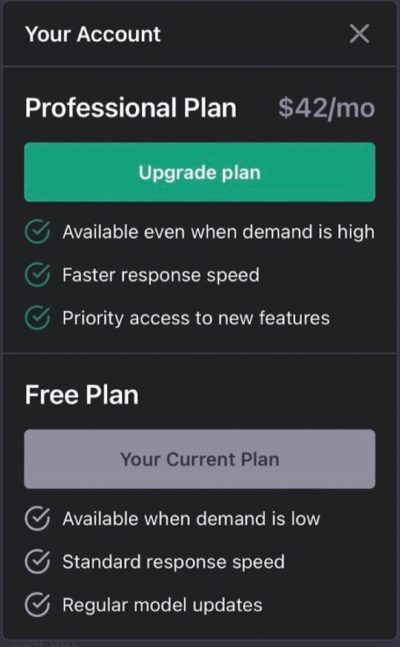

Looking into this, I found that ChatGPT's pricing is based on "tokens," which are described as "pieces of words," and that these tokens are sold to users in batches of 1 million. OpenAI, the company behind ChatGPT, charges between $0.02 and $15 per million tokens, depending on the specific large language model required. I opted for a model called GPT-3.5 Turbo, which costs $1.50 per million tokens.

At $1.50 per million tokens, the price seemed reasonable: 1 million tokens would generate approximately 750,000 words of text, and automating the summaries would save a significant amount of time over a year.
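To make the arithmetic concrete, here is a minimal sketch of the token math. The ratio of 0.75 words per token is an assumption implied by the figures above (1 million tokens for roughly 750,000 words), not an exact conversion, and the function names are purely illustrative.

```python
# Price quoted in the article for GPT-3.5 Turbo, in USD per 1 million tokens.
PRICE_PER_MILLION_TOKENS = 1.50

# Assumption: about 0.75 words per token, implied by
# "1 million tokens is approximately 750,000 words".
WORDS_PER_TOKEN = 0.75


def words_for_tokens(tokens: float) -> float:
    """Estimate how many words a given number of tokens yields."""
    return tokens * WORDS_PER_TOKEN


def cost_for_words(words: float) -> float:
    """Estimate the USD cost of generating a given number of words."""
    tokens = words / WORDS_PER_TOKEN
    return tokens / 1_000_000 * PRICE_PER_MILLION_TOKENS


print(words_for_tokens(1_000_000))  # words in one 1-million-token batch
print(cost_for_words(750_000))      # USD cost of roughly 750,000 words
```

Under these assumptions, the price works out to about two ten-thousandths of a cent per word.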

Energy Costs and Profitability

However, that low price raises the question of whether OpenAI and similar companies can actually turn a profit, especially given concerns about AI's energy consumption and rising electricity prices. To dig deeper, I asked ChatGPT about the electricity cost of answering a question.

ChatGPT's answer was framed in tokens: it said that answering a question consumed about 0.052% of a 1-million-token batch (roughly 520 tokens), which at $1.50 per million tokens costs the user about $0.00078. On the other hand, OpenAI's CEO has suggested that the company's own costs could be higher, possibly in single-digit cents per query.
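The gap between those two figures can be sketched as follows. This assumes the 0.052% figure refers to a share of a 1-million-token batch (which is what makes the $0.00078 figure work out at $1.50 per million tokens) and treats "single-digit cents" as a range of $0.01 to $0.09 per query; the function names are hypothetical.

```python
PRICE_PER_MILLION_TOKENS = 1.50  # USD, GPT-3.5 Turbo price quoted in the article
BATCH_SIZE = 1_000_000           # tokens per pricing batch


def tokens_per_query(percent_of_batch: float) -> int:
    """Convert a percentage of a 1-million-token batch into a token count."""
    return round(BATCH_SIZE * percent_of_batch / 100)


def user_price(tokens: int) -> float:
    """USD price the user pays for a given number of tokens."""
    return tokens * PRICE_PER_MILLION_TOKENS / BATCH_SIZE


def cost_to_price_ratio(provider_cost: float, tokens: int) -> float:
    """How many times larger the provider's cost is than the user's price."""
    return provider_cost / user_price(tokens)


tokens = tokens_per_query(0.052)          # share quoted by ChatGPT
print(tokens, user_price(tokens))

# Assumption: "single-digit cents" means between $0.01 and $0.09 per query.
for provider_cost in (0.01, 0.09):
    print(provider_cost, round(cost_to_price_ratio(provider_cost, tokens), 1))
```

If both figures are roughly right, the cost to OpenAI of answering a query would be somewhere between about 13 and 115 times what the user pays for it, which is why the profitability question matters.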

Despite these costs, economies of scale and competition among AI chip providers like Nvidia, Intel, and Advanced Micro Devices could lower the cost of answering each query and so improve profitability for companies offering AI services. Over time, these companies may be able to capitalize on the AI revolution.

Before investing in Nvidia, consider seeking advice from financial analysts to explore other potential investment opportunities in the market.

Disclaimer: Suzanne Frey, an executive at Alphabet, is a member of The Motley Fool’s board of directors. The Motley Fool has positions in and recommends Advanced Micro Devices, Alphabet, Apple, Microsoft, and Nvidia. The Motley Fool recommends Intel and various options related to these companies.