AI regulators turn to old laws to tackle generative tools like ChatGPT

LONDON/STOCKHOLM - As the race to develop more powerful artificial intelligence services like ChatGPT accelerates, some regulators are relying on old laws to control a technology that could upend the way societies and businesses operate.

The European Union Leading the Way

The European Union is at the forefront of drafting new AI rules that could set the global benchmark to address privacy and safety concerns that have arisen with the rapid advances in the generative AI technology behind OpenAI's ChatGPT. However, it will take several years for the legislation to be enforced.

"In absence of regulations, the only thing governments can do is to apply existing rules," said Massimiliano Cimnaghi, a European data governance expert at consultancy BIP. "If it's about protecting personal data, they apply data protection laws, if it's a threat to safety of people, there are regulations that have not been specifically defined for AI, but they are still applicable."

Enforcing Existing Rules

In April, Europe's national privacy watchdogs set up a task force to address issues with ChatGPT after Italian regulator Garante had the service taken offline, accusing OpenAI of violating the EU's GDPR, a wide-ranging privacy regime enacted in 2018. ChatGPT was reinstated after the U.S. company agreed to install age verification features and let European users block their information from being used to train the AI model.

The agency will begin examining other generative AI tools more broadly, a source close to Garante told Reuters. Data protection authorities in France and Spain also launched probes in April into OpenAI's compliance with privacy laws.

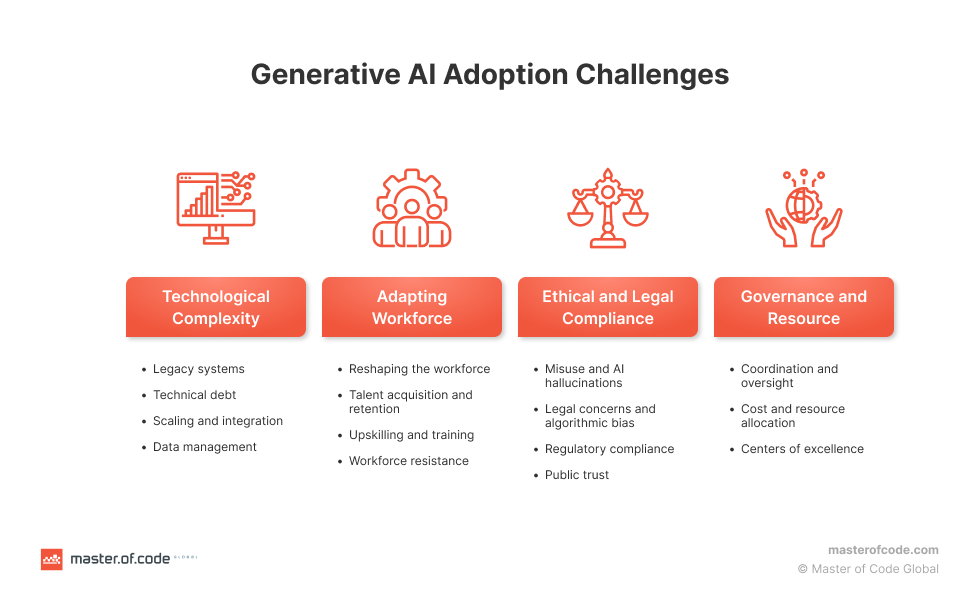

Challenges and Solutions

Generative AI models have become well known for making mistakes, or "hallucinations", spewing misinformation with uncanny certainty. Regulators aim to apply existing rules, covering everything from copyright to data privacy, to two key issues: the data fed into models and the content they produce.

Agencies in the US and Europe are being encouraged to "interpret and reinterpret their mandates," according to experts. In the EU, proposals for the bloc's AI Act would force companies like OpenAI to disclose any copyrighted material used to train their models, leaving them vulnerable to legal challenges.

Adapting to Technological Advances

While regulators adapt to the pace of technological advances, some industry insiders have called for greater engagement with corporate leaders. Harry Borovick, general counsel at Luminance, a startup which uses AI to process legal documents, emphasized the importance of dialogue between regulators and companies.

As AI regulation continues to evolve, regulators worldwide are left enforcing laws designed for a different technological era against the challenges posed by cutting-edge AI tools like ChatGPT.