Deploying LLMs on Raspberry Pi Powered HMI: Talk with Intelligent ...

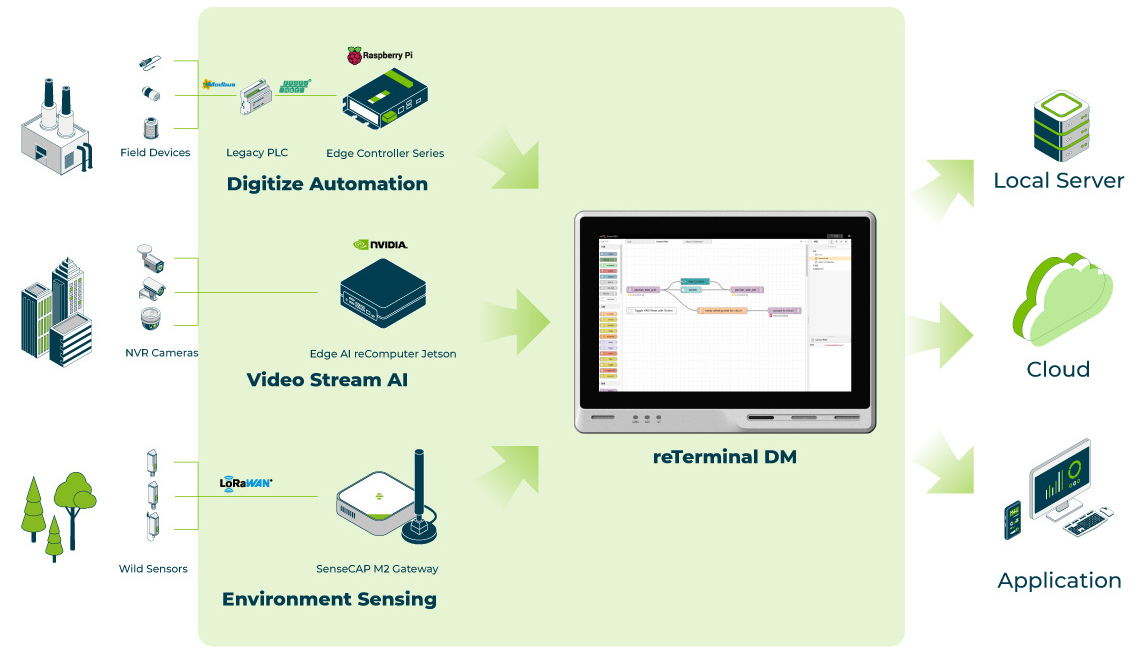

In the modern era of technological advancement, both smart factories and smart buildings represent the forefront of innovation in their respective domains. A smart facility, encompassing both manufacturing and structural management, leverages a blend of advanced technologies to optimize operations, enhance efficiency, and ensure seamless communication between systems and humans. By integrating the Internet of Things (IoT), artificial intelligence (AI), machine learning (ML), and big data analytics, these facilities create an interconnected environment that not only improves production processes and building operations but also ensures flexibility and sustainability.

The advent of Large Language Models (LLMs) has opened new frontiers in artificial intelligence, with models now comprising billions of parameters and continuously evolving to meet diverse applications. While cloud-based AI services have become prevalent, deploying LLMs locally on personal or enterprise hardware offers significant advantages, particularly regarding privacy and data control. This approach enables users and organizations to process sensitive information and perform AI-driven analyses without relying on external servers, thereby keeping proprietary or confidential data securely within their own computing environments. Local deployment addresses many of the privacy concerns associated with cloud-based services, where data may transit through or be stored on third-party servers.

Small Quantized Language Models on Raspberry Pi

With the 8 GB of RAM available in the reTerminal DM, deploying small quantized language models becomes feasible within this HMI environment, adding localized AI-driven functionality to the Raspberry Pi-powered HMI. Each model has its own trade-offs in size, memory requirements, and response quality, so the selection should be based on the specific application needs and performance criteria.

For tasks requiring high quality and flexibility, LLaMA 2 7B Chat is suitable. Phi-2, a much smaller model, is preferable when faster response times or a compact, efficient edge deployment matter more.
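To make the memory trade-off concrete, here is a rough back-of-the-envelope sketch rather than a measurement. It estimates the weight footprint as parameters × bits per weight ÷ 8. The nominal parameter counts, the 4.5 bits per weight figure, the 2 GiB reserved for the OS and other services, and the 1 GiB runtime overhead are all illustrative assumptions; real usage depends on the quantization format, context length, and runtime.

```python
GIB = 2**30  # bytes per GiB

# Nominal parameter counts (assumption: rounded figures, not exact counts).
MODELS = {"LLaMA 2 7B Chat": 7e9, "Phi-2": 2.7e9}


def weight_memory_gib(n_params: float, bits_per_weight: float) -> float:
    """Approximate memory for the model weights alone, in GiB."""
    return n_params * bits_per_weight / 8 / GIB


def fits_in_ram(
    n_params: float,
    bits_per_weight: float,
    ram_gib: float = 8.0,               # reTerminal DM RAM, treated as ~8 GiB
    reserved_gib: float = 2.0,          # assumption: OS and other services
    runtime_overhead_gib: float = 1.0,  # assumption: KV cache, buffers
) -> bool:
    """Return True if weights plus overhead fit in the remaining RAM."""
    needed = weight_memory_gib(n_params, bits_per_weight) + runtime_overhead_gib
    return needed <= ram_gib - reserved_gib


if __name__ == "__main__":
    for name, n_params in MODELS.items():
        size = weight_memory_gib(n_params, 4.5)
        verdict = "fits" if fits_in_ram(n_params, 4.5) else "does not fit"
        print(f"{name}: ~{size:.2f} GiB of weights at 4.5 bits/weight, {verdict}")
```

Under these assumptions a 4-bit-class 7B model fits with some headroom, while the same model at 16 bits per weight would not, which is why quantization matters on this hardware.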

Integration with Cloud-based LLM Services

If your system has internet access, you can interact with various LLM services using API keys. Some services offer free access tiers, such as Google Gemini, and hosted versions of Meta AI’s LLaMA 3 are available from several providers. Creating a Gemini API key gives you access to the Gemini API; LLaMA 3 requires separate credentials from whichever provider hosts it. Integrating these cloud-based LLM services can enhance the capabilities of your system, providing rapid and sophisticated data analysis and decision support.
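As an illustration of what calling a cloud LLM with an API key can look like, the sketch below builds a request for the Gemini REST API using only the Python standard library. The endpoint path, the `x-goog-api-key` header, and the response layout follow Google's public documentation as best understood here, and the model name `gemini-1.5-flash` is a placeholder assumption; check the current API reference before relying on any of them.

```python
import json
import urllib.request

# Assumption: endpoint shape taken from the public Gemini REST documentation.
GEMINI_URL = (
    "https://generativelanguage.googleapis.com/v1beta/models/{model}:generateContent"
)


def build_gemini_request(
    prompt: str, api_key: str, model: str = "gemini-1.5-flash"  # placeholder model
) -> urllib.request.Request:
    """Build (but do not send) a POST request carrying a single text prompt."""
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        GEMINI_URL.format(model=model),
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-goog-api-key": api_key},
        method="POST",
    )


def extract_text(response_json: dict) -> str:
    """Pull the first text part out of a generateContent-style response."""
    return response_json["candidates"][0]["content"]["parts"][0]["text"]


def ask_gemini(prompt: str, api_key: str) -> str:
    """Send the request; needs internet access and a valid key."""
    request = build_gemini_request(prompt, api_key)
    with urllib.request.urlopen(request, timeout=30) as response:
        return extract_text(json.load(response))
```

Keeping request construction separate from sending makes the payload easy to inspect offline, which is useful on an HMI whose network access may be restricted.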

Node-RED and MariaDB Integration

Node-RED is an open-source, flow-based development tool designed for visual programming, particularly suited for integrating devices, APIs, and online services. Node-RED offers a user-friendly, browser-based interface that allows users to wire together various components and create complex workflows through a simple drag-and-drop mechanism. This visual approach significantly reduces the barrier to entry, enabling both developers and non-developers to automate processes and build IoT applications efficiently.

MariaDB, a robust, open-source relational database management system, is known for its high performance, reliability, and security. It supports a wide array of storage engines and advanced clustering, and it is largely compatible with MySQL, making it a versatile solution for various database needs. Deploying MariaDB on the Raspberry Pi-powered HMI lets the device store and query operational data locally.

Enhancing User Interface with FlowFuse

FlowFuse builds on Node-RED by providing a set of nodes designed for creating data-driven dashboards and visualizations. Its latest offering, Dashboard 2.0, simplifies the process of building interactive and visually appealing user interfaces.

Operational Technology and Information Technology Integration

Integrating Operational Technology (OT) and Information Technology (IT) services in factory operations or smart buildings offers significant advantages, particularly when leveraging LLM APIs within existing systems. Traditional OT systems, such as factory automation, often operate in closed networks without internet connectivity. By incorporating LLMs, cloud-based where connectivity is permitted and locally deployed where it is not, these systems can extend their capabilities without compromising security and privacy.

This setup manages real-time operations efficiently and gives decision-makers and non-technical personnel easy access to data insights through natural language queries. Because the Raspberry Pi-powered HMI can run LLMs locally, sensitive information remains within the organization’s control while still supporting sophisticated data analysis and decision support.
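One possible way to connect natural language queries to the stored data is to ask the LLM for a SQL statement and check it before it reaches the database. The sketch below is a hypothetical arrangement, not a description of the setup above: the `sensor_readings` schema, the prompt wording, and the keyword list are illustrative assumptions, and the check is deliberately conservative rather than a complete SQL parser.

```python
import re

# Hypothetical schema used only for illustration.
EXAMPLE_SCHEMA = "sensor_readings(ts DATETIME, sensor_id VARCHAR(32), temperature FLOAT)"

# Keywords that indicate a write or schema change. Conservative: this also
# rejects harmless uses such as the REPLACE() string function.
FORBIDDEN = re.compile(
    r"\b(insert|update|delete|drop|alter|create|truncate|grant|replace)\b",
    re.IGNORECASE,
)


def build_sql_prompt(question: str, schema: str = EXAMPLE_SCHEMA) -> str:
    """Compose a prompt asking the model for a single read-only query."""
    return (
        "You translate questions into one MariaDB SELECT statement.\n"
        f"Schema: {schema}\n"
        f"Question: {question}\n"
        "Answer with SQL only."
    )


def is_read_only_select(sql: str) -> bool:
    """Accept only a single SELECT statement with no write keywords."""
    statement = sql.strip().rstrip(";").strip()
    if not statement or ";" in statement:
        return False  # empty, or more than one statement
    if not statement.lower().startswith("select"):
        return False
    return FORBIDDEN.search(statement) is None
```

A check like this complements, and does not replace, running the query under a database account that only has read permissions.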