What are Small Language Models (SLM)? | IBM

Small language models (SLMs) are artificial intelligence (AI) models capable of processing, understanding and generating natural language content. Unlike their larger counterparts, SLMs are designed to be more compact and efficient, making them ideal for resource-constrained environments and scenarios where AI inferencing needs to be done offline.

Understanding Small Language Models

In terms of size, SLMs typically have from a few million to a few billion parameters, whereas large language models (LLMs) can have hundreds of billions or even trillions. Parameters are internal variables, such as weights and biases, that a model learns during training, and they determine how a machine learning model behaves and performs.
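To make the scale concrete, the sketch below counts the weights and biases in a small stack of fully connected layers and estimates the memory needed to store them. The layer sizes and the helper names `count_dense_parameters` and `memory_bytes` are hypothetical, chosen only for illustration; real transformer models contain other kinds of parameters as well.

```python
def count_dense_parameters(layer_sizes):
    """Count weights and biases in a stack of fully connected layers."""
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        weights = n_in * n_out  # one weight per input-output connection
        biases = n_out          # one bias per output unit
        total += weights + biases
    return total


def memory_bytes(num_parameters, bytes_per_parameter=4):
    """Estimate storage, assuming 4 bytes (a 32-bit float) per parameter."""
    return num_parameters * bytes_per_parameter


# Hypothetical toy network: 512 inputs, one hidden layer of 2048 units, 512 outputs.
params = count_dense_parameters([512, 2048, 512])
print(params)                # 2099712
print(memory_bytes(params))  # 8398848
```

By the same arithmetic, a model with 1 billion parameters stored as 32-bit floats needs roughly 4 GB for its parameters alone, which is why parameter count matters so much in resource-constrained environments.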

Small language models are built on a neural network-based architecture known as the transformer model, which has become fundamental in the field of natural language processing (NLP). Transformers enable models to process and understand complex language patterns and relationships.

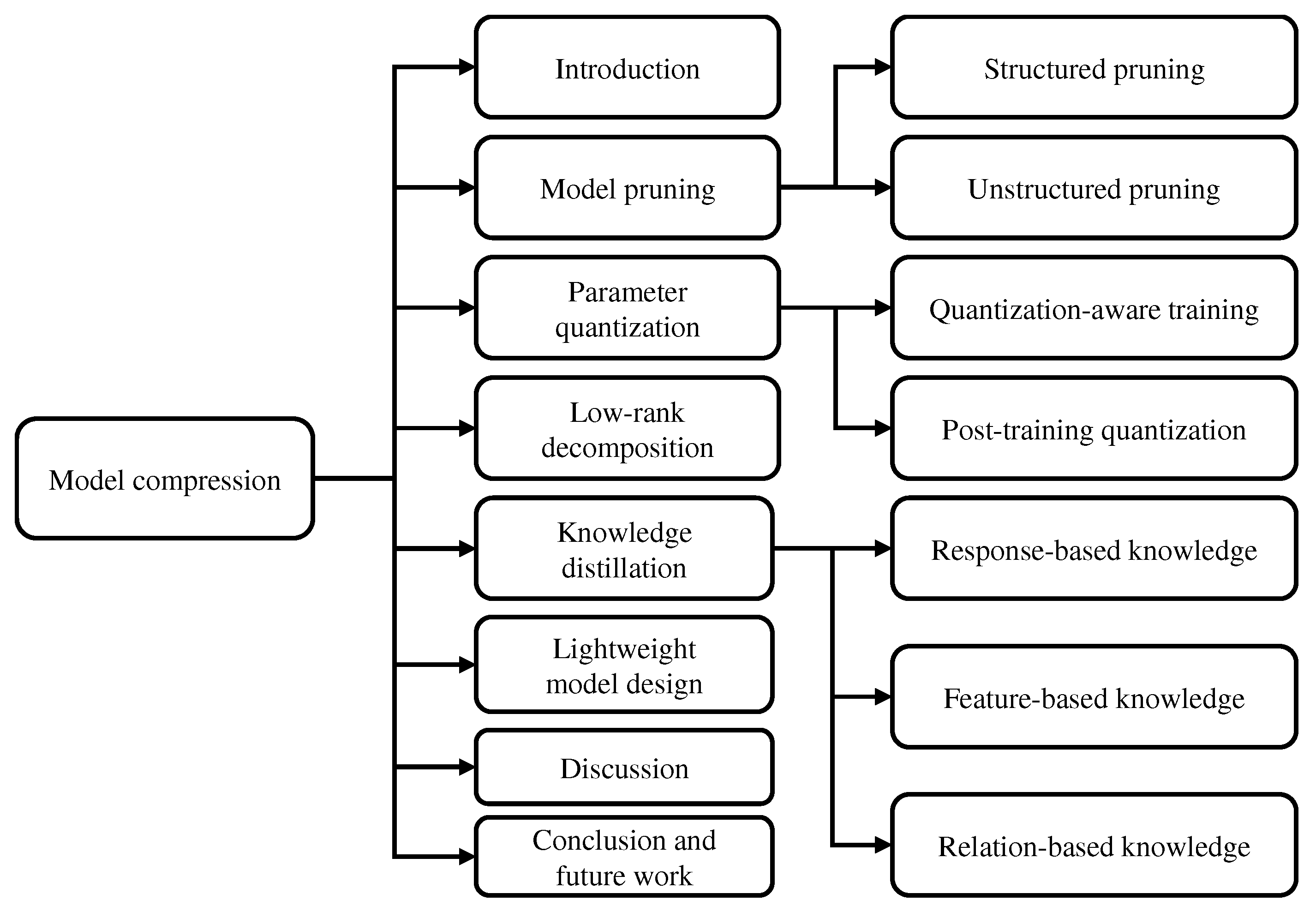

Model Compression Techniques

To build leaner models from larger ones, model compression techniques such as pruning, quantization, low-rank factorization, and knowledge distillation are applied. These methods reduce the size of the model while retaining as much of its accuracy as possible.

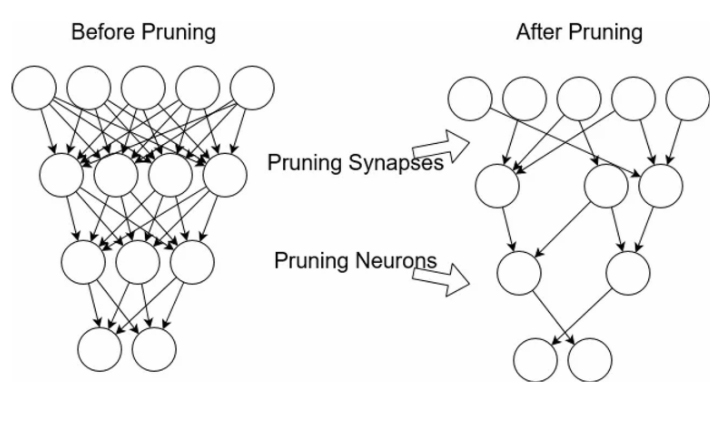

Pruning: Removes redundant or unnecessary parameters from a neural network, leading to a more streamlined model. Fine-tuning is often required after pruning to recover any accuracy lost in the process.
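As a minimal sketch of the idea, the example below applies magnitude-based pruning, which treats the weights with the smallest absolute values as the least important and sets them to zero. Magnitude is only one possible criterion and is an assumption here, as are the function name `magnitude_prune` and the sample weights.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the given fraction of weights with the smallest magnitude."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    num_to_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:num_to_prune]:
        pruned[i] = 0.0
    return pruned


weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]
print(magnitude_prune(weights, sparsity=0.5))
# [0.8, 0.0, 0.3, 0.0, -0.6, 0.0]
```

The zeroed entries can then be stored in a sparse format or skipped during computation, which is where the size and speed savings would come from.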

Quantization: Converts high-precision data to lower-precision data, for example by representing 32-bit floating-point weights as 8-bit integers, which lightens the computational load and speeds up inferencing.
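The following sketch illustrates one simple scheme, symmetric quantization to the 8-bit integer range with a single shared scale factor. The scheme, the function names `quantize_int8` and `dequantize`, and the sample values are assumptions for illustration; production toolkits offer more elaborate variants.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [round(v / scale) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate floats from the stored integers."""
    return [q * scale for q in quantized]


weights = [0.5, -1.27, 0.003, 1.0]
q, scale = quantize_int8(weights)
print(q)  # [50, -127, 0, 100]
print(dequantize(q, scale))
```

Each stored value now fits in one byte instead of four, at the cost of a rounding error of at most half the scale per value. Note that the small weight 0.003 is rounded to zero, which shows how precision is traded for size.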

Low-rank factorization: Approximates a large matrix of weights by the product of two or more smaller, lower-rank matrices, which reduces the number of parameters and simplifies computations.
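The sketch below shows why the factored form is cheaper. It starts from two hypothetical factors `A` and `B`, so the equivalence is exact; in practice the factors would be computed from an existing weight matrix (for example with a truncated singular value decomposition) and would only approximate it. All names and sizes are assumptions for illustration.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * x for a, x in zip(row, vector)) for row in matrix]


def matmul(left, right):
    """Multiply two matrices given as lists of rows."""
    columns = list(zip(*right))
    return [[sum(a * b for a, b in zip(row, col)) for col in columns]
            for row in left]


def factored_parameter_count(rows, cols, rank):
    """Entries in an (rows x rank) factor plus a (rank x cols) factor."""
    return rank * (rows + cols)


# Hypothetical rank-2 factors: A is 4x2 and B is 2x4, so W = A B is 4x4.
A = [[1, 0], [0, 1], [2, 1], [1, 3]]
B = [[1, 2, 0, 1], [0, 1, 1, 2]]
W = matmul(A, B)

x = [1, 2, 3, 4]
print(matvec(W, x))             # full matrix:   [9, 13, 31, 48]
print(matvec(A, matvec(B, x)))  # factors only:  [9, 13, 31, 48]

# Savings at a more realistic size: a 1024x1024 layer at rank 64.
print(1024 * 1024, factored_parameter_count(1024, 1024, 64))  # 1048576 131072
```

The savings grow with matrix size: the full matrix needs rows × cols entries, while the factors need only rank × (rows + cols).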

Knowledge distillation: Transfers knowledge from a larger "teacher model" to a smaller "student model," which is trained to reproduce the teacher's predictions and, in some approaches, to mimic its underlying reasoning process.
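One common way to express this transfer is a loss that measures how far the student's output distribution is from the teacher's, with both softened by a temperature. The sketch below computes such a loss as a Kullback-Leibler divergence. The formulation, the temperature of 2.0, the function names, and the sample logits are all assumptions for illustration, and the training loop that would minimize this loss is not shown.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert logits to probabilities; a higher temperature softens them."""
    scaled = [z / temperature for z in logits]
    max_scaled = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - max_scaled) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's softened distribution to the student's."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


teacher = [4.0, 1.0, 0.5]
close_student = [3.5, 1.2, 0.4]  # ranks the classes like the teacher
far_student = [0.5, 1.0, 4.0]    # prefers a different class

print(distillation_loss(teacher, close_student))  # small loss
print(distillation_loss(teacher, far_student))    # much larger loss
```

A student whose outputs match the teacher's exactly has a loss of zero, so minimizing this quantity pushes the student toward the teacher's behavior.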

Popular Small Language Models

Some examples of popular SLMs include DistilBERT, Gemma, GPT-4o mini, Granite, Llama, Ministral, and Phi. These models are optimized for various tasks and are designed to be more accessible and efficient than larger models.

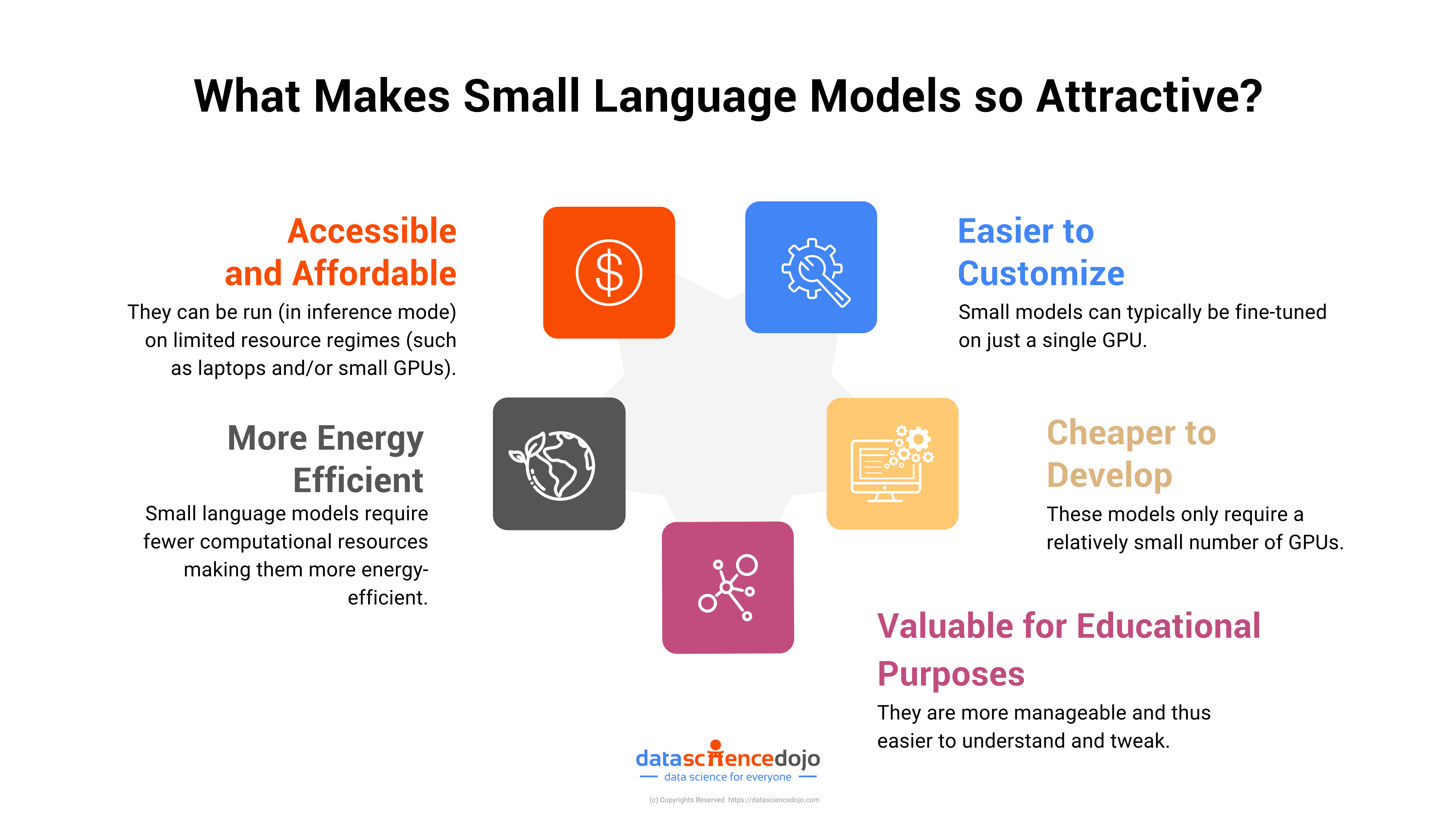

Advantages of Small Language Models

Despite their smaller size, SLMs offer several advantages:

- Accessibility: Researchers and developers can experiment with SLMs without the need for specialized equipment.

- Efficiency: SLMs are less resource-intensive, allowing for quicker training and deployment.

- Effective Performance: Small models can match or outperform larger models on certain tasks.

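- Privacy and Security Control: Because SLMs are small enough to run locally or offline, data does not have to leave the environment where the model is deployed, which allows for greater control over privacy and security.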

By leveraging the strengths of both large and small language models, organizations can optimize their AI capabilities to handle a wide range of tasks efficiently and effectively.