Building a Resilient GenAI Pipeline with an Open-Source AI Gateway

Generative AI is rapidly gaining traction, and companies are eager to integrate it into their workflows and use it to drive product innovation. As its popularity soars, new large language models (LLMs) and generative AI companies keep emerging. This surge in interest also brings its own set of challenges. Companies struggle to host large models on their own infrastructure, cache results, manage credentials, and handle a new kind of query: the prompt. Most critically, they must manage a diverse array of AI models, each with its own API structure and request format. This complexity makes it hard to set up failover and to switch efficiently between models, which costs significant time and resources.

Portkey AI offers an open-source AI Gateway that simplifies managing generative AI workflows. It supports multiple large language models (LLMs) from various providers, as well as multimodal inputs. By acting as a central hub between your application and these providers, the gateway streamlines communication and simplifies integration, improving your application's reliability, cost-effectiveness, and accuracy.

Getting Started with Portkey AI Gateway

Getting started with Portkey AI Gateway takes only a few simple steps. Make sure Node.js is installed on your machine. Then open the official GitHub repository and follow its setup instructions, which include a single command you can run in your terminal. Once the gateway is running, open http://localhost:8787 in your browser.

Using Google PaLM and Gemini Models with Portkey Gateway

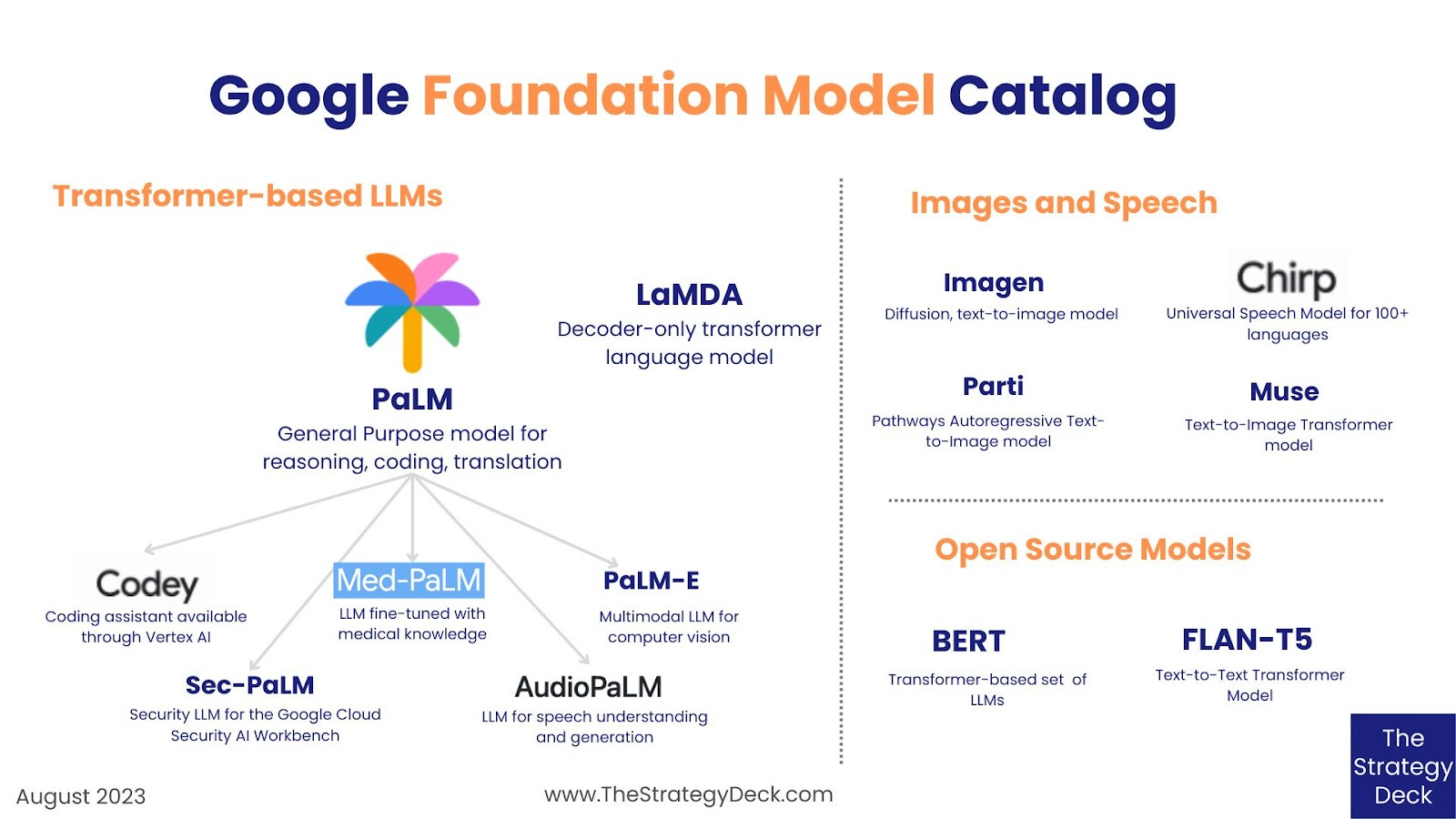

In this blog post, I will walk you through using Google PaLM and Gemini models with the Portkey gateway. First, you need a Google AI Studio API key. If you already have one, you can skip this step. Otherwise, sign in to Google AI Studio with your Google account and click "Create new key" in the left navigation menu.

To call the Portkey Gateway, you need your AI model's API key or secret key (for Google, the Google AI Studio API key) and the name of the model you want to test. Portkey currently supports specific API versions for each provider, such as v1beta for Google Gemini and v1beta3 for Google PaLM.
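As a minimal sketch of what such a call looks like, the Python snippet below builds (but does not send) a chat completion request for the gateway using only the standard library. It assumes that the gateway is running locally on port 8787, that it exposes an OpenAI-compatible `/v1/chat/completions` route, that it reads the target provider from an `x-portkey-provider` header, and that it takes the provider's key as a bearer token. Check these details against the gateway README for your version. The function name `build_gateway_request` is hypothetical.

```python
import json
import urllib.request

# Assumed address of a locally running gateway (default port 8787).
GATEWAY_URL = "http://localhost:8787/v1/chat/completions"


def build_gateway_request(provider: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build, but do not send, a chat completion request routed through the gateway."""
    # OpenAI-style request body; the gateway is assumed to translate it
    # into the provider's native format.
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    headers = {
        "Content-Type": "application/json",
        # Tells the gateway which upstream provider should receive the request.
        "x-portkey-provider": provider,
        # The provider's own key, e.g. your Google AI Studio API key.
        "Authorization": f"Bearer {api_key}",
    }
    return urllib.request.Request(
        GATEWAY_URL,
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )
```

To actually send it, pass the request to `urllib.request.urlopen`, for example `urllib.request.urlopen(build_gateway_request("google", os.environ["GOOGLE_API_KEY"], "gemini-pro", "Hello"))`. The environment variable name `GOOGLE_API_KEY` is only an assumption. Note that `urllib` normalizes header-name capitalization, which is harmless because HTTP header names are case-insensitive.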

According to the Google Gemini API reference, the Gemini model that supports generateContent is gemini-pro. You can call models from other providers, such as OpenAI or Ollama, in the same way, changing little more than the model name and API key.
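Switching providers also pays off on the response side. The gateway is designed to return an OpenAI-style chat completion regardless of the upstream provider, although you should verify this for each provider you use. If that holds, the code that reads the reply can stay the same whether the request went to gemini-pro or to an OpenAI model. Here is a minimal sketch of such a reader, using a hypothetical helper named `extract_reply`:

```python
from typing import Any, Mapping


def extract_reply(response: Mapping[str, Any]) -> str:
    """Return the assistant text from an OpenAI-style chat completion response.

    Assumes the gateway has already normalized the provider's native
    response into the {"choices": [{"message": {...}}]} shape.
    """
    choices = response.get("choices") or []
    if not choices:
        raise ValueError("response contains no choices")
    # The first choice holds the model's reply; the same lookup path is
    # expected to work for any provider the gateway normalizes.
    return choices[0]["message"]["content"]
```

In practice you would call it on the parsed HTTP response body, for example `extract_reply(json.load(urllib.request.urlopen(req)))`.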

Portkey AI Gateway helps make your GenAI pipeline resilient through features such as fallbacks. You provide a list of AI model APIs, and the gateway switches to the next one if the primary API fails. You can also configure automatic retries, caching, and load balancing to further improve the stability of your GenAI pipeline.
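A fallback setup might look roughly like the sketch below, which builds request headers carrying a JSON config in an `x-portkey-config` header. The config shape has a `strategy` with mode `fallback`, an ordered list of `targets`, a per-target `retry`, and `override_params` to pick each target's model. This shape is an assumption based on Portkey's config format, so confirm it against the configuration reference for your gateway version. The helper name `build_fallback_headers` and the secondary model `gpt-3.5-turbo` are hypothetical choices for illustration.

```python
import json
from typing import Dict


def build_fallback_headers(google_key: str, openai_key: str) -> Dict[str, str]:
    """Build request headers that carry a fallback config for the gateway.

    Assumed schema: a "strategy" with mode "fallback" plus an ordered
    list of "targets" that the gateway tries one after another.
    """
    config = {
        # Try targets in order and move to the next one when the current one fails.
        "strategy": {"mode": "fallback"},
        "targets": [
            {
                "provider": "google",
                "api_key": google_key,
                "override_params": {"model": "gemini-pro"},
                # Retry the primary target a few times before falling back.
                "retry": {"attempts": 3},
            },
            {
                # Hypothetical secondary target and model name.
                "provider": "openai",
                "api_key": openai_key,
                "override_params": {"model": "gpt-3.5-turbo"},
            },
        ],
    }
    return {
        "Content-Type": "application/json",
        # In this sketch the config, not an x-portkey-provider header,
        # selects which providers handle the request.
        "x-portkey-config": json.dumps(config),
    }
```

For load balancing, the same structure is assumed to work with `"mode": "loadbalance"` and a numeric `"weight"` on each target.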

Portkey AI Gateway simplifies managing generative AI workflows with multi-model support and easy integration. Features like automatic failover and efficient model switching make your application more reliable. Try Portkey AI Gateway today to make your GenAI pipeline more resilient.

For more details about Portkey AI Gateway's features and how to use them, visit https://docs.portkey.ai/docs/product/ai-gateway-streamline-llm-integrations.

Explore the Portkey configuration and API references: