TechLetters ☕️ Designing blockchain systems with data protection ...

My take: Google quietly killed its 7-year plan to kill third-party cookies. A big strategic reversal and a blow to web privacy. Let's hope it's not a sign of impending privacy deterioration.

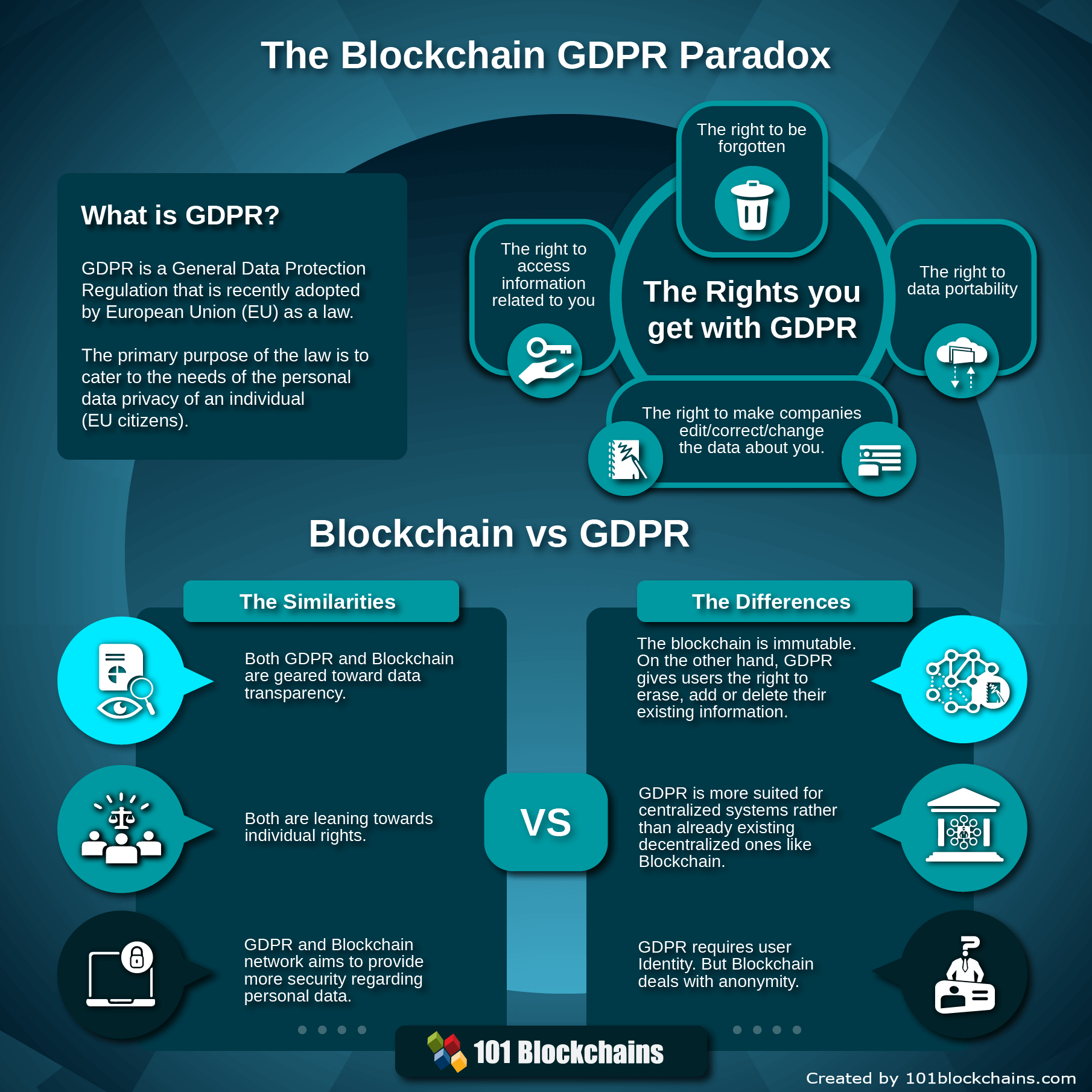

Blockchain and data protection (GDPR). My assessment.

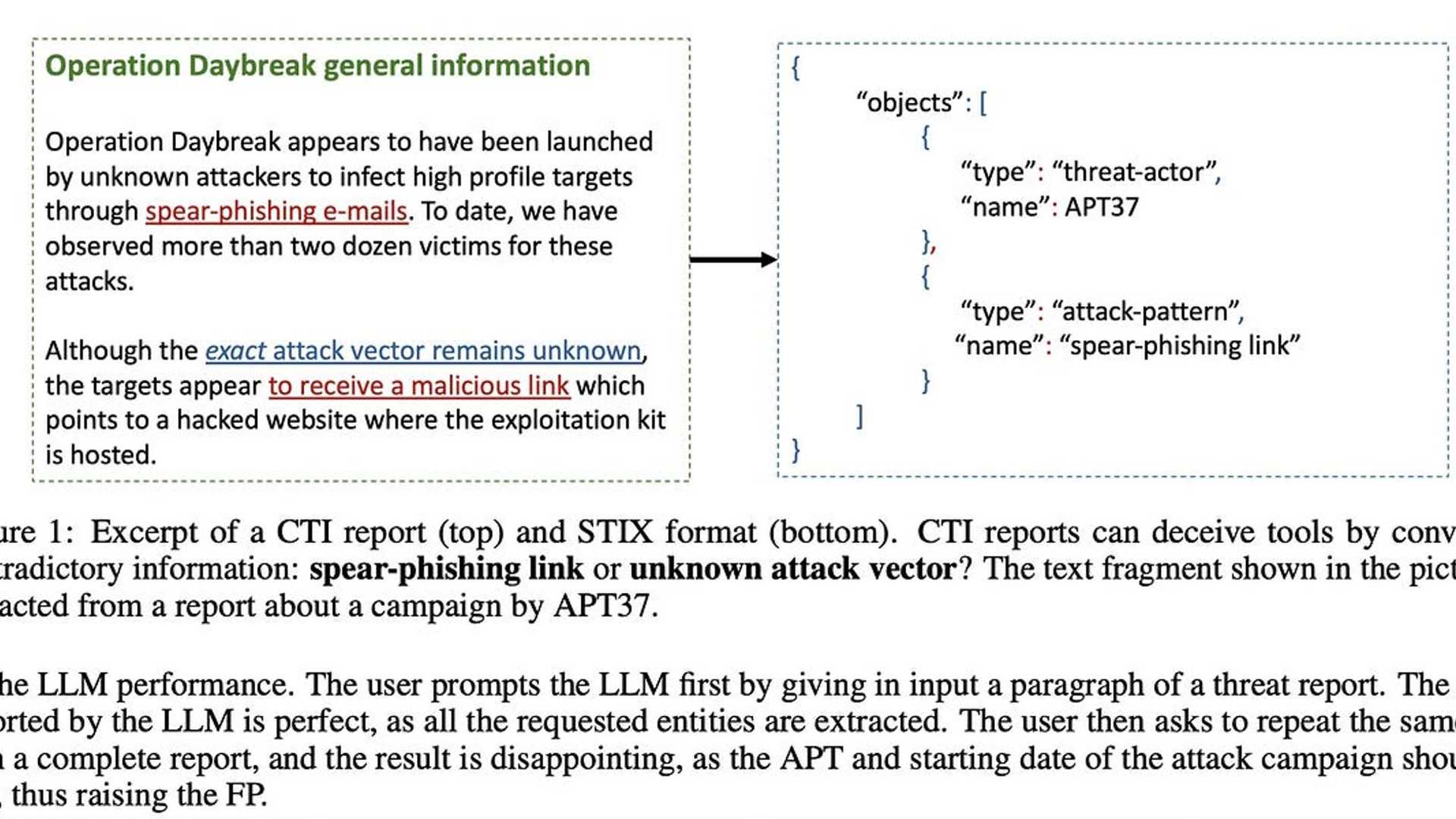

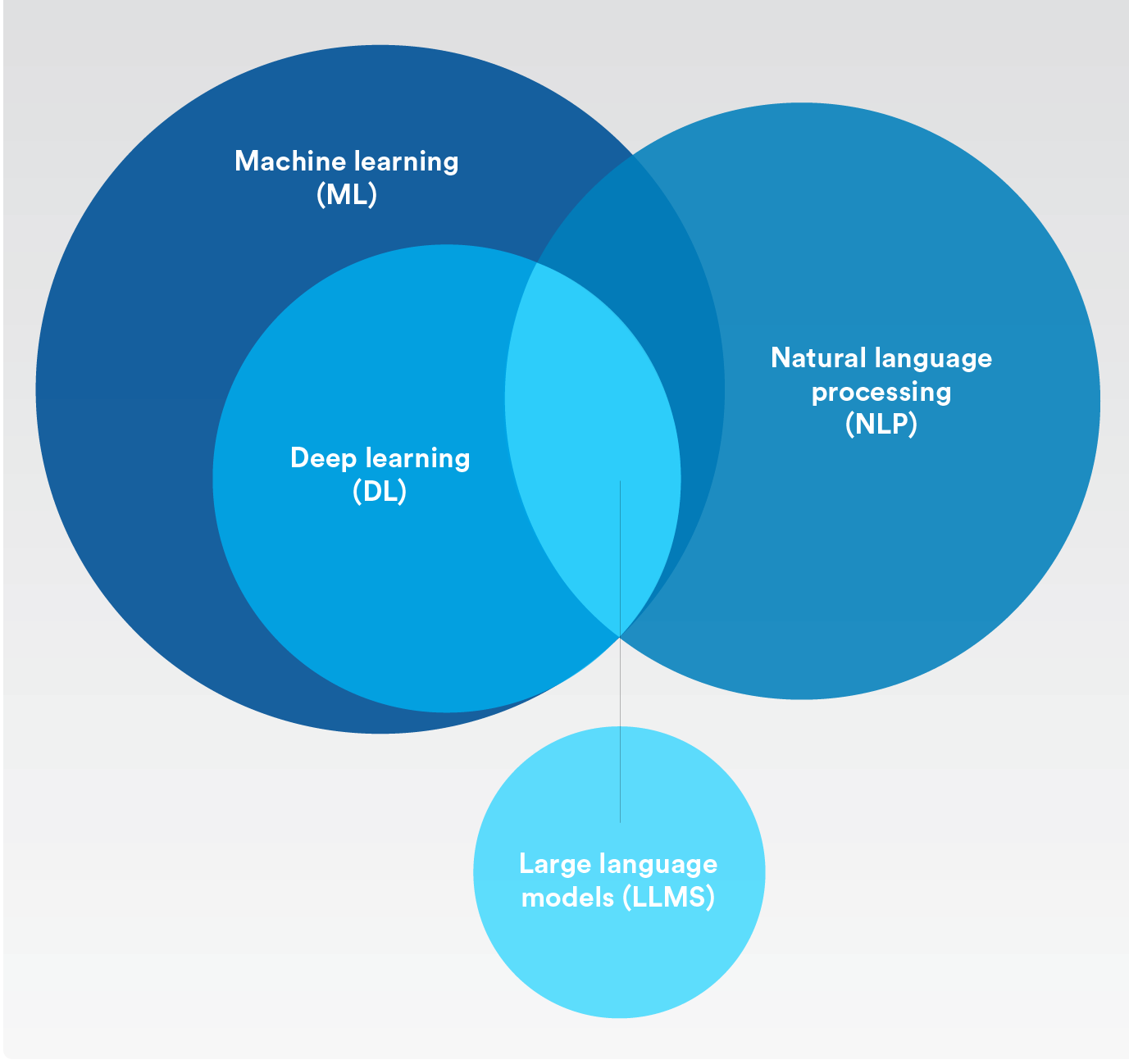

Large Language Models are not helpful in cyber threat intelligence

They may even make the results worse. Is that because CTI is based on judgment, and LLMs do whatever the user primes or asks them to decide or find? "The model might be attributing an attack campaign from a report to a threat actor that probably did not implement it, introducing false positives. The opposite case is also possible". In this sense, the use of LLMs introduces more ambiguity and uncertainty, the exact opposite of the goals of cyber threat intelligence (or, in fact, counterintelligence). More info...

A security and privacy vulnerability in the emerging AI infrastructure (Model Context Protocol), exploitable in a targeted manner

The attack leaks the entire conversation history, including any sensitive data. The tool just politely asks to steal all the data. Read more...


China issued wanted notices for three people...

The three allegedly carried out cyberattacks against China on behalf of the U.S. National Security Agency during the 9th Asian Winter Games. Chinese authorities also accuse the University of California. So what's that about? Because the story suddenly moves from the Winter Games to critical infrastructure and backdoors, and "actions are believed to exploit dormant vulnerabilities intentionally left in Windows". How credible is that? Find out more...

Russia quietly rewriting reality

The reality in LLMs, that is, and not through tanks or troops. It is done by feeding disinformation and propaganda into the tools people may increasingly trust to understand the world: AI chatbots. It's gaming of the system, feeding propaganda in ways that mean people might never know what’s happening. Efforts to influence chatbot results are growing, as former SEO marketers now use "generative engine optimization" (GEO) to boost visibility in AI-generated responses. This is a deliberate disinformation tactic targeting the intermediary channel (here, the AI chatbot), which increasingly functions as a trusted informational mediator. As these systems gain status as trusted default sources of expert knowledge, they are becoming an attractive vehicle for messaging crafted by actors engaged in influence operations. Why is this so dangerous? Because in this model, the recipient no longer perceives the origin as “Russian propaganda claims that…”, but rather as “my trusted AI assistant says that…”. This transfer of authority lowers the threshold for critical assessment and enhances the effectiveness of propaganda. Read on...

Google's plan to phase out third-party cookies in Chrome is officially over

Google now says that “the Privacy Sandbox APIs may have a different role to play in supporting the ecosystem”. That's disappointing. A bad sign for the future of privacy on the web.

Swiss government and instant messenger metadata

Instant messengers like Threema and e-mail/VPN providers like Proton are strongly opposed. Learn more...

Apple and Meta got the first EU Digital Markets Act fines

Apple got a €500 million penalty over its App Store. Meta has been fined €200 million for its 'Consent or Pay' model, since some services should be provided for free and without processing user data. Details here...

The Autoriteit Persoonsgegevens (AP), the Dutch Data Protection Authority, issued an unusual warning

It is urging Instagram and Facebook users in the Netherlands to take immediate action if they do not want their data and posts used to train artificial intelligence (AI) models. Users who wish to object must do so before 27 May 2025. After that date, Meta will automatically begin using the data of adult users, including posts, photos, and comments, for AI training. The AP emphasizes that once data has been incorporated into an AI model, it cannot be removed. It is therefore crucial for users who oppose this use of their data to submit an objection as soon as possible. DPAs are analysing whether this is compatible with the GDPR. More insights...

European Court of Justice may decide if just about any algorithms, including non-AI ones, are subject to the AI Act

That is, the EU regulation on Artificial Intelligence. It would be a fascinating expansion of the regulation's scope. Read more...

Quantum key distribution demonstrated in Germany across 254 km of standard telecom fibre

The link had 56 dB of total channel loss, with a relay node positioned ~60% of the way along it. The system achieved 110 bit/s using optical interference at a central receiver, which acted as an untrusted quantum repeater. This enables secure long-distance communication without trusted nodes, quantum memories, or cryogenic hardware. In-depth analysis...
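To put the QKD numbers in perspective, here is a small back-of-the-envelope sketch in Python. It uses only the figures quoted above (254 km, 56 dB, relay at ~60%). The assumption that loss is spread uniformly along the fibre is mine, for illustration only; the real segments may well differ.

```python
# Back-of-the-envelope loss budget for the 254 km QKD link.
# Inputs are the figures quoted in the text; everything derived is illustrative.

total_loss_db = 56.0    # total channel loss (from the text)
link_km = 254.0         # link length (from the text)
relay_fraction = 0.60   # relay at "~60%" of the link (approximate, from the text)

# Average attenuation per kilometre, with all losses lumped together.
loss_per_km = total_loss_db / link_km

# Fraction of optical power that would survive the whole link: 10^(-dB/10).
end_to_end_transmittance = 10 ** (-total_loss_db / 10)

# ASSUMPTION: loss is uniform along the fibre, so it splits 60/40 at the relay.
long_seg_db = total_loss_db * relay_fraction
short_seg_db = total_loss_db - long_seg_db
long_seg_t = 10 ** (-long_seg_db / 10)
short_seg_t = 10 ** (-short_seg_db / 10)

print(f"average loss: {loss_per_km:.3f} dB/km")
print(f"end-to-end transmittance: {end_to_end_transmittance:.2e}")
print(f"longer segment: {long_seg_db:.1f} dB, transmittance {long_seg_t:.2e}")
print(f"shorter segment: {short_seg_db:.1f} dB, transmittance {short_seg_t:.2e}")
```

That works out to roughly 0.22 dB/km on average, and only about 2.5 photons in a million would survive the full 56 dB. Each party only has to reach the relay, not the far end, so each segment faces far less loss than the whole link.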