Google announces Gemini Robotics, a Gemini 2.0 model optimized for Robotics

Introduction to Gemini Robotics

Google DeepMind has made steady progress across AI, with recent updates to Gemini, Imagen, Veo, Gemma, and AlphaFold. Its latest announcement is Gemini Robotics, which brings the Gemini 2.0 model family to robotics.

Gemini Robotics: A Breakthrough in AI

Gemini Robotics is an advanced vision-language-action (VLA) model built on Gemini 2.0. It adds physical actions as a new output modality so that the model can control robots directly. According to Google, the model handles situations it did not encounter during training and outperforms other models on generalization benchmarks. Its natural language understanding also spans multiple languages, which broadens how people can interact with it.

Precision and Dexterity

Google emphasizes that Gemini Robotics is highly dexterous and can carry out intricate, multi-step tasks with precision. Tasks such as origami folding and careful object handling demonstrate the model's capabilities in real-world scenarios.

Gemini Robotics-ER: Enhancing Spatial Reasoning

A second model, Gemini Robotics-ER (ER stands for embodied reasoning), focuses on spatial reasoning and is designed so that roboticists can connect it to their existing low-level controllers. According to Google, it covers the steps needed to control a robot: perception, spatial understanding, planning, and code generation.
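
To make the split between a high-level reasoning model and a low-level controller more concrete, here is a minimal Python sketch. It is not Google's API: every name in it (`Detection`, `Step`, `plan_pick_and_place`, `execute`, and the `move_to`, `grasp`, and `release` primitives) is hypothetical, and the hand-written planner simply stands in for the model. It only illustrates the flow described above, in which a perceived scene becomes a plan and the plan is dispatched to controller primitives that already exist.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in metres, robot base frame


@dataclass
class Detection:
    """One perceived object (hypothetical output of the perception step)."""
    label: str
    position: Point


@dataclass
class Step:
    """One instruction for the low-level controller (hypothetical format)."""
    primitive: str
    target: Point


def plan_pick_and_place(scene: List[Detection], obj: str, dest: str) -> List[Step]:
    """Stand-in for the reasoning model: turn a scene into an ordered plan."""
    positions = {d.label: d.position for d in scene}
    if obj not in positions or dest not in positions:
        raise ValueError(f"scene must contain both {obj!r} and {dest!r}")
    ox, oy, oz = positions[obj]
    dx, dy, dz = positions[dest]
    hover = 0.10  # approach from 10 cm above (assumed safety margin)
    return [
        Step("move_to", (ox, oy, oz + hover)),
        Step("move_to", (ox, oy, oz)),
        Step("grasp", (ox, oy, oz)),
        Step("move_to", (dx, dy, dz + hover)),
        Step("release", (dx, dy, dz + hover)),
    ]


def execute(plan: List[Step], controller: Dict[str, Callable[[Point], None]]) -> None:
    """Dispatch each step to the matching primitive of an existing controller."""
    for step in plan:
        controller[step.primitive](step.target)


if __name__ == "__main__":
    scene = [Detection("cup", (0.4, 0.1, 0.0)), Detection("tray", (0.2, -0.3, 0.0))]
    # A fake controller that prints each command instead of moving a robot.
    controller = {
        name: (lambda target, name=name: print(name, target))
        for name in ("move_to", "grasp", "release")
    }
    execute(plan_pick_and_place(scene, "cup", "tray"), controller)
```

The point of the design is that the planner only needs to know the names of the controller's primitives, so the same controller can be reused whichever model produces the plan.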

Partnerships and Future Prospects

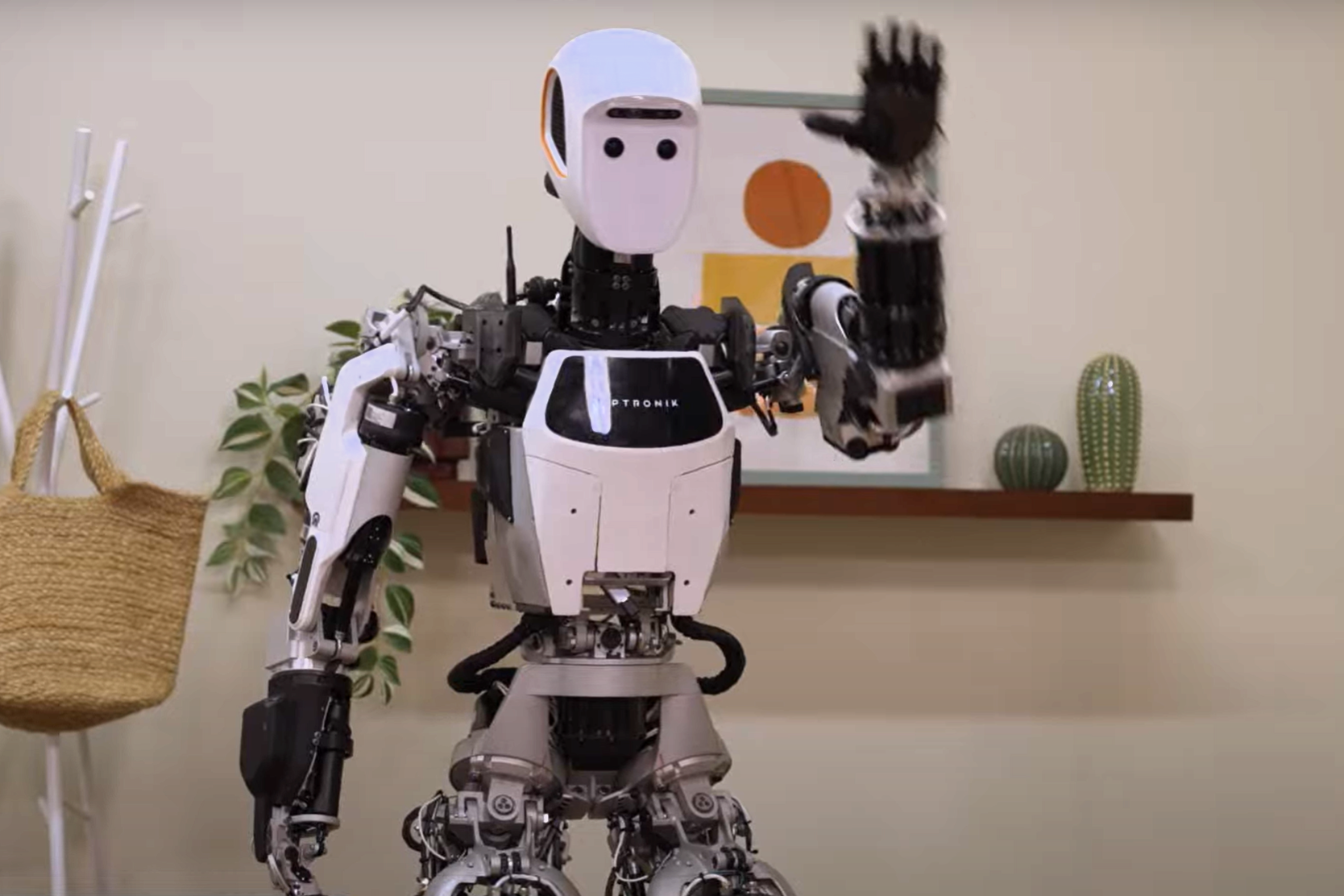

Google is collaborating with Apptronik to develop humanoid robots based on Gemini 2.0 models. Gemini Robotics-ER is also being tested by partners such as Agile Robots and Boston Dynamics, which points to potential applications in a range of settings.

Conclusion

By enabling robots to understand and carry out complex tasks with precision, Google DeepMind's Gemini Robotics is a step toward robots that are useful across a wider range of everyday settings.