Tactile Sensing in Robotics

Tactile sensing is a critical component of robotics: it lets machines perceive and physically interact with their surroundings. For many robotic tasks, the ability to touch and feel matters as much as the ability to see.

Challenges of Vision-Based Tactile Sensors

Existing vision-based tactile sensors, however, face significant hurdles. They vary in shape, size, and surface characteristics, which makes a universal solution difficult to build. Traditional models are usually tailored to a specific task or sensor, so they do not scale well across diverse applications. In addition, collecting labeled data for key properties such as force and slip is slow and resource-intensive, which further holds back progress in tactile sensing.

Introducing Sparsh: The First General-Purpose Encoder

In response to these challenges, Meta AI has released Sparsh, the first general-purpose encoder for vision-based tactile sensing. Named after the Sanskrit word for "touch," Sparsh marks a shift from sensor-specific models toward a more adaptable and scalable approach. Building on recent advances in self-supervised learning (SSL), Sparsh learns touch representations that transfer across a broad range of vision-based tactile sensors.

Sparsh does not rely on task-specific labeled data; instead, it is trained on more than 460,000 unlabeled tactile images collected from a variety of sensors. Because its pretraining needs no labels, Sparsh can be applied to a wider range of tasks than traditional tactile models, which are each trained for a single labeled task.
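To illustrate how unlabeled images can still provide a training signal, here is a minimal sketch of masked-patch target construction in the style of masked-image SSL methods (MAE-style models reconstruct the hidden pixels; JEPA-style models instead predict representations of the hidden regions). This is not Sparsh's data pipeline: the patch size, mask ratio, and the function names `split_into_patches` and `make_masked_example` are hypothetical choices for illustration.

```python
import random
from typing import Dict, List, Optional, Tuple

Patch = List[List[float]]  # a small 2-D block of pixel intensities


def split_into_patches(image: List[List[float]], patch: int) -> List[Patch]:
    """Cut an H x W grayscale image into non-overlapping patch x patch blocks, row-major."""
    h, w = len(image), len(image[0])
    if h % patch or w % patch:
        raise ValueError("image size must be divisible by the patch size")
    patches = []
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            patches.append([row[left:left + patch] for row in image[top:top + patch]])
    return patches


def make_masked_example(
    image: List[List[float]], patch: int = 4, mask_ratio: float = 0.75, seed: Optional[int] = None
) -> Tuple[Dict[int, Patch], Dict[int, Patch]]:
    """Turn one unlabeled image into a (context, targets) pair.

    Visible patches form the model input; hidden patches become the prediction
    targets, so no human-provided label is needed. (Hypothetical parameters.)
    """
    rng = random.Random(seed)
    patches = split_into_patches(image, patch)
    n_mask = int(len(patches) * mask_ratio)
    masked_ids = set(rng.sample(range(len(patches)), n_mask))
    context = {i: p for i, p in enumerate(patches) if i not in masked_ids}
    targets = {i: p for i, p in enumerate(patches) if i in masked_ids}
    return context, targets
```

For a 16 x 16 image with 4 x 4 patches there are 16 patches; a mask ratio of 0.75 hides 12 of them and leaves 4 visible as context. The supervision comes entirely from the image itself.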

State-of-the-Art SSL Models

Sparsh builds on state-of-the-art SSL methods such as DINO and JEPA, adapted to the tactile domain. This adaptation lets Sparsh generalize across sensor types such as DIGIT and GelSight and perform well on a range of tasks. Pretrained on a large corpus of unlabeled tactile images, the Sparsh family of encoders serves as a foundation for downstream models, removing the need for manual labeling during pretraining and simplifying training.
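As a rough illustration of one of these methods, the sketch below implements the core pieces of the original DINO objective for a single (teacher view, student view) pair: a sharpened, centered teacher distribution, a cross-entropy loss against the student, an exponential-moving-average (EMA) teacher update, and a running center. It follows the general DINO recipe, not Sparsh's tactile adaptation; the temperatures and momentum values are assumed, commonly cited defaults, and parameters are plain lists of floats rather than real network weights.

```python
import math
from typing import List


def log_softmax(logits: List[float], temperature: float) -> List[float]:
    """Temperature-scaled log-softmax, computed with the log-sum-exp trick."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    log_z = m + math.log(sum(math.exp(x - m) for x in scaled))
    return [x - log_z for x in scaled]


def softmax(logits: List[float], temperature: float) -> List[float]:
    return [math.exp(v) for v in log_softmax(logits, temperature)]


def dino_loss(student_logits: List[float], teacher_logits: List[float], center: List[float],
              t_student: float = 0.1, t_teacher: float = 0.04) -> float:
    """Cross-entropy H(teacher, student) for one pair of views.

    The teacher output is centered and sharpened with a low temperature;
    in real training no gradient flows through the teacher.
    """
    teacher_probs = softmax([t - c for t, c in zip(teacher_logits, center)], t_teacher)
    student_logp = log_softmax(student_logits, t_student)
    return -sum(p * lp for p, lp in zip(teacher_probs, student_logp))


def ema_update(teacher_params: List[float], student_params: List[float],
               momentum: float = 0.996) -> List[float]:
    """Teacher weights track an exponential moving average of the student weights."""
    return [momentum * t + (1.0 - momentum) * s for t, s in zip(teacher_params, student_params)]


def update_center(center: List[float], teacher_batch: List[List[float]],
                  momentum: float = 0.9) -> List[float]:
    """Running mean of teacher outputs; subtracting it helps prevent collapse."""
    batch_mean = [sum(col) / len(teacher_batch) for col in zip(*teacher_batch)]
    return [momentum * c + (1.0 - momentum) * b for c, b in zip(center, batch_mean)]
```

When the student's output agrees with the teacher's, the loss is near zero; when it ranks the classes in the opposite order, the loss is large. Centering discourages any single output dimension from dominating, and sharpening discourages a uniform output, which together help avoid collapsed representations without labels.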

The Sparsh Framework

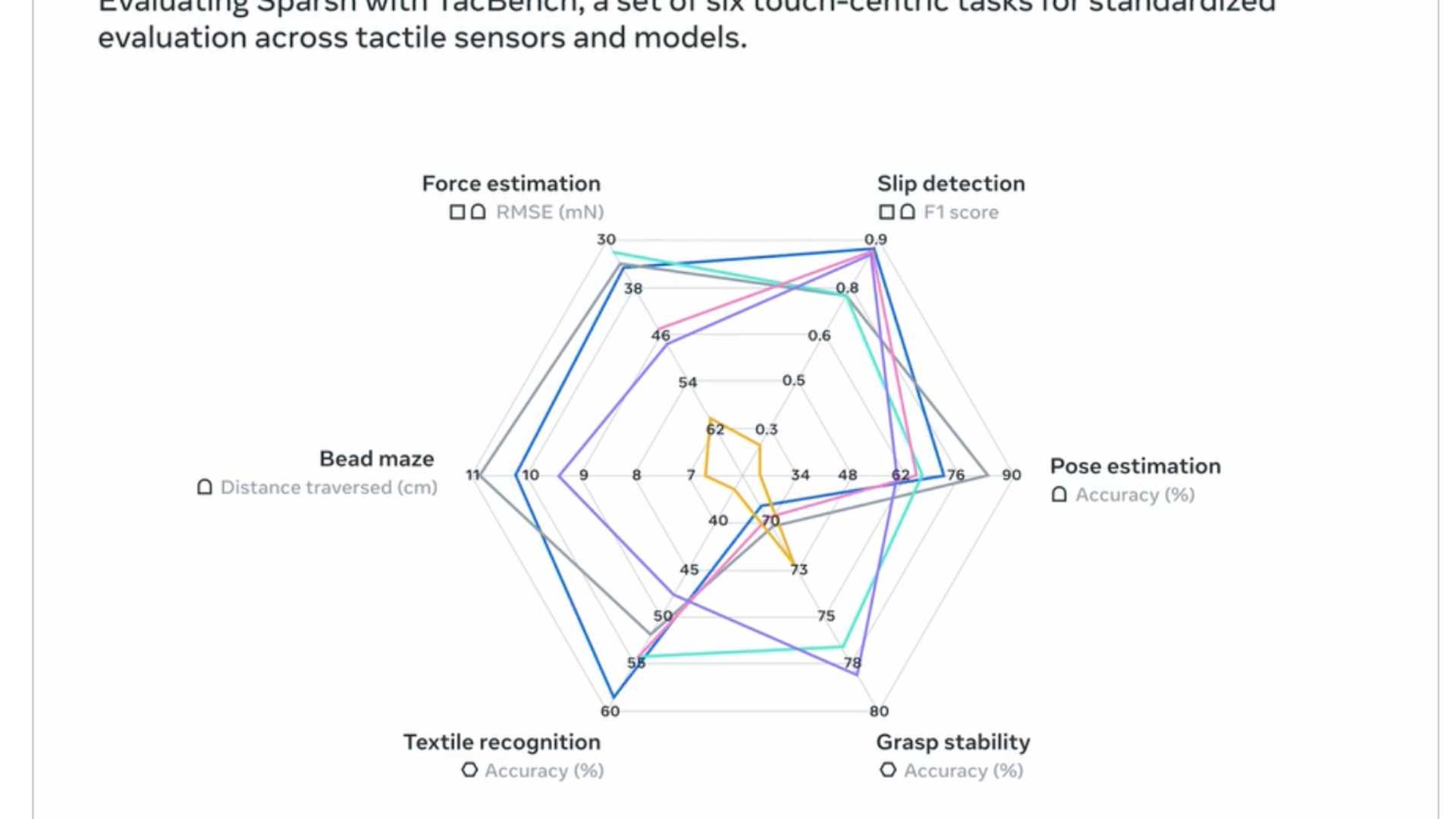

The Sparsh framework also includes TacBench, a benchmark of six touch-centric tasks: force estimation, slip detection, pose estimation, grasp stability, textile recognition, and dexterous manipulation. On these tasks, Sparsh outperforms traditional sensor-specific solutions by an average of 95% while using only 33-50% of the labeled data.
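A common protocol for evaluating a general-purpose encoder is to freeze it and train only a lightweight task head on a limited fraction of the labels. The sketch below shows that pattern in miniature under loudly stated assumptions: `frozen_encoder` is a hypothetical stand-in that returns a single feature (mean intensity), the "probe" is a one-feature linear regression fit by closed-form least squares, and the force labels are synthetic. Sparsh's actual encoders, decoders, and TacBench tasks are far richer than this.

```python
import random
from statistics import fmean
from typing import List, Sequence, Tuple

Example = Tuple[List[float], float]  # (tactile image as a flat pixel list, force label)


def frozen_encoder(image: Sequence[float]) -> float:
    """HYPOTHETICAL stand-in for a pretrained touch encoder.

    Maps an image to one feature (mean intensity) and is never updated
    while the probe is trained.
    """
    return fmean(image)


def fit_probe(features: Sequence[float], targets: Sequence[float]) -> Tuple[float, float]:
    """Closed-form ordinary least squares for y ~ slope * x + intercept."""
    mx, my = fmean(features), fmean(targets)
    sxx = sum((x - mx) ** 2 for x in features)
    sxy = sum((x - mx) * (y - my) for x, y in zip(features, targets))
    slope = sxy / sxx
    return slope, my - slope * mx


def train_with_label_budget(dataset: List[Example], fraction: float,
                            seed: int = 0) -> Tuple[float, float]:
    """Label only a random fraction of the data, then fit the probe on frozen features."""
    rng = random.Random(seed)
    k = max(2, int(len(dataset) * fraction))  # a line needs at least two points
    subset = rng.sample(dataset, k)
    features = [frozen_encoder(image) for image, _ in subset]
    labels = [label for _, label in subset]
    return fit_probe(features, labels)
```

With synthetic labels that are an exact linear function of the encoder's feature, a probe fit on roughly a third of the examples already recovers the underlying slope and intercept. The design point is that the expensive representation is learned once without labels, so each new task only pays for a small labeled set.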

Implications of Sparsh

The release of Sparsh by Meta AI is a significant step toward building physical intelligence with AI. By offering a family of general-purpose touch encoders, Meta aims to help researchers develop scalable solutions for robotics and AI. Because Sparsh is pretrained with self-supervised learning, it avoids most of the laborious collection of labeled data, needing only a modest labeled set for each downstream task, which opens the door to more sophisticated tactile applications.

Sparsh's strong results across tasks and sensors on the TacBench benchmark suggest it could be useful in many fields. From industrial robots to household automation, integrating Sparsh could improve robots' tactile precision and, with it, their physical intelligence.

For more information, see the official paper, the GitHub repository, and the models on Hugging Face.