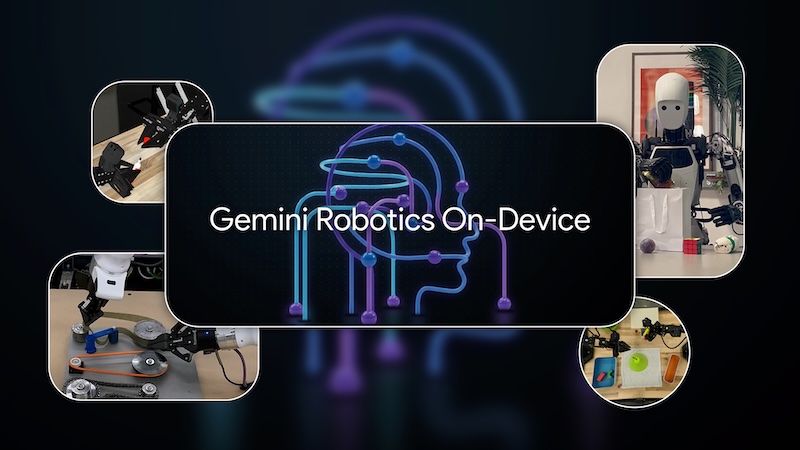

Google DeepMind has announced an on-device version of its Gemini Robotics AI model. The new vision-language-action (VLA) model is small and efficient enough to run directly on a robot, with no internet connection required, which marks a significant step for robotics.

Model Evolution

Carolina Parada, head of robotics at Google DeepMind, explained that the original Gemini Robotics model uses a hybrid approach, allowing it to run both on-device and in the cloud. The new model runs entirely on-device, and its offline capabilities come close to those of the flagship version. It can perform a range of tasks out of the box and adapt to new situations with just 50 to 100 demonstrations.

Versatility

Google trained the on-device model only on its ALOHA robot, but was able to adapt it to other types of robots, including Apptronik's Apollo humanoid and the bi-arm Franka FR3. Parada said, "The Gemini Robotics hybrid model is still more powerful, but we're actually quite surprised at how strong this on-device model is."

Developer Tools

Alongside the model, Google is releasing a software development kit (SDK) that lets developers evaluate and fine-tune it.