AMD, Intel, Google, Microsoft, Meta, and others establish UALink, an open industry standard for AI accelerator interconnects

AMD, Intel, Google, Microsoft, Meta, Broadcom, Cisco, and Hewlett Packard Enterprise (HPE) have joined forces to introduce a new open industry standard known as Ultra Accelerator Link (UALink). This standard aims to facilitate high-speed, low-latency communication for next-generation AI accelerators within data centers.

UALink is intended to improve the interconnection between AI accelerators. The initiative is expected to simplify integration for system OEMs, IT professionals, and system integrators, and to offer greater flexibility and scalability in AI data centers. A key objective is an open, high-performance environment optimized for AI workloads.

Enabling Enhanced AI Computing

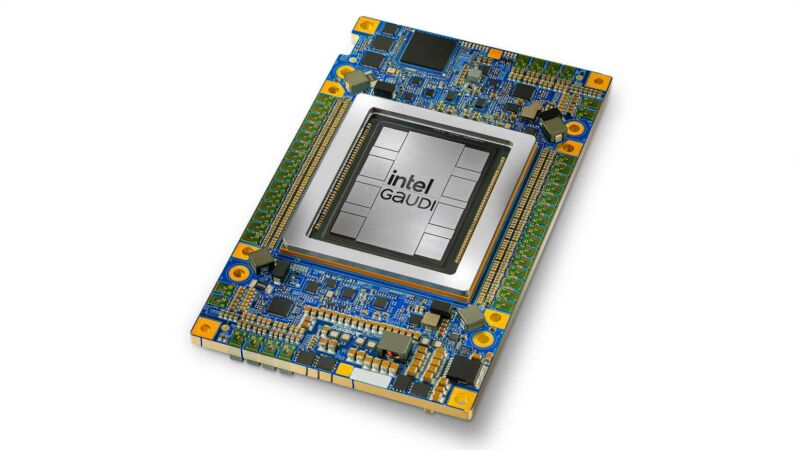

Version 1.0 of UALink is designed to connect up to 1,024 accelerators in a single AI computing pod over a reliable, scalable, low-latency network. The specification allows data to move directly between the memory attached to accelerators such as AMD's Instinct and Intel's Gaudi, improving the performance and efficiency of AI computing.

Establishing the UALink Consortium

The eight companies expect to form the UALink Consortium in the third quarter of 2024, and the detailed UALink 1.0 specification is set to be released in the same quarter.

'The work being undertaken by UALink companies is crucial for the evolution of AI, aiming to create a scalable accelerator fabric that is open and high-performing,' stated Forrest Norrod, Executive Vice President and General Manager of the Data Center Solutions Group at AMD. He emphasized AMD's commitment to contributing expertise, technology, and capabilities to advance AI technology and strengthen the open AI ecosystem.

Similarly, Sachin Katti, Senior Vice President and General Manager of Intel's Network and Edge division, expressed Intel's pride in co-leading the effort. Intel looks forward to fostering industry innovation and delivering customer value through the UALink standard, building on its existing leadership in AI connectivity.

Industry Responses

A report from Tom's Hardware noted that AMD, Broadcom, Google, Intel, Meta, and Microsoft are all involved in developing AI accelerators, while Cisco and HPE contribute network chips and servers, respectively. By collaborating in the UALink Consortium, these companies aim to standardize their chip infrastructure. NVIDIA, a dominant force in AI hardware, has its own infrastructure and is therefore not involved in UALink's development.

As the UALink Consortium gains momentum, it represents a significant move by these companies to compete with NVIDIA in artificial intelligence computing.