Supercharge your Productivity with Visual Language Models!

Over the past year, I have been consistently impressed by ChatGPT’s ability to handle visual tasks. The advanced version, ChatGPT-4V, initially introduced these visual processing features. However, it now appears that ChatGPT-4V is no longer a separate option, likely because its capabilities have been integrated into the current ChatGPT-4o model.

My first experience with ChatGPT-4V was while assisting my daughter in preparing for a difficult physics exam, required for university admission in the Netherlands. The exam consists of five complex, multi-page problems.

While chatbots like ChatGPT are excellent at predicting the next word based on context, they typically struggle with tasks that require reasoning. Initially, I tried uploading PDF files of previous exams and asking ChatGPT to solve them, but as expected, this approach didn’t work. Then, almost by chance, I converted the PDF files to PNG images and uploaded those instead. To my surprise, ChatGPT correctly solved about 80% of the questions using just a brief prompt. Most errors were due to copying incorrect numbers from the image, but the reasoning behind the solutions was accurate.

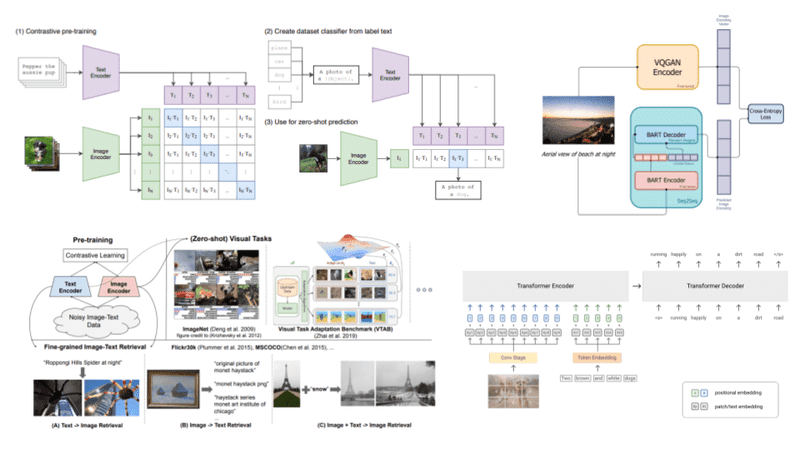

The Power of Vision-Language Models (VLMs)

Vision-Language Models (VLMs) are multimodal AI systems that combine a large language model (LLM) with a vision encoder, enabling the LLM to see. These models are versatile, context-aware, and ideal for complex tasks such as robotics, where the robot can respond to both visual and verbal commands.

VLMs are quickly becoming the go-to tool for all types of vision-related tasks due to their flexibility and natural language understanding. They are capable of tasks such as image analysis, visual Q&A, image and video summarization, and solving complex math and physics problems.

Applications of Vision-Language Models

With vast amounts of video being produced every day, it is infeasible to review and extract insights from this volume of video that is produced by all industries. VLMs can be integrated into a larger system to build visual AI agents capable of detecting specific events when prompted.

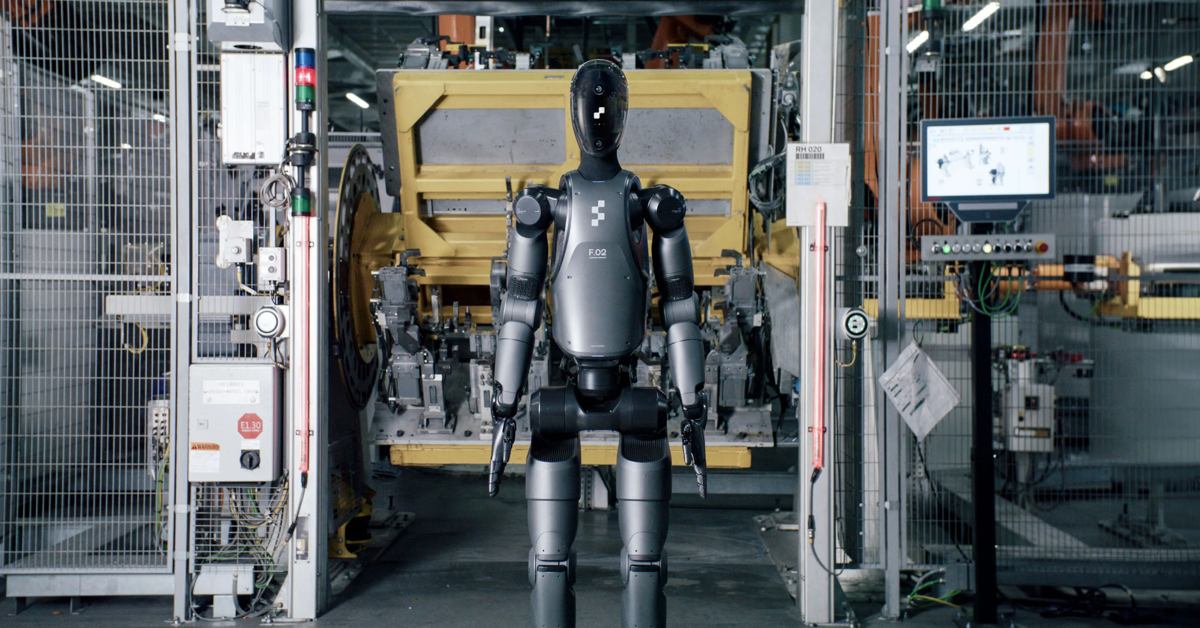

Advances in deep learning, reinforcement learning, and multimodal AI have significantly enhanced robotic perception, reasoning, and decision-making. VLMs enable robots to interpret both visual data and natural language commands, facilitating contextual understanding.

Challenges and Ethical Considerations

Implementing VLMs in robotics presents several challenges, including high costs, technical complexity, and ethical concerns. The increased automation of tasks traditionally performed by humans raises ethical issues around job displacement and data misuse.

The rise of Vision-Language Models (VLMs) is redefining the landscape of automation. But as they advance, so too must our conversation on the ethical implications. Are we ready to handle a future where machines interpret and act on visual and verbal cues, potentially outperforming human capabilities?

If you would like to read more blogs about AI go to Rainmakers SG