AI: Lessons from Amazon Alexa LLM AI retrofit. RTZ #389

Hello Computer of Star Trek fame. Voice Assistants, AI Version 2.0.

The Bigger Picture, Sunday, June 15, 2024

For the AI ‘Bigger Picture’ today, I’d like to discuss the challenges of ‘grafting’ new AI driven ‘Voice Assistants’ onto existing Voice Assistants like Apple Siri, Amazon Alexa, Google Assistant and others.

This is timely, coming right after we’ve seen what Apple has planned for its early-to-market but now lagging Siri service: on its own, in partnership with OpenAI, and potentially with others. And it’ll all take a bit longer than we’d like.

AI Driven ‘Voice Assistance’ Augmentation

As I’ve discussed in these pages before, for billions of mainstream users worldwide, the next eighteen-plus months are going to see AI driven ‘Voice Assistance’ augmenting the text-driven ‘Chatbot’ AI assistance that’s been all the rage for the last eighteen months, thanks to OpenAI’s ChatGPT.

New multimodal AI Voice capabilities on everything from OpenAI’s market-leading GPT-4o (Omni), to Anthropic’s Claude, to Google’s Gemini and many others, will be eager to answer user prompts and queries in voices as natural and engaging as possible. And they’ll be ‘Agentic’ to boot, offering personalized ‘smart agent’ ‘reasoning’ AI assistance along the way.

Distribution Challenges

But the key for all of the pure multimodal LLM AI chatbots will be DISTRIBUTION. The next question is how to connect them to the hundreds of millions of mainstream users already using the previous generation of ‘Voice Assistants’. These assistants are distributed in vast numbers, backed by tens of billions in investments by Amazon for Alexa/Echo, Apple for Siri/HomePods, Google for Google Assistant/Nest, and many others.

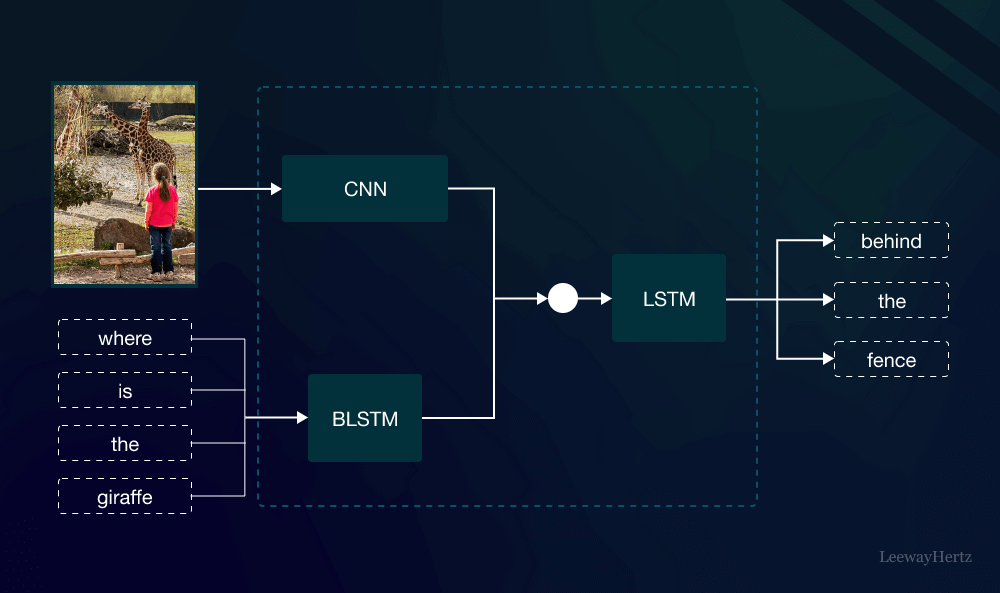

Those were all built using older ML (machine learning) technologies, the ‘old AI’ tech before the current LLM (Large Language Model)/Generative AI of OpenAI ChatGPT fame.

Amazon alone has over half a billion Alexa units in homes worldwide. Apple’s existing Siri service sees over a billion and a half user queries a day from Apple users worldwide, via iPhones and other Apple devices like HomePods, CarPlay, and Apple wearables.

Challenges with AI Retrofit

It’s not easy to just perform an LLM/Generative AI ‘brain transplant’ on existing Voice Assistants. As The Verge explains in “Amazon is reportedly way behind on its new Alexa”:

“According to Fortune, the more conversational, smarter voice assistant Amazon demoed last year isn’t close to ready and may never be.”

“In the voice assistant arms race, the frontrunner may be about to finish last. On the heels of Apple revealing a new “Apple Intelligence”-powered Siri at its WWDC 2024 conference, a new report from Fortune indicates that Amazon’s Alexa — arguably the most capable of the current voice assistants — is struggling with its own generative AI makeover:”

... none of the sources Fortune spoke with believe Alexa is close to accomplishing Amazon’s mission of being “the world’s best personal assistant,” let alone Amazon founder Jeff Bezos’ vision of creating a real-life version of the helpful Star Trek computer. Instead, Amazon’s Alexa runs the risk of becoming a digital relic with a cautionary tale — that of a potentially game-changing technology that got stuck playing the wrong game.

As Fortune itself explains in its detailed “How Amazon blew Alexa’s shot to dominate AI”:

“The new Alexa LLM, the company said, would soon be available as a free preview on Alexa-powered devices in the US. Rohit Prasad, Amazon’s SVP and Alexa leader said the news marked a “massive transformation of the assistant we love,” and called the new Alexa a “super agent.” It was clear the company wanted to refute perceptions that the existing Alexa lacked smarts.”