OpenAI Is Looking Beyond ChatGPT For Smarter AI: But Why ...

Artificial intelligence companies like OpenAI are seeking to overcome unexpected delays and challenges in the pursuit of ever-bigger large language models by developing training techniques that use more human-like ways for algorithms to think. A dozen AI scientists, researchers, and investors told Reuters they believe that these techniques, which are behind OpenAI's recently released o1 model, could reshape the AI arms race, and have implications for the types of resources that AI companies have an insatiable demand for, from energy to types of chips.

The Limitations of Scaling Up

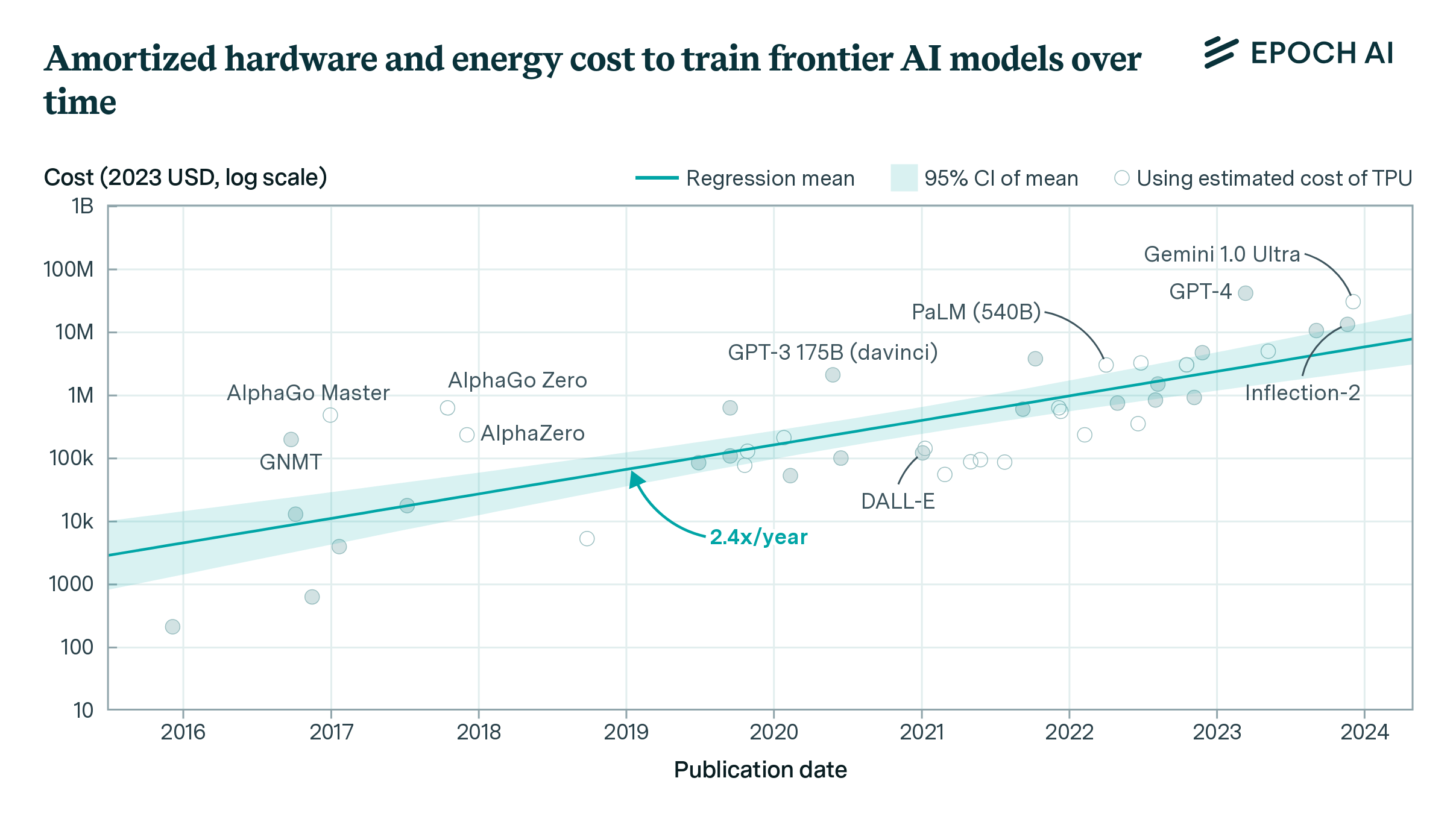

Since the release of the viral ChatGPT chatbot two years ago, technology companies, whose valuations have benefited greatly from the AI boom, have publicly maintained that “scaling up” current models by adding more data and computing power will consistently lead to improved AI models. But now, some of the most prominent AI scientists are speaking out on the limitations of this “bigger is better” philosophy. Ilya Sutskever, co-founder of AI labs Safe Superintelligence (SSI) and OpenAI, told Reuters recently that results from scaling up pre-training have plateaued.

Sutskever declined to share more details on how his team is addressing the issue, other than saying SSI is working on an alternative approach to scaling up pre-training. Behind the scenes, researchers at major AI labs have been running into delays and disappointing outcomes in the race to release a large language model that outperforms OpenAI’s GPT-4 model, which is nearly two years old.

Overcoming Challenges

To overcome these challenges, researchers are exploring “test-time compute,” a technique that enhances existing AI models during the “inference” phase, when the model is being used rather than trained. OpenAI has embraced this technique in its newly released o1 model, which can think through problems in a multi-step manner, similar to human reasoning. The o1 model also uses data and feedback curated from PhDs and industry experts.

The Competitive Landscape

At the same time, researchers at other top AI labs, including Anthropic, xAI, and Google DeepMind, have been working to develop their own versions of the technique. This shift could alter the competitive landscape for AI hardware, thus far dominated by insatiable demand for Nvidia’s AI chips.

Prominent venture capital investors are taking notice of the transition and weighing the impact on their expensive bets. Demand for Nvidia’s AI chips has fueled its rise to become the world’s most valuable company, surpassing Apple. Nvidia could face more competition in the inference market as the technique behind the o1 model gains traction.

Overall, the AI industry is witnessing a shift towards new techniques that focus on enhancing existing models rather than just scaling up. This shift affects not only the development of AI models but also the demand for AI hardware, signaling a new era in the AI arms race.