Alibaba's Qwen3 Outperforms OpenAI's o1 and o3-mini, on Par With Google's Gemini 2.5 Pro Models

Chinese giant Alibaba has released the Qwen3 family of open-weight AI models. The Qwen3 family includes variants with different sizes, ranging from 0.6B to 235B parameters. These models can be deployed locally using tools like Ollama and LM Studio or accessed through a web browser via Qwen Chat.

Performance Comparison

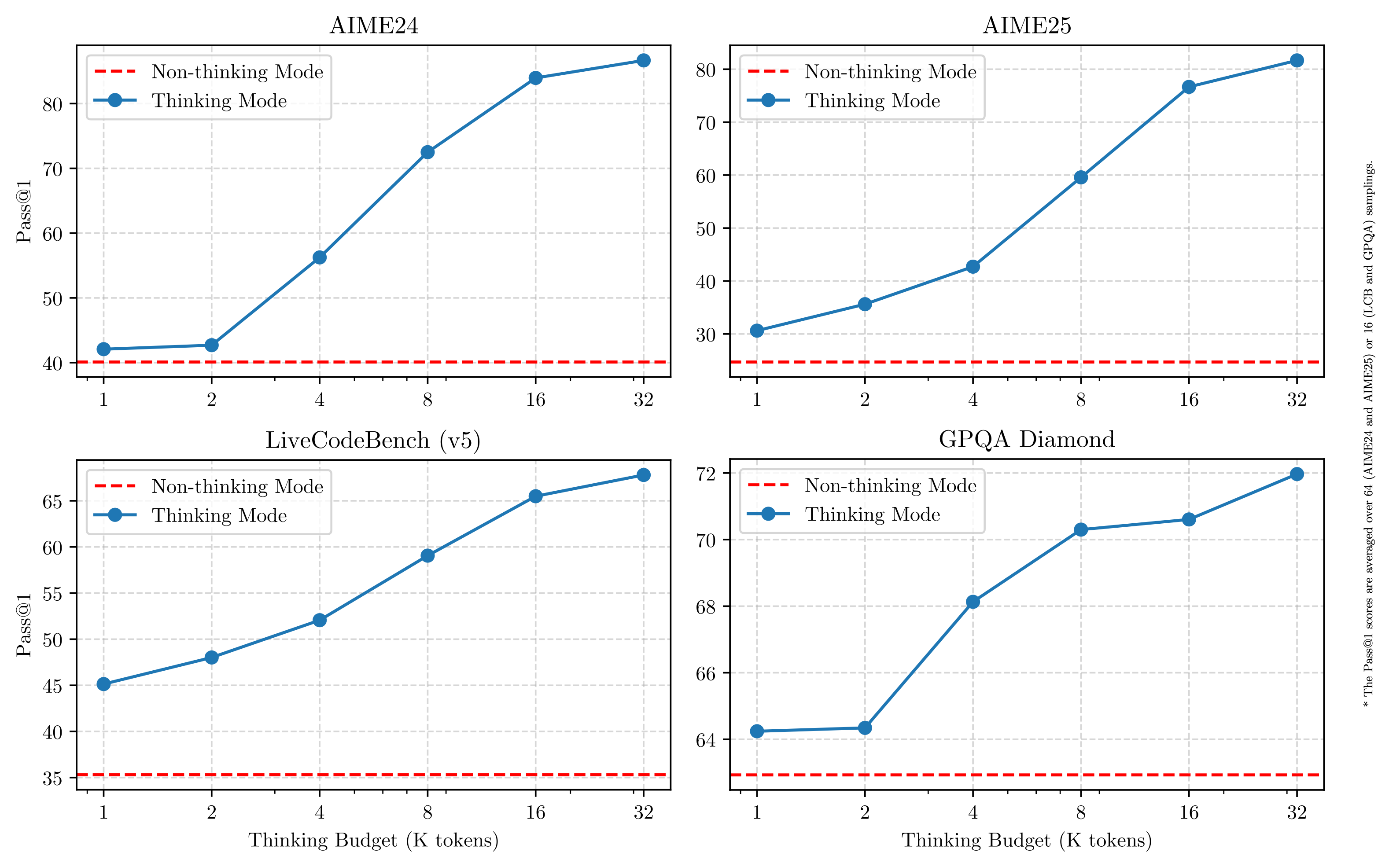

Qwen3's 235B parameter model has shown remarkable performance on various benchmarks, outperforming OpenAI's o1 and o3-mini models in mathematical and programming tasks. It also demonstrates performance parity with Google's Gemini 2.5 Pro models on several benchmarks. However, it trails OpenAI's newly released o4-mini model on certain benchmarks.

In coding benchmarks, Qwen3's 235B model scored 70.7%, while the o4-mini model scored 80%. Similarly, on the AIME 2024 math benchmark, OpenAI's o4-mini model scored 94%, ahead of Qwen3's 235B model, which scored 85.7%.
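To make the size of these gaps concrete, the short sketch below subtracts the scores quoted above. The dictionary layout and the `gap_points` helper are illustrative assumptions; only the four scores come from the article.

```python
# Scores quoted in the article, in percent.
scores = {
    "coding": {"Qwen3-235B": 70.7, "o4-mini": 80.0},
    "AIME 2024": {"Qwen3-235B": 85.7, "o4-mini": 94.0},
}


def gap_points(benchmark: str) -> float:
    """Return o4-mini's lead over Qwen3-235B in percentage points."""
    entry = scores[benchmark]
    # Round to one decimal to hide floating-point noise in the subtraction.
    return round(entry["o4-mini"] - entry["Qwen3-235B"], 1)


for name in scores:
    print(f"{name}: o4-mini leads by {gap_points(name)} points")
```

On both benchmarks the difference is roughly eight to nine percentage points.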

Benchmark scores for other newly released models can be found on Artificial Analysis. Additionally, other variants of the Qwen3 models have shown improvements over their predecessors, with the 30B parameter variant outperforming DeepSeek-V3 and OpenAI's GPT-4o models on benchmarks.

Expert Opinion

Simon Willison, co-creator of the Django web framework, shared his thoughts on the Qwen3 release in a blog post. He praised the coordination of the release across the LLM ecosystem, highlighting how well the models work with popular LLM serving frameworks. Willison also noted Alibaba's efforts to ensure compatibility with various systems and devices.

Willison also commented on the diverse sizes of the Qwen3 models, mentioning that smaller variants like the 0.6B and 1.7B models can run smoothly on devices like iPhones, while larger models like the 32B variant can fit on standard laptops with room to spare.
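As a rough illustration of why parameter count decides which device a model fits on, the sketch below estimates the memory needed to hold the weights alone: parameter count times bits per weight, divided by eight. The `weight_memory_gb` helper and the choice of 16-bit and 4-bit precisions are assumptions for illustration, not figures from Willison's post, and real memory use is higher once the KV cache and runtime overhead are included.

```python
def weight_memory_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights alone, in GB (1 GB = 1e9 bytes)."""
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


# Parameter counts (in billions) for the variants mentioned above.
variants = {"0.6B": 0.6, "1.7B": 1.7, "32B": 32.0}

for name, params in variants.items():
    for bits in (16, 4):  # assumed precisions: half precision and 4-bit quantization
        size = weight_memory_gb(params, bits)
        print(f"Qwen3 {name} at {bits}-bit: ~{size:.1f} GB of weights")
```

Under these assumptions, the 32B variant needs about 16 GB for its weights at 4-bit precision, while the 0.6B and 1.7B variants stay under 1 GB at 4-bit. That is in line with the phone and laptop claims above.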

Recent Updates

The Qwen3 family of models succeeds the Qwen2.5 models. Recently, Alibaba introduced the 32-billion-parameter QwQ model, which showed performance comparable to DeepSeek-R1 despite its smaller size.

Furthermore, the company launched the QwQ-Max-Preview model, an iteration of Qwen2.5 Max that specializes in mathematics and coding tasks.