Can ChatGPT reason? This Apple AI study might have the answer

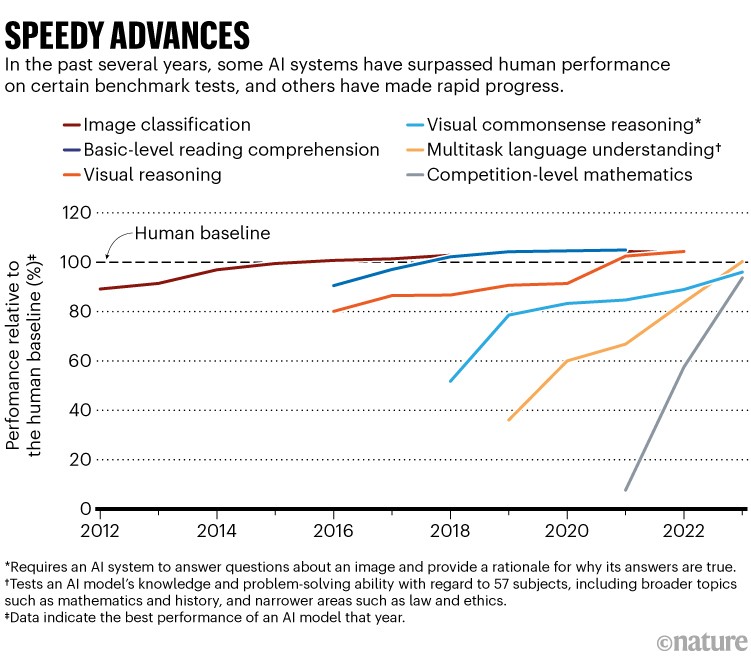

Companies like OpenAI and Google have been touting the advancements in generative AI experiences, suggesting that a breakthrough is on the horizon. The recent upgrade of ChatGPT, known as o1-preview, aims to showcase the next-gen experience. This upgraded version, available to ChatGPT Plus and premium subscribers, is claimed to have the ability to reason. The promise of such an AI tool is its potential usefulness in tackling complex questions that demand intricate reasoning.

However, a new AI paper from Apple researchers challenges this notion, suggesting that AI models like ChatGPT o1 and other generative models might not actually possess reasoning capabilities. Instead, these models are believed to be pattern matchers, drawing solutions from their training datasets. While they excel at providing answers to problems, it is mainly due to recognizing similar patterns and predicting outcomes based on past exposure.

Apple's AI Study on Reasoning Performance

The study conducted by Apple researchers, available for reference here, explores the limitations of various Large Language Models (LLMs) in terms of reasoning. The experiments involved testing open-source models like Llama, Phi, Gemma, and Mistral, as well as proprietary models including ChatGPT o1-preview, o1 mini, and GPT-4o.

The key findings of the study indicate that LLMs struggle when it comes to genuine logical reasoning, as they tend to replicate the reasoning steps witnessed during their training. Apple introduced the GSM-Symbolic benchmark, comprising over 8,000 grade-school math word problems, to evaluate the reasoning performance of AI models. Simple alterations to math problems, such as changing characters' names, relationships, and numbers, significantly impacted the models' reasoning abilities.

Challenging the AI Models

One of the tests involved adding inconsequential statements to math problems, which should not affect the overall solution. Surprisingly, the inclusion of irrelevant information led to performance drops of up to 65% across different models. Even ChatGPT o1-preview struggled, experiencing a 17.5% drop in performance compared to the baseline.

The study also questioned the reasoning capabilities of AI models like ChatGPT o1 and others, emphasizing the need for further research and development in this area.

Notably, Apple's research raises questions about the true understanding of mathematical concepts by these AI models. Through various experiments and benchmarks like GSM-NoOp, Apple aimed to test the reasoning capabilities of LLMs and explore their limitations.

Implications and Future Directions

Apple's study does not aim to discredit competitors but rather seeks to evaluate the current state of genAI technology in terms of reasoning abilities. While Apple has not proposed an alternative to ChatGPT with improved reasoning capabilities, the research opens avenues for refining the training methods of LLMs, particularly in fields that require precision and accuracy.

Going forward, it will be intriguing to observe how industry players like OpenAI, Google, and Meta respond to Apple's findings and whether they can address the challenges posed in reasoning performance. The insights from Apple's study could potentially shape the future development of AI models, emphasizing the importance of incorporating reasoning capabilities effectively.