DeepSeek's Latest AI Release Sparks Debate Over True Open ...

DeepSeek, a company known for its open-source contributions, has unveiled DeepSeek-V3/R1, the latest update to its AI system. The release has drawn wide attention in the AI community for its technical advances, but the extent of its transparency has come under scrutiny. A closer look suggests that selective disclosures and notable omissions undercut the company's stated commitment to genuine open-source transparency.

Technical Advancements and Milestones

The DeepSeek-V3/R1 update showcases several notable engineering achievements. Chief among them are cross-node Expert Parallelism, which distributes a mixture-of-experts model's expert sub-networks across multiple GPU nodes, and the overlapping of inter-node communication with computation, so that GPUs keep working while data is in transit. The reported efficiency is particularly striking: each H800 GPU node processes an average of roughly 73.7k input tokens per second during the prefilling stage. Figures like these mark a substantial step forward in large-scale inference and suggest the system could serve demanding production workloads.
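To illustrate why overlapping communication with computation matters, here is a toy two-stage pipeline model. It is an illustrative assumption, not DeepSeek's actual scheduler: each micro-batch is assumed to need a compute phase followed by a communication phase, and the function names and timings are hypothetical.

```python
# Toy two-stage pipeline model (an illustrative assumption, not DeepSeek's
# scheduler). Each micro-batch needs a compute phase, then a communication
# phase (e.g. shipping expert outputs to another node). Units are arbitrary.

def sequential_time(compute, comm):
    """No overlap: the GPU idles while each transfer completes."""
    return sum(c + m for c, m in zip(compute, comm))


def overlapped_time(compute, comm):
    """Overlap: while micro-batch i is in transit, micro-batch i+1 computes.

    Returns the time at which the last transfer finishes.
    """
    compute_done = 0
    comm_done = 0
    for c, m in zip(compute, comm):
        compute_done += c  # compute phases run back-to-back on the GPU
        # a transfer starts once its micro-batch is computed and the link is free
        comm_done = max(compute_done, comm_done) + m
    return comm_done


compute = [4, 4, 4, 4]  # hypothetical per-micro-batch compute times
comm = [3, 3, 3, 3]     # hypothetical per-micro-batch transfer times
print(sequential_time(compute, comm))  # 28
print(overlapped_time(compute, comm))  # 19
```

With these made-up numbers, overlap hides almost all of the transfer time behind computation; only the final transfer remains exposed (16 + 3 = 19 units versus 28 without overlap).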

The system’s aggregate throughput figures, which imply capacity on the order of billions of tokens per day, further underline its efficiency. This level of performance demonstrates the company’s engineering strength and the system’s suitability for high-demand environments.
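Getting from a per-second rate to "billions of tokens daily" is simple arithmetic. The sketch below assumes a single node sustaining the 73.7k tokens-per-second figure around the clock, which is an upper-bound simplification since real load varies over the day; the function name `tokens_per_day` is hypothetical. Under that assumption, one node alone would account for roughly 6.4 billion tokens per day.

```python
# Back-of-the-envelope conversion from a per-second rate to a daily volume.
# Assumption: the node sustains the quoted rate for a full 24 hours, which
# overstates real-world volume because load varies over the day.
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def tokens_per_day(tokens_per_second, nodes=1):
    """Daily token volume if `nodes` nodes each run at `tokens_per_second`."""
    return tokens_per_second * SECONDS_PER_DAY * nodes


per_node = tokens_per_day(73_700)
print(f"{per_node:,} tokens per node per day")  # 6,367,680,000
```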

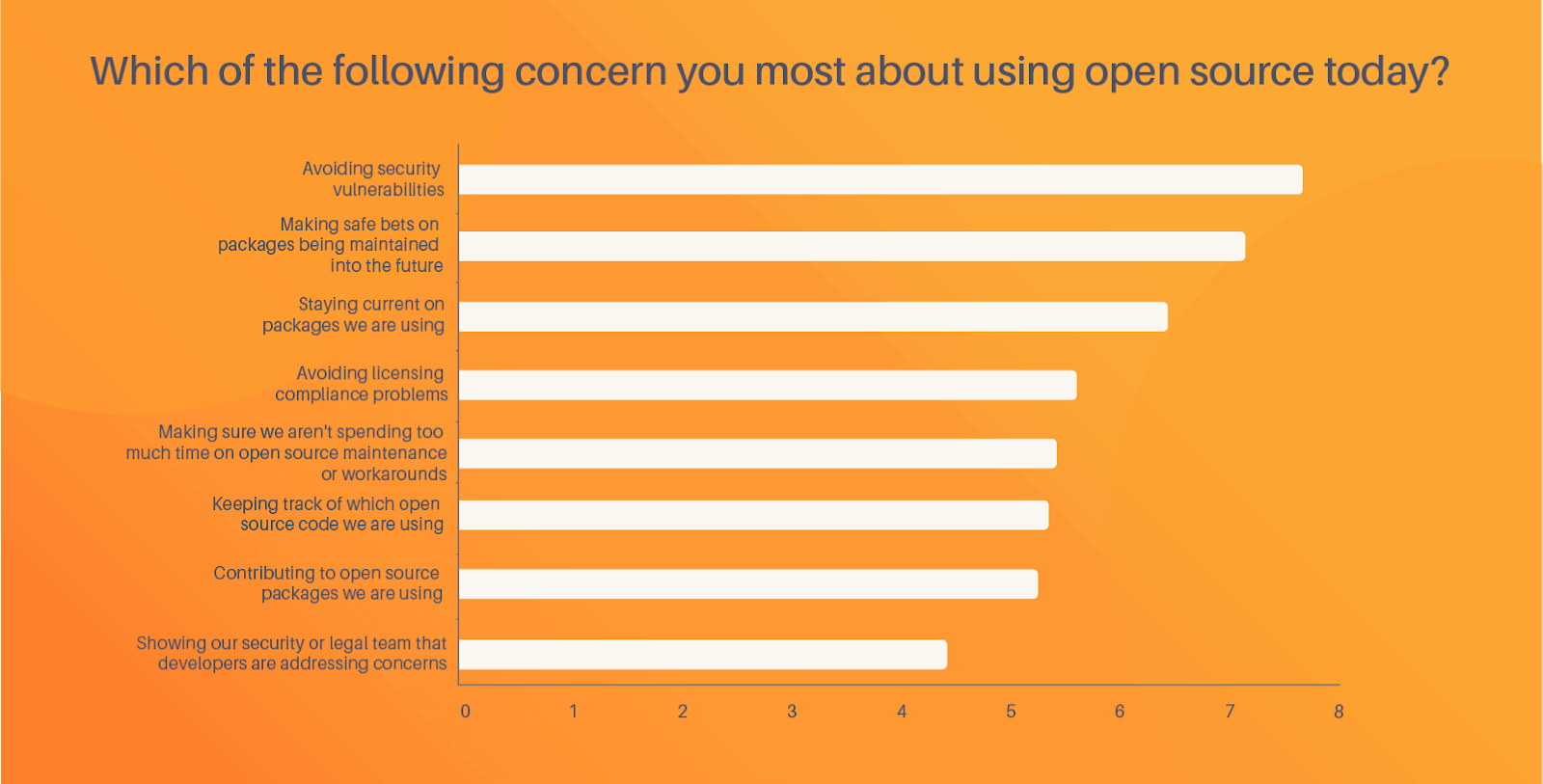

Transparency Concerns

Despite these impressive metrics, the release has raised significant concerns about how much DeepSeek is actually disclosing. Key components, such as the bespoke load-balancing algorithms that are critical to understanding system performance, remain undisclosed. This selective transparency has fueled skepticism within the AI community and prevents a full understanding of how the system works. The lack of comprehensive documentation also makes independent verification difficult, which undermines confidence in the reported metrics.

Without detailed documentation of data usage and training procedures, it is also difficult to assess the system’s ethical soundness and overall integrity. Important details about datasets, filtering processes, and bias-mitigation steps are conspicuously absent, which rules out an in-depth evaluation of the system’s claims.

Open-Source Integrity Debate

The selective openness of the DeepSeek-V3/R1 release calls into question DeepSeek’s positioning as a leader of the open-source community. The company has released parts of its infrastructure and some model weights under permissive licenses, but the restrictions on commercial use imposed by a custom model license contradict its image as an open-source pioneer.

This tension reflects a broader industry trend known as “open-washing,” in which companies create an illusion of transparency by disclosing selected information while withholding critical elements. The practice erodes trust and undermines the principles of genuine open-source development.

Comparison with Industry Practices

DeepSeek’s approach to open-source transparency is not unique; it resembles practices at industry giants such as Meta, which has also faced scrutiny for limited data transparency in its AI work.

One notable difference, however, is that Meta publishes comprehensive model cards and ethical guidelines, which give outside parties a framework for evaluating the ethical implications of its AI systems. These documents add a layer of accountability that is currently missing from DeepSeek’s latest release.

The Call for Complete Transparency

Within the AI community, comprehensive transparency is increasingly viewed as a cornerstone of trust and collaboration. Genuine transparency requires candid discussion of both a system’s achievements and its limitations. By sharing information selectively, DeepSeek undermines its own narrative of open innovation and accountability.

To lead in open-source innovation, companies like DeepSeek need a more balanced approach: showcasing their technical achievements while also giving a full account of their data practices and ethical considerations, including the limitations and challenges they encountered during development, not just the successes.