OpenAI Shifts Its Focus To Superintelligence - ControlF5 Software

OpenAI CEO Sam Altman recently shared that the company is setting its sights on achieving superintelligence, signaling a significant shift in its ambitions. In a personal blog post, Altman expressed confidence that OpenAI already understands how to build artificial general intelligence (AGI) and is now ready to advance toward more sophisticated systems.

“We love our current products, but we are here for the glorious future,” Altman wrote. “Superintelligent tools could massively accelerate scientific discovery and innovation well beyond what we are capable of doing on our own, and in turn massively increase abundance and prosperity.”

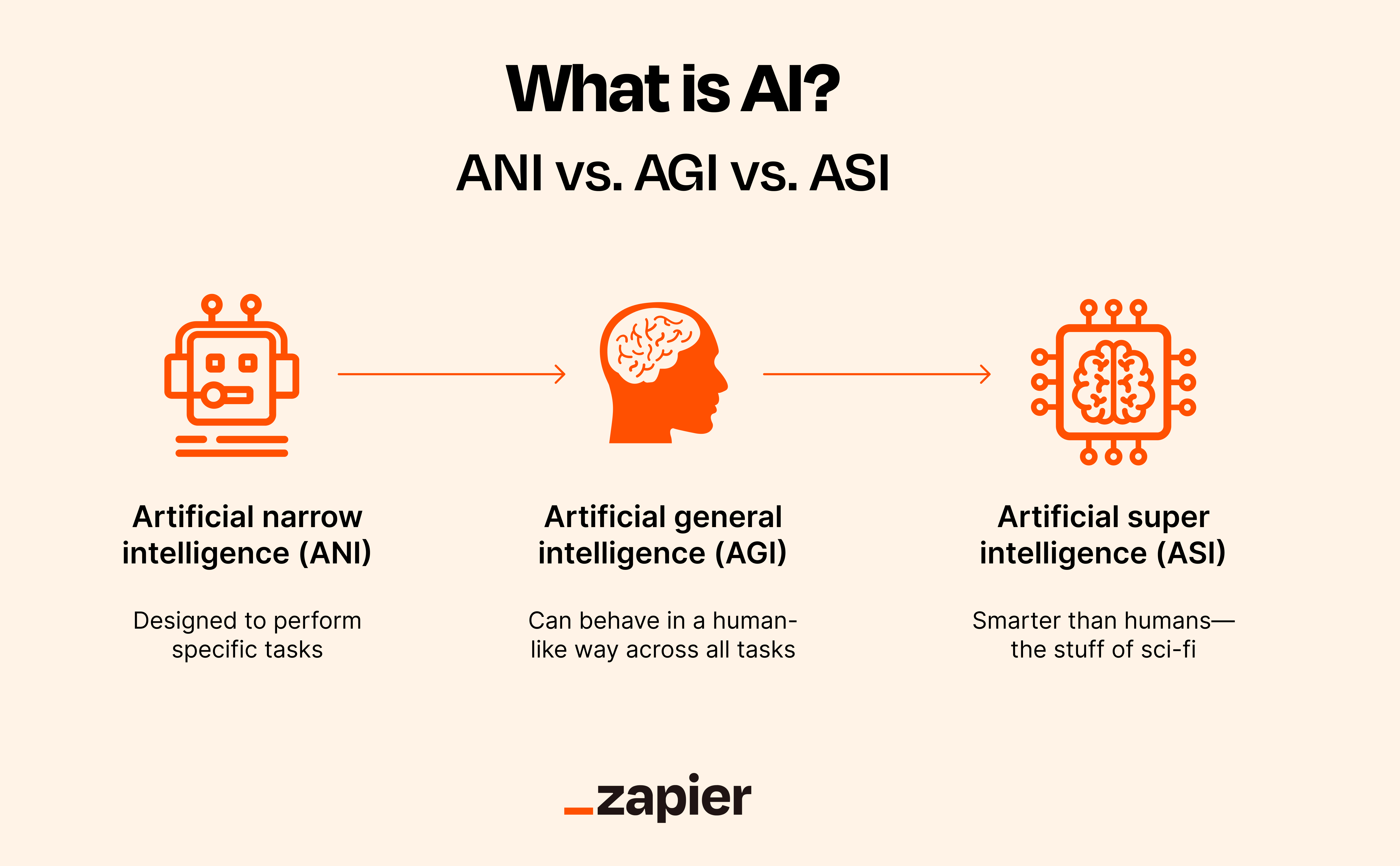

Artificial General Intelligence (AGI) Definition

Artificial general intelligence (AGI) remains a loosely defined concept. OpenAI defines it as “highly autonomous systems that outperform humans at most economically valuable work.” Meanwhile, Microsoft, one of OpenAI’s primary investors, uses a more profit-driven benchmark for AGI: systems that can generate at least $100 billion in profits. According to an agreement between the two companies, once OpenAI achieves this milestone, Microsoft will lose exclusive access to its technology.

Altman’s blog post didn’t clarify which definition he was referring to, but it seems more likely he had OpenAI’s original AGI definition in mind. He hinted that autonomous AI agents could soon play a transformative role in the workforce, fundamentally altering how companies operate.

Challenges and Optimism

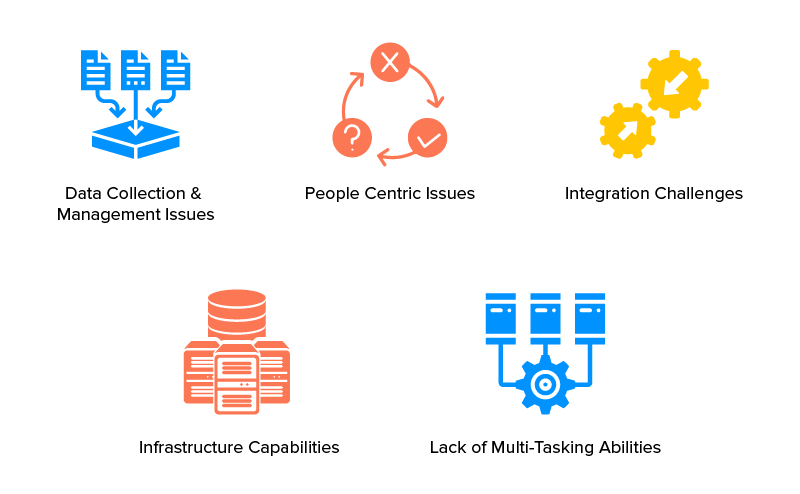

Despite Altman’s optimism, today’s AI systems still face significant limitations. They can “hallucinate” — generating incorrect or nonsensical outputs — and often make errors that humans easily spot. Additionally, these systems are expensive to run, posing practical challenges for widespread deployment.

Altman appears confident that these issues can be addressed in the near future. However, he also acknowledged that timelines for AI advancements can be unpredictable.

AI Safety Concerns

As OpenAI turns its attention to superintelligence, questions around AI safety remain crucial. The company has previously acknowledged that safely transitioning to a world with superintelligent AI is “far from guaranteed.” In a blog post from July 2023, OpenAI admitted it doesn’t yet have a reliable way to control superintelligent systems or prevent them from going rogue.

“[H]umans won’t be able to reliably supervise AI systems much smarter than us, and so our current alignment techniques will not scale to superintelligence,” the post noted.

Company Decisions and Criticisms

Despite these concerns, OpenAI has made some controversial decisions regarding its internal structure. The company has disbanded teams focused on AI safety, including one dedicated to the safe development of superintelligent systems, and several key safety researchers have left. Some of them cited OpenAI’s increasing focus on commercial success as a reason for leaving.

Future Challenges

In response to concerns that OpenAI may not be focusing enough on AI safety, Altman has defended the company’s track record. Even so, given the high stakes involved in developing superintelligence, critics argue that OpenAI must balance its pursuit of innovation with a stronger commitment to safety.

As the company navigates this complex path, the world will be watching to see whether OpenAI’s vision of a future shaped by superintelligent systems will come with the safeguards needed to ensure such advancements benefit humanity as a whole.