Naveen Rao Congratulates MosaicML Team for Forbes AI 50 List

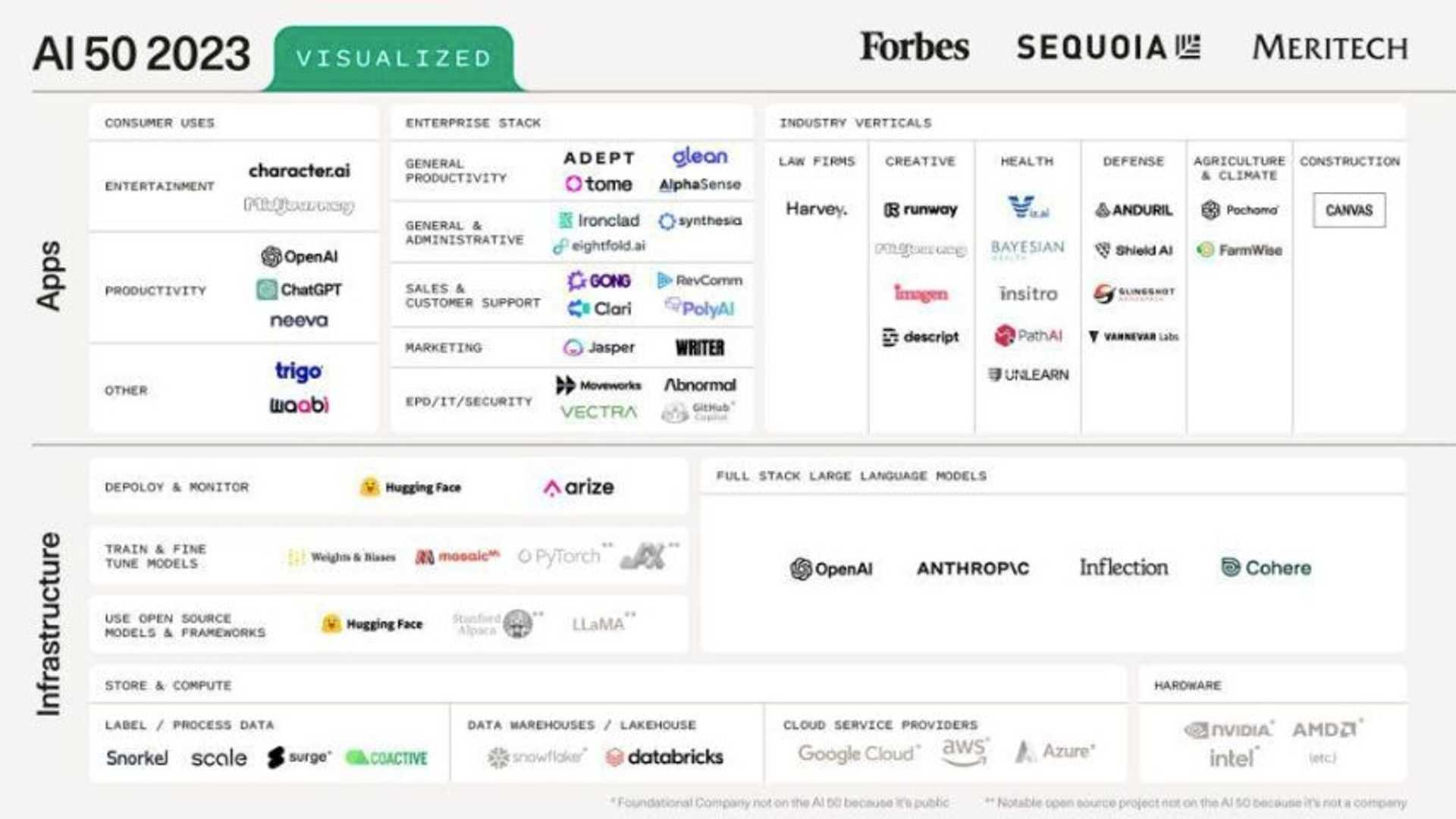

Building the components of the new generative AI world is hard work, and the MosaicML team has been working hard to make it possible. Naveen Rao, CEO and co-founder of MosaicML, congratulated the team on being included in this year's Forbes AI 50 list.

The MosaicML platform lets you train, fine-tune, and deploy generative AI with full data privacy and total model ownership. Forbes has recognized the team's effort, and Naveen Rao thanked MosaicML's customers, community, and partners for traveling with the team on this journey.

MosaicML Releases MPT-7B: A Family of Open-Source LLMs

MosaicML has introduced MPT, a new family of open-source, commercially usable LLMs. Trained on 1T tokens of text and code, MPT models match and, in many ways, surpass LLaMA-7B. This release includes four models: MPT-Base, Instruct, Chat, and StoryWriter.

The release is intended to demonstrate MosaicML's tools for training, fine-tuning, and serving custom LLMs. MosaicML aims to make building industrial-strength custom models easy with these tools, which Replit used to train its SOTA code generation model last week.

MosaicML Inference Released

MosaicML has released MosaicML Inference to make large models accessible to all. For organizations concerned about data privacy and cost, building and hosting their own model can provide a secure, cost-effective solution. With MosaicML Inference, you can build your own model and host it in your VPC, or use one of MosaicML's curated open-source models.

H100 GPU Training Speedup

MosaicML has received early access to the new H100 GPU, thanks to their friends at CoreWeave and NVIDIA. With faster memory (HBM3), support for FP8, and the new Transformer Engine library, the H100 sets a new bar for accelerating transformer-based models for both training and inference.

Results showed a 3x speedup when training GPT models with 7 billion or more parameters on the H100 compared with the A100. Despite the H100's higher pricing, the overall cost to train is lower thanks to this speedup.
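The cost claim above comes down to simple arithmetic: total cost is GPU-hours multiplied by the hourly price, so a faster GPU is cheaper overall whenever its price premium is smaller than its speedup. The sketch below illustrates this in Python. The hourly prices and the A100 GPU-hour count are hypothetical placeholders chosen for illustration, not figures from MosaicML, CoreWeave, or NVIDIA; only the 3x speedup comes from the text.

```python
def training_cost(gpu_hours: float, price_per_gpu_hour: float) -> float:
    """Total cost of a run: GPU-hours consumed times the hourly price."""
    return gpu_hours * price_per_gpu_hour


def faster_gpu_is_cheaper(speedup: float, old_price: float, new_price: float) -> bool:
    """The faster GPU wins on cost when its price ratio is below its speedup."""
    return new_price / old_price < speedup


# Hypothetical inputs (assumptions for illustration only).
A100_PRICE = 2.00        # dollars per GPU-hour, made up
H100_PRICE = 4.00        # dollars per GPU-hour, made up
A100_GPU_HOURS = 3000.0  # GPU-hours for some training run on A100, made up

# Speedup reported in the text for 7B+ parameter GPT models.
SPEEDUP = 3.0

a100_cost = training_cost(A100_GPU_HOURS, A100_PRICE)
h100_cost = training_cost(A100_GPU_HOURS / SPEEDUP, H100_PRICE)

print(f"A100 cost: ${a100_cost:,.0f}")
print(f"H100 cost: ${h100_cost:,.0f}")
print("H100 cheaper:", faster_gpu_is_cheaper(SPEEDUP, A100_PRICE, H100_PRICE))
```

With these placeholder prices the H100 costs twice as much per hour but finishes in a third of the time, so the run is cheaper overall. The conclusion would flip only if the H100 price were more than three times the A100 price.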

MosaicML Open-Sources a Recipe for Training the Stable Diffusion 2 Base Model

Training your own diffusion models can be expensive and complex. MosaicML open-sourced a recipe for training the Stable Diffusion 2 Base model from scratch for just under $50k in about a week. Training your own diffusion model on data you control can help reduce the risk of violating intellectual property laws.