Fine-Tuning OPT-350M For Extractive Summarization – CyberIQs

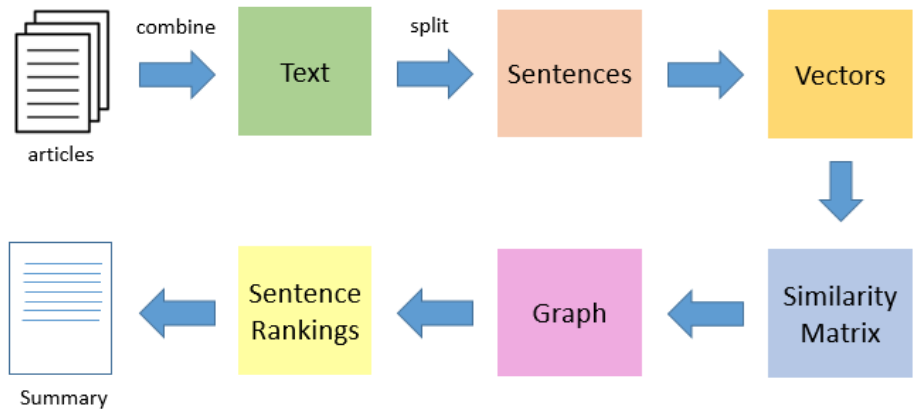

In this article, we discuss fine-tuning the OPT-350M language model for extractive summarization. The OPT (Open Pre-trained Transformer) series, developed by Meta AI, is a family of decoder-only language models trained for next-token prediction and released in a range of sizes. One of its key advantages is its open availability, which makes it an openly accessible alternative to models like GPT-3.

To delve deeper into the fine-tuning process of OPT-350M for extractive summarization, you can refer to this article.
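As a rough illustration of what such a setup might look like, here is a minimal sketch assuming the Hugging Face `transformers` library and the `facebook/opt-350m` checkpoint. The helper names `build_example` and `load_opt`, the `"\n\nSummary: "` separator, and the training-text layout are hypothetical choices for illustration, not details taken from the referenced article. Because OPT is a next-token predictor, each training example is written as one text sequence: the article, a separator, then the summary (for extractive summarization, sentences copied from the article).

```python
def build_example(article: str, summary: str,
                  sep: str = "\n\nSummary: ", eos: str = "</s>") -> dict:
    """Format one (article, summary) pair as a single causal-LM training text.

    `sep` is an assumed prompt separator. `eos` defaults to OPT's
    end-of-sequence token string; in practice, pass tokenizer.eos_token.
    """
    prompt = article.strip() + sep
    return {"prompt": prompt, "text": prompt + summary.strip() + eos}


def load_opt(model_name: str = "facebook/opt-350m"):
    """Load the tokenizer and causal-LM weights (downloads on first use)."""
    # Imported lazily so build_example works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForCausalLM.from_pretrained(model_name)
    return tokenizer, model


if __name__ == "__main__":
    example = build_example(
        "OPT is a family of open models. It was released by Meta AI.",
        "OPT is a family of open models.",
    )
    print(example["text"])
```

Keeping the prompt separate from the full text makes it possible to compute the loss only on the summary tokens during fine-tuning, which is one common design choice rather than a requirement.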

