Towards Understanding Large Language Models: Fine-Tuning

Large language models (LLMs) have become some of the most prominent technologies of recent years. Tools such as ChatGPT, Character.ai, and Bard are just a few examples of the rapid progress in this field. These models have been seamlessly integrated into our daily routines, and their development shows no sign of slowing down. The challenge now lies in building customized tools that meet specific requirements.

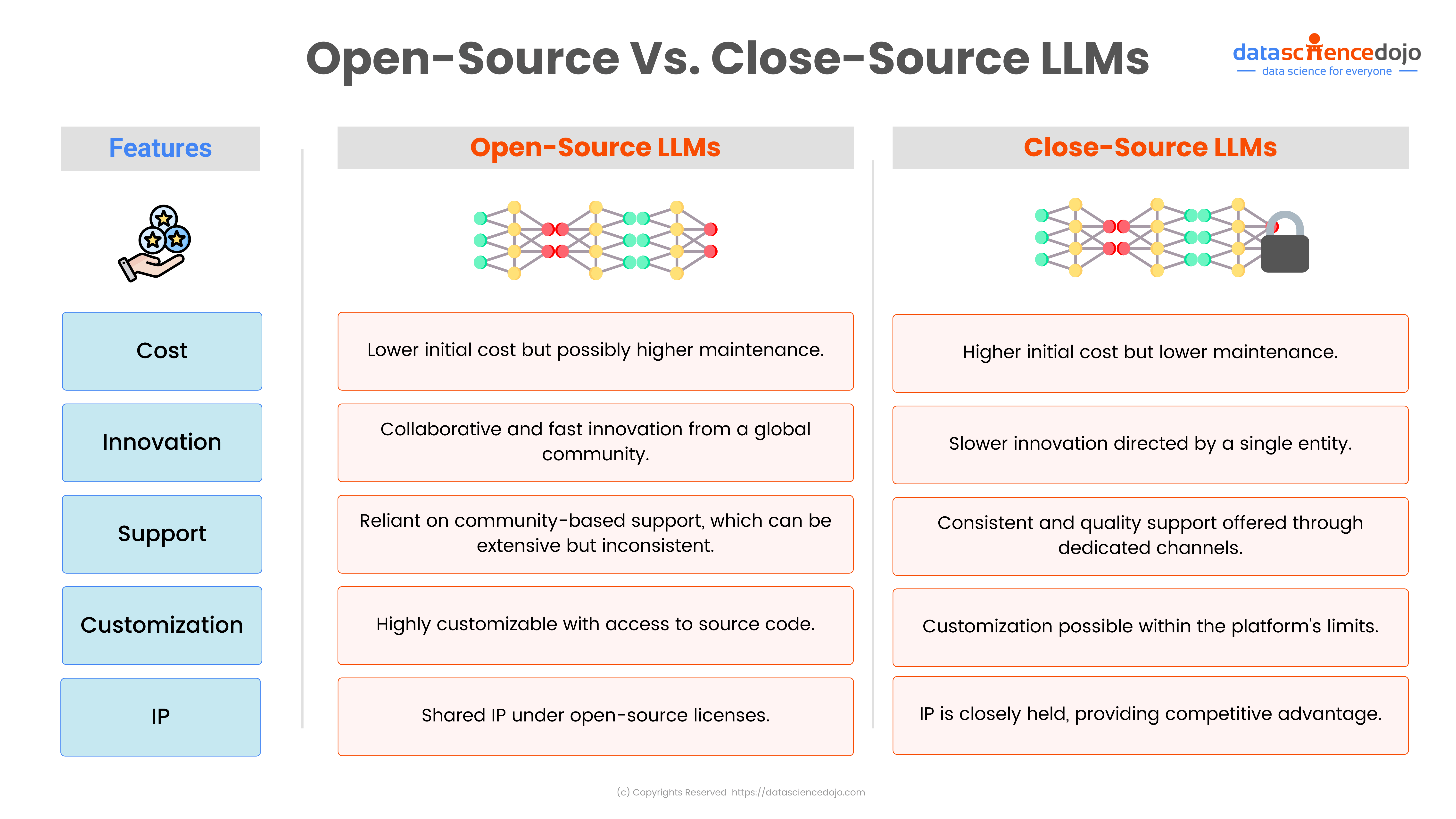

One of the remarkable aspects of computer science and data science is their vibrant open-source community, made up of people dedicated to developing innovative solutions and sharing them so that everyone can benefit. LLMs have a community of their own that actively shares open-source algorithms and models; notable recent examples include Microsoft's Phi-2 and Mistral AI's Mixtral 8x7B. Building on these open-source models lets users create tools tailored to their unique needs without having to start from scratch.


Computer Science and Applied Mathematics Engineer, sharing thoughts and opinions. https://www.linkedin.com/in/mohamed-mamoun-berrada/

This article focuses on fine-tuning, a technique that plays a pivotal role in adapting pretrained open-source models to specific needs. By the end, readers should have a solid understanding of this technique and of why it matters when tailoring large language models to particular tasks.
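To make the core idea concrete, here is a deliberately tiny sketch of what fine-tuning means mechanically: training continues from parameters already learned on a large, general dataset, instead of starting from zero. It is a toy, not an LLM. A one-variable linear model stands in for the network, plain gradient descent on mean squared error stands in for a real training loop, and the datasets, learning rate, and step counts are invented assumptions chosen only for illustration.

```python
# Toy illustration of fine-tuning. Assumption: a linear model y = w*x + b
# stands in for an LLM; all data and hyperparameters are invented.

def mse(w, b, data):
    """Mean squared error of the model y = w*x + b on (x, y) pairs."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def train(w, b, data, lr, steps):
    """Run full-batch gradient descent on MSE, starting from (w, b)."""
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# "Pretraining" data: many examples of a general pattern, y = 2x + 1.
pretrain_data = [(k / 10, 2 * (k / 10) + 1) for k in range(-50, 51)]

# Task-specific data: only four examples of a related pattern, y = 2.2x + 1.5.
task_data = [(x, 2.2 * x + 1.5) for x in (-1.5, -0.5, 0.5, 1.5)]

# Pretrain once from zero, with a long schedule on the large dataset.
w_pre, b_pre = train(0.0, 0.0, pretrain_data, lr=0.05, steps=500)

# Fine-tune: start from the pretrained weights, short run on the task data.
w_ft, b_ft = train(w_pre, b_pre, task_data, lr=0.05, steps=10)

# Baseline: the same short run on the task data, but starting from scratch.
w_scratch, b_scratch = train(0.0, 0.0, task_data, lr=0.05, steps=10)

print(f"pretrained only : task MSE = {mse(w_pre, b_pre, task_data):.4f}")
print(f"fine-tuned      : task MSE = {mse(w_ft, b_ft, task_data):.4f}")
print(f"from scratch    : task MSE = {mse(w_scratch, b_scratch, task_data):.4f}")
```

Under these assumptions, the fine-tuned model ends up with a much lower task error than both the pretrained model alone and the model trained from scratch for the same ten steps, because it starts close to the target pattern. Fine-tuning an LLM rests on the same principle, applied to billions of parameters instead of two.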