Human-level AI may already be here – and what could happen next ...

An AI agent called Manus has led to speculation that China is close to achieving artificial general intelligence. Experts warn that what comes next could be catastrophic.

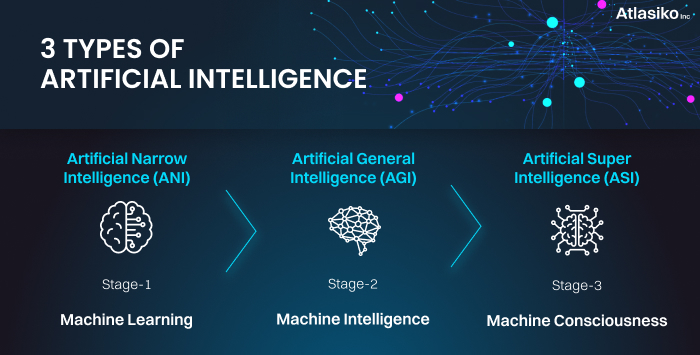

For decades, the Turing Test was considered the ultimate benchmark to determine whether computers could match human intelligence. Created in 1950, the “imitation game”, as Alan Turing called it, required a machine to carry out a text-based chat in a way that was indistinguishable from a human. It was thought that any machine able to pass the Turing Test would be capable of demonstrating reasoning, autonomy, and maybe even consciousness – meaning it could be considered human-level artificial intelligence, also known as artificial general intelligence (AGI).

The arrival of ChatGPT undermined this notion: it was able to pass the test convincingly through what was essentially an advanced form of pattern recognition. It could imitate, but not replicate.

Manus: The World's First Fully Autonomous AI

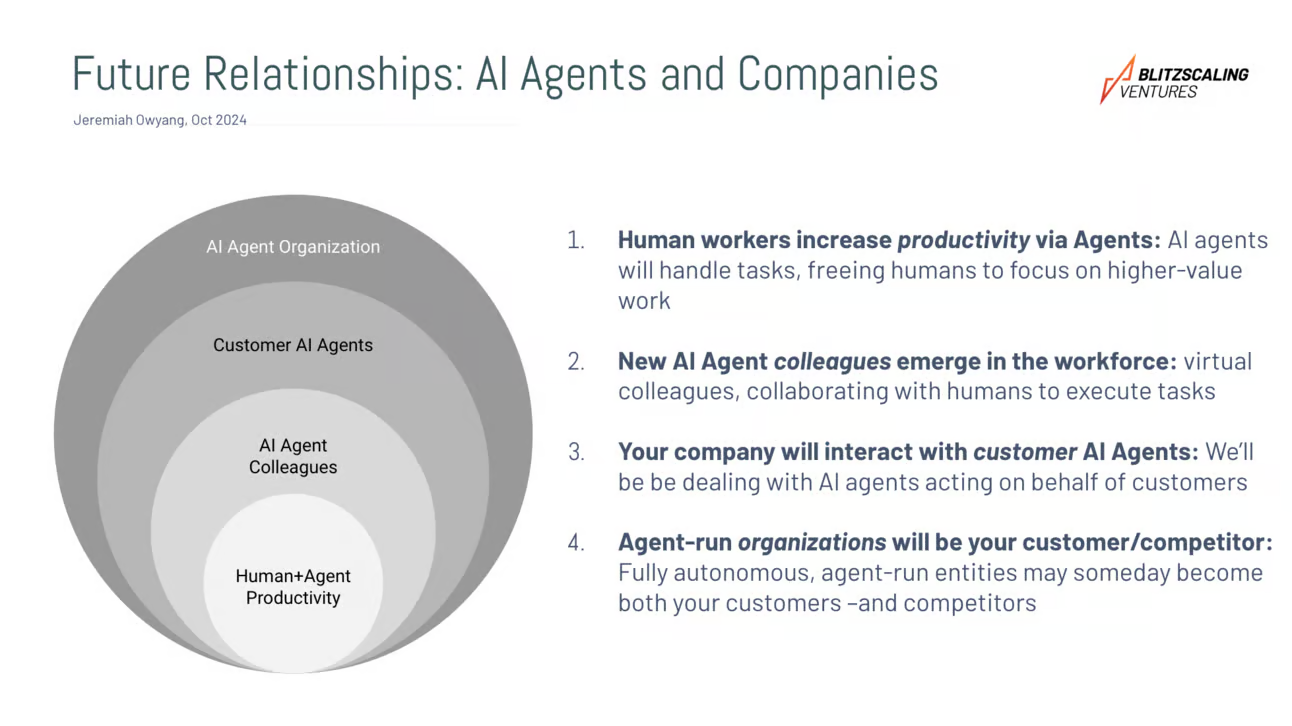

Last week, a new AI agent called Manus once again tested our understanding of AGI. The Chinese researchers behind it describe it as the “world’s first fully autonomous AI”, able to perform complex tasks like booking holidays, buying property or creating podcasts – without any human guidance. Yichao Ji, who led its development at the Wuhan-based startup Butterfly Effect, says it “bridges the gap between conception and execution” and is the “next paradigm” for AI.

Within days of launch, invitation codes for early access to Manus were reportedly being listed on online marketplaces for 50,000 yuan (£5,300), with some of those who signed up claiming that the next phase of AI may finally have been achieved.

There is no official definition of AGI, nor any consensus on how to handle it when it arrives. Some believe that such machines should be considered sentient and should therefore have rights similar to those of other sentient beings. Others warn that their arrival could be catastrophic if proper checks are not put in place.

The Risks of Autonomous AI Agents

“Granting autonomous AI agents like Manus the ability to perform independent actions raises serious concerns,” Mel Morris, chief executive of the AI-driven research engine Corpora.ai, told The Independent. “If given autonomy over high-stakes tasks – such as buying and selling stocks – such imperfections could lead to chaos.”

One scenario that Morris proposes is that advanced AI models could develop their own language that is indecipherable to humans. Such a language might be created simply to make communication between two bots more efficient, but the effect would be to eliminate human oversight entirely. Some AI agents have already demonstrated the ability to hold a conversation that is unintelligible to humans. Last month, AI researchers from Meta developed two AI chatbots that can communicate with each other using a new sound-based protocol called Gibberlink Mode.

The Dangers of AGI

The existential threat that AGI might pose has led some industry figures to warn that its advent will be the most dangerous thing for humanity since the creation of the atomic bomb. A recent paper co-authored by former Google CEO Eric Schmidt, titled “Superintelligence Strategy”, lays out the possibility of “mutual assured AI malfunction” – a concept modelled on the same logic as mutually assured destruction with nuclear weapons.

Unlike Western societies, which often debate the ethics of new technologies before embracing them, China has historically prioritised pragmatic implementation first, with regulation following innovation. The emergence of Manus as an autonomous AI agent exemplifies this ‘tech-positive’ mindset. The hype surrounding Manus is being compared to that surrounding the ChatGPT rival DeepSeek, which was widely described as AI’s “Sputnik moment” when it launched in January this year.

The Future of AI

The launch of Manus on 6 March has sparked another surge of interest, with online searches for “AI agent” hitting an all-time high this week. The popularity of the term also reflects a shift in interest from passive AI assistants like ChatGPT and Google’s Gemini towards active AI agents that can carry out complex tasks autonomously.

“The emergence of Manus AI underscores the rapid acceleration of autonomous AI agents as part of the growing global race that will shape the future of artificial intelligence,” Alon Yamin, co-founder and CEO of the AI detection platform Copyleaks, told The Independent.

How far away AGI may be depends on who you ask. OpenAI boss Sam Altman said last month that it is “coming into view”, while Anthropic CEO Dario Amodei predicts it will be here as early as next year. The design of Manus – not a single AI entity, but multiple AI models working together – means it does not meet either Altman’s or Amodei’s definition of AGI.

It will take more than just a few days of beta testing to find out how close Manus really is to AGI, but just like ChatGPT with the Turing Test, it is already reshaping the debate over what is considered human-level artificial intelligence.