OpenAI unveils new $200 monthly subscription | FTM

OpenAI has introduced a new, high-priced subscription plan for ChatGPT, its AI-powered chatbot. The key question is whether this expensive package meets users' needs. The launch was confirmed through information leaked from OpenAI. The plan, called ChatGPT Pro, costs $200 per month and gives subscribers unlimited access to all OpenAI models, including the full version of its o1 “reasoning” model.

Jason Wei, a member of OpenAI's technical staff, announced the plan during a livestreamed press conference. "We believe that the target audience for ChatGPT Pro will be the power users of ChatGPT. These are individuals who are already pushing the models to their limits in tasks such as math, programming, and writing," he said.

New Features and Capabilities

OpenAI's latest model, o1, is built to reason through a problem before it answers. According to the company, o1 analyzes and interprets data more efficiently than earlier models, and by reasoning, planning, and working through a sequence of steps, it aims to give accurate and timely responses to user queries.

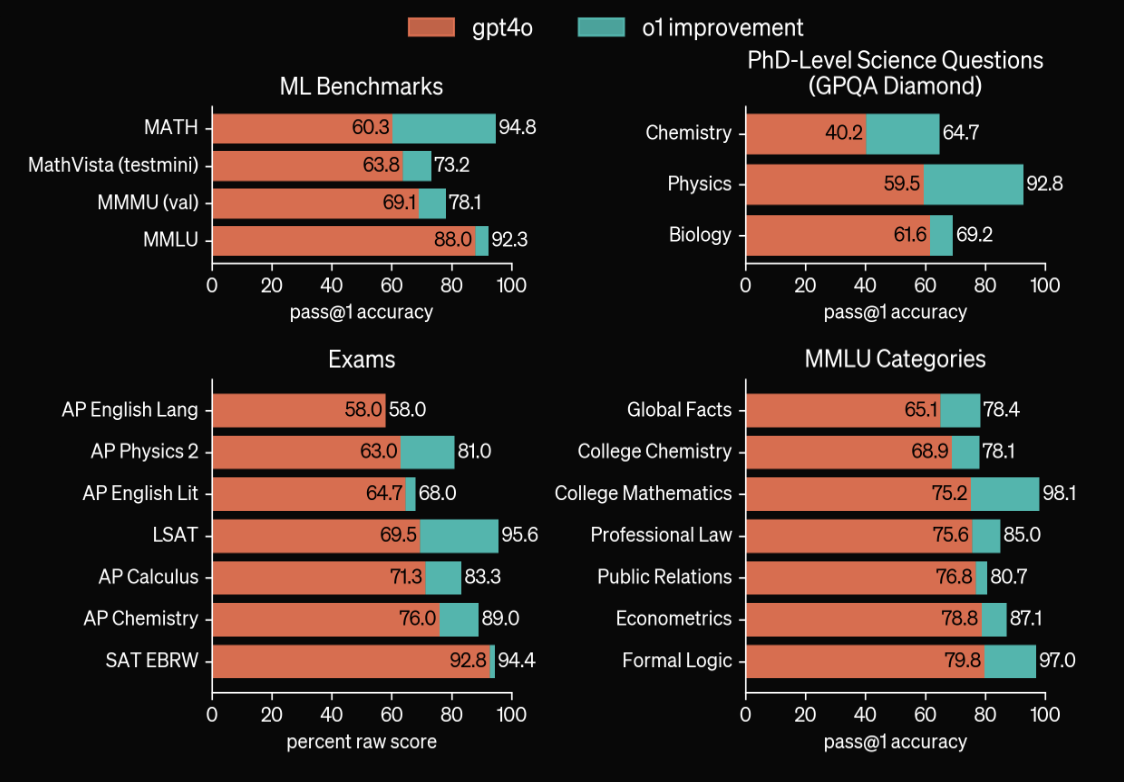

OpenAI released a preview of o1 in September, which showed improved performance and functionality compared with previous models. OpenAI says the full model is "more concise in its thinking," which results in faster response times.

The full o1 model can also reason about uploaded images, a capability earlier versions lacked. According to OpenAI's testing, o1 makes 34% fewer "major errors" on difficult real-world questions than previous versions, with results reported across multiple benchmarks, including MLE-bench.
