Comparing scientific abstracts generated by ChatGPT to real abstracts

Large language models like ChatGPT have been shown to produce increasingly realistic text, but their accuracy and integrity in scientific writing remain unknown. To gauge their efficacy, abstracts from five high-impact medical journals were selected and used as the basis for new abstracts generated with ChatGPT. Most of the generated abstracts were flagged by an AI output detector with high ‘fake’ scores, meaning the detector judged them more likely to be machine-generated. In contrast, the original abstracts had low ‘fake’ scores.

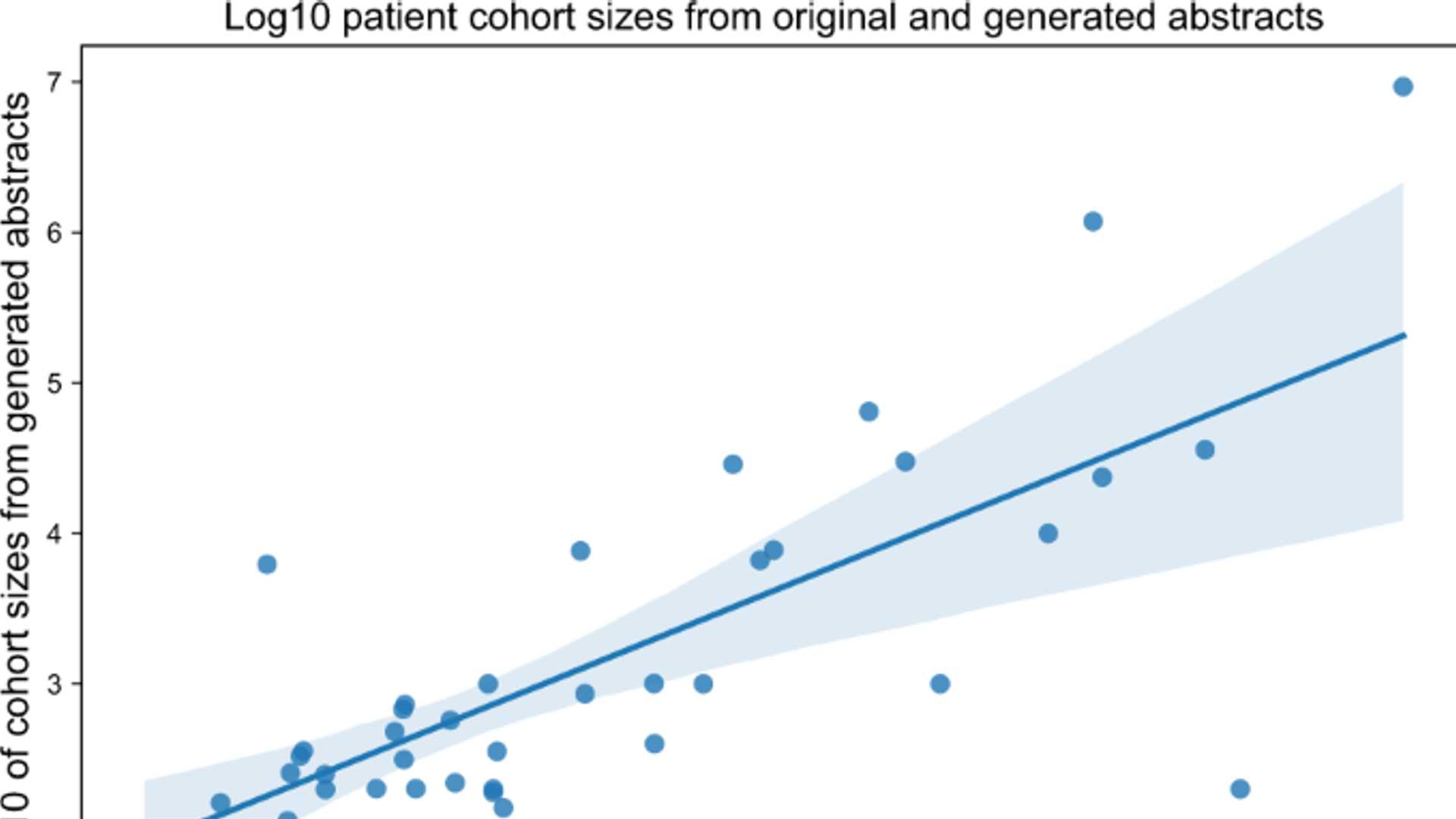

The generated abstracts also scored lower than the original abstracts when run through a plagiarism detector website and iThenticate, indicating less matching text. Blinded human reviewers were asked to differentiate between original and generated abstracts. They correctly identified 68% of generated abstracts as being generated by ChatGPT but incorrectly identified 14% of original abstracts as being generated. They noted that the abstracts they suspected of being generated were vaguer and more formulaic than the original abstracts.
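The two reviewer figures above can be restated as standard classification rates. The short sketch below does that arithmetic; it assumes "flagged as generated" is treated as the positive class, and the function name `reviewer_rates` is purely illustrative rather than anything from the study.

```python
def reviewer_rates(true_positive_rate: float, false_positive_rate: float) -> dict:
    """Derive complementary rates from the two figures reported for the reviewers.

    Assumption: 'positive' means 'flagged as generated by ChatGPT'.
    """
    return {
        # Generated abstracts correctly flagged as generated
        "sensitivity": true_positive_rate,
        # Generated abstracts mistaken for originals
        "miss_rate": 1.0 - true_positive_rate,
        # Original abstracts wrongly flagged as generated
        "false_positive_rate": false_positive_rate,
        # Original abstracts correctly judged to be original
        "specificity": 1.0 - false_positive_rate,
    }


# Figures reported in the text: 68% and 14%
rates = reviewer_rates(0.68, 0.14)
for name, value in rates.items():
    print(f"{name}: {value:.0%}")
```

Read this way, the reviewers missed roughly 32% of the generated abstracts and correctly recognized about 86% of the originals.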

It is evident that ChatGPT can produce believable scientific abstracts, although the data in them are entirely generated. Depending on publisher-specific guidelines, AI output detectors may serve as an editorial tool to help maintain scientific standards. However, the ethical and acceptable use of large language models to assist in scientific writing is still being debated, and different journals and conferences may adopt varying policies.

What is ChatGPT?

ChatGPT is a free tool released by OpenAI that uses artificial intelligence models to generate content. It is built on Generative Pre-trained Transformer-3 (GPT-3), one of the largest models of its kind, with 175 billion parameters.

How are large language models used in scientific writing?

Large language models (LLMs) like ChatGPT are complex neural network-based transformer models that generate content in various tones and styles. These models are trained on vast amounts of data to predict the best possible next text element, resulting in content that reads naturally. AI has numerous applications in medical technologies, and the writing of biomedical research is no exception: products such as the SciNote Manuscript Writer and Writefull help with scientific writing. With the release of ChatGPT, this powerful LLM technology is now available to all users for free, and millions of people are engaging with it.
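The idea of producing the "best possible next text element" can be illustrated with a deliberately tiny stand-in. The sketch below is not a transformer and is not how ChatGPT works internally; it is a simple bigram counter, chosen only to show what "pick the most likely next word given what came before" means. The corpus and the function names `train_bigram` and `most_likely_next` are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(text: str) -> dict:
    """Count, for every word, which words follow it in the training text."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def most_likely_next(counts: dict, word: str):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical toy corpus, invented for this example
corpus = (
    "the study found that the abstracts were vague "
    "and the abstracts were formulaic"
)
model = train_bigram(corpus)
print(most_likely_next(model, "abstracts"))  # prints "were"
```

A real LLM replaces the word counts with a neural network that scores every possible next token given the whole preceding context, but the generation loop follows the same pattern: predict, append, repeat.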

How accurate are ChatGPT-generated abstracts?

The study found that ChatGPT-generated abstracts had high ‘fake’ scores when run through an AI output detector and scored lower than the original abstracts when run through plagiarism detectors. Blinded human reviewers correctly identified 68% of the generated abstracts but mistook 14% of the original abstracts for generated ones. They noted that the abstracts they suspected of being generated were vaguer and more formulaic than the original abstracts.

Conclusion

The boundaries of ethical and acceptable use of large language models to assist scientific writing are still being discussed, and different journals and conferences may adopt varying policies. As the study shows, ChatGPT can produce believable scientific abstracts, but the data in them are entirely generated. AI output detectors may therefore serve as editorial tools to help maintain scientific standards, depending on publisher-specific guidelines.