Azure Functions at Build 2024 – addressing customer feedback with...

Azure Functions is Azure’s primary serverless service, used in production by hundreds of thousands of customers who run trillions of executions on it every month. It was first released in early 2016, and since then we have learnt a lot from our customers about what works and where they would like to see more. Taking all this feedback into consideration, the Azure Functions team has worked hard to improve the experience across the stack, from the initial getting-started experience all the way to running at very high scale, while also adding features that help customers build AI apps. Please see this link for a list of all the capabilities we released at this year’s Build conference. Taken together, this is one of the most significant sets of releases in Functions history.

New SKU of Functions - Flex Consumption

We are releasing a new SKU of Functions, Flex Consumption. This SKU addresses much of the feedback we have received over the years on the Functions Consumption plans, including faster scale, more instance sizes, VNET support, higher instance limits and much more. We have looked at each part of the stack and made improvements at every level. The sections below describe several of the new capabilities.

Introducing Legion

To enable Flex Consumption, we created a brand-new, purpose-built internal backend called Legion. To host customer code, Legion relies on nested virtualization on Azure Virtual Machine Scale Sets (VMSS). This gives us Hyper-V isolation, which is a prerequisite for hostile multi-tenant workloads. Legion was built from the outset to support scaling to thousands of instances with VNET injection. Another distinctive achievement of Legion is its efficient use of subnet IP addresses through kernel-level routing.

Pool Groups for Cold Start

Functions has a strict cold start goal for every supported language. To meet this goal across all languages and versions, and to support updating the Functions images for all of these variants, we created a construct called Pool Groups, which lets Functions specify all the parameters of a pool as well as its networking and upgrade policies. All this work gave us a solid, scalable and fast infrastructure on which to build Flex Consumption.

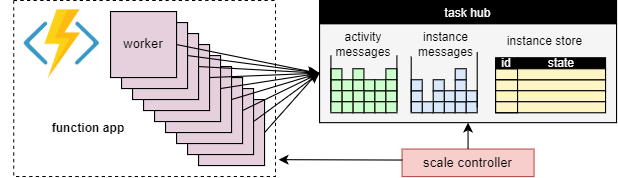
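To make the Pool Groups idea more concrete, here is a minimal Python sketch of a pool group as a configuration record keyed by language and version. The field names (`pool_size`, `subnet`, `upgrade_policy`) and the `find_pool_group` helper are hypothetical illustrations of "pool parameters plus networking and upgrade policies", not the internal Legion schema.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class PoolGroup:
    """Hypothetical record describing one pool of hosts for a language/version."""
    language: str
    version: str
    pool_size: int            # hypothetical: number of instances kept in the pool
    subnet: Optional[str]     # hypothetical networking policy; None = no VNET injection
    upgrade_policy: str       # hypothetical, e.g. "rolling"


def find_pool_group(groups: List[PoolGroup], language: str, version: str) -> PoolGroup:
    """Return the pool group serving this language/version pair."""
    for group in groups:
        if group.language == language and group.version == version:
            return group
    raise LookupError(f"no pool group for {language} {version}")


groups = [
    PoolGroup("python", "3.11", pool_size=50, subnet=None, upgrade_policy="rolling"),
    PoolGroup("node", "20", pool_size=80, subnet="10.0.1.0/24", upgrade_policy="rolling"),
]
print(find_pool_group(groups, "node", "20").pool_size)  # 80
```

Keeping each language/version variant in its own record is what lets pool sizing, networking and image upgrades be managed per variant.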

Networking Features and Scale Controller

Flex Consumption also introduces networking features that restrict access to the function app and allow it to trigger on event sources that are network restricted. Because these event sources are network restricted, the scale controller (the multi-tenant scaling component that monitors the rate of events to decide whether to scale out or in) cannot access them. In the Elastic Premium plan, which scales down to a minimum of one instance, we solved this by giving that instance access to the network-restricted event source and having it communicate scale decisions to the scale controller. In the Flex Consumption plan, however, we wanted to scale down to zero instances. To solve this, we implemented a small scaling component called “Trigger Monitor” that is injected into the customer’s VNET. This component can access the network-restricted event source, and the scale controller now communicates with it to get scaling decisions.

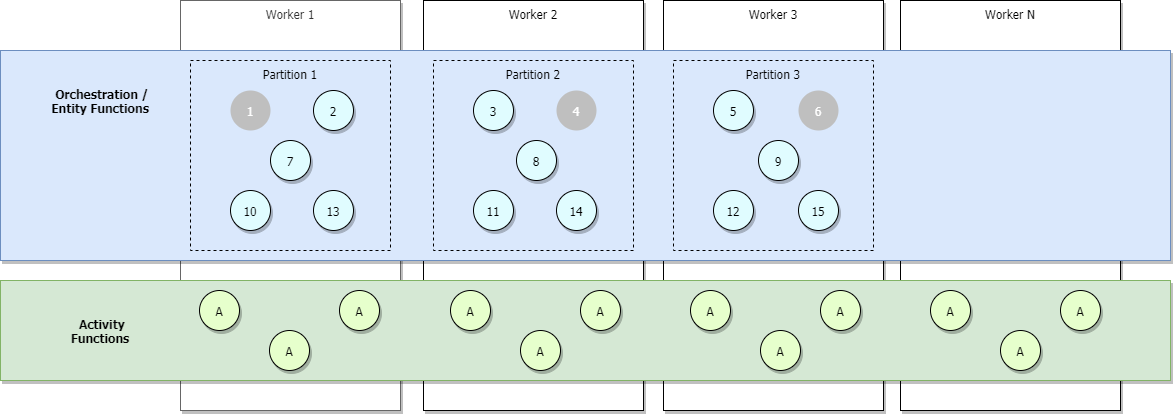
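The Trigger Monitor pattern can be sketched as a hypothetical Python model; this is not the actual implementation. `TriggerMonitor` stands in for the component inside the customer’s VNET that can read the restricted event source, while `ScaleController` never touches the source and only sees the vote it gets back. The events-per-instance rule and the `max_instances` cap are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ScaleVote:
    desired_instances: int


class TriggerMonitor:
    """Hypothetical component running inside the customer's VNET."""

    def __init__(self, read_backlog: Callable[[], int], events_per_instance: int):
        self._read_backlog = read_backlog  # only reachable from inside the VNET
        self._events_per_instance = events_per_instance

    def get_scale_vote(self) -> ScaleVote:
        backlog = self._read_backlog()
        # Ceiling division: an empty backlog yields zero instances (scale to zero).
        desired = -(-backlog // self._events_per_instance)
        return ScaleVote(desired_instances=desired)


class ScaleController:
    """Hypothetical multi-tenant scale controller outside the VNET."""

    def __init__(self, monitor: TriggerMonitor, max_instances: int):
        self._monitor = monitor
        self._max_instances = max_instances

    def decide(self) -> int:
        # Ask the in-VNET monitor instead of reading the event source directly.
        vote = self._monitor.get_scale_vote()
        return min(vote.desired_instances, self._max_instances)


backlog = [0]  # simulated network-restricted event source
controller = ScaleController(
    TriggerMonitor(lambda: backlog[0], events_per_instance=100), max_instances=10
)
print(controller.decide())  # 0: no events, so the app can scale to zero
backlog[0] = 250
print(controller.decide())  # 3
backlog[0] = 5000
print(controller.decide())  # 10: capped at max_instances
```

Because the controller depends only on the vote, no instance of the app has to stay running just to observe the event source.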

Scaling and Concurrency

When scaling HTTP-based workloads on function apps, our previous implementation used an internal heuristic to decide when to scale out. Front End servers pinged the workers currently running customer workloads and decided whether to scale based on the latency of the responses. This implementation used SQL Azure to track workers and their assignments. In Flex Consumption we have rewritten this logic so that scaling is based on user-configured concurrency. User-configured concurrency gives customers the flexibility to choose, based on their language and workload, the concurrency they want per instance.
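As a rough illustration of concurrency-based scaling, the sketch below computes how many instances are needed so that no instance exceeds its configured concurrency. It is a simplification under stated assumptions: the function name and parameters are hypothetical, and it ignores anything else a production scaler might consider, such as limits on how quickly instances are added.

```python
def desired_http_instances(concurrent_requests: int,
                           per_instance_concurrency: int,
                           max_instances: int) -> int:
    """Simplified concurrency-based scale-out for HTTP apps (illustrative only)."""
    if per_instance_concurrency <= 0:
        raise ValueError("per_instance_concurrency must be positive")
    # Ceiling division: enough instances that none exceeds its configured
    # concurrency; zero in-flight requests means zero instances.
    needed = -(-concurrent_requests // per_instance_concurrency)
    return min(needed, max_instances)


print(desired_http_instances(0, 16, 100))     # 0
print(desired_http_instances(40, 16, 100))    # 3
print(desired_http_instances(40, 1, 100))     # 40
print(desired_http_instances(5000, 16, 100))  # 100
```

Lowering the per-instance concurrency, for example for a CPU-heavy workload, makes the same traffic fan out over more instances.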

Target Based Scaling for Non-Http Apps

As with HTTP apps, we have also enabled non-HTTP apps to scale based on concurrency. We refer to this as Target Based Scaling. From an implementation perspective, each extension now implements its own scaling logic, and the scale controller hosts these extensions. This unifies the scaling logic in one place and bases all scaling on concurrency.
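The following Python sketch illustrates the shape of Target Based Scaling with extension-provided scalers. The `TargetScaler` protocol and `QueueScaler` class are hypothetical; the per-trigger computation follows the general idea of dividing the event source length by a target number of executions per instance, and combining triggers by taking the largest request is an assumption of this sketch.

```python
from typing import Dict, Protocol


class TargetScaler(Protocol):
    """Hypothetical interface an extension implements to expose its scaling logic."""

    def event_source_length(self) -> int: ...

    def target_executions_per_instance(self) -> int: ...


class QueueScaler:
    """Hypothetical scaler that a queue-trigger extension might ship."""

    def __init__(self, queue_length: int, target_per_instance: int):
        self._queue_length = queue_length
        self._target_per_instance = target_per_instance

    def event_source_length(self) -> int:
        return self._queue_length

    def target_executions_per_instance(self) -> int:
        return self._target_per_instance


def target_instances(scaler: TargetScaler) -> int:
    # ceil(event source length / target executions per instance)
    return -(-scaler.event_source_length() // scaler.target_executions_per_instance())


def scale_app(scalers: Dict[str, TargetScaler], max_instances: int) -> int:
    """Host every trigger's scaler in one place; size the app for the busiest trigger."""
    wanted = max((target_instances(s) for s in scalers.values()), default=0)
    return min(wanted, max_instances)


scalers = {
    "orders": QueueScaler(queue_length=1000, target_per_instance=16),
    "audit": QueueScaler(queue_length=30, target_per_instance=16),
}
print(scale_app(scalers, max_instances=100))  # 63
```

The host only needs the two numbers each extension reports, which is what allows every trigger type to share one concurrency-based decision path.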

Control Plane and Performance Optimizer

One more change we are making, based on feedback from our customers, is to move various configuration properties from AppSettings to the Control Plane. For Public Preview we are doing this for Deployment, Scaling and Language. This is an example configuration which shows the new Control Plane properties. By GA we will move other properties as well.

Customers have always asked us how to configure their function apps for optimum throughput. Until now, our guidance has been to run performance tests on their own. Now they have another option: native integration with Azure Load Testing. A new performance optimizer helps you decide on the right configuration for your app by helping you create and run tests across different memory and HTTP concurrency configurations.
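The idea behind the performance optimizer, running tests across several memory and HTTP concurrency settings and comparing the results, can be sketched in Python. This is a hypothetical illustration, not the Azure Load Testing API: `run_load_test` is a placeholder callback you would back with real load tests, and picking the highest throughput with ties going to the smaller configuration is an assumed selection rule.

```python
from itertools import product
from typing import Callable, Iterable, Optional, Tuple

# (instance memory in MB, HTTP concurrency per instance)
Config = Tuple[int, int]


def pick_best_config(memory_sizes: Iterable[int],
                     concurrencies: Iterable[int],
                     run_load_test: Callable[[int, int], float]) -> Config:
    """Run one load test per memory/concurrency pair and keep the highest throughput.

    Configurations are tried from smallest to largest, and the strict '>' keeps
    the earlier one on a tie, so ties favor less memory, then lower concurrency.
    """
    best: Optional[Config] = None
    best_rps = float("-inf")
    for memory_mb, concurrency in product(sorted(memory_sizes), sorted(concurrencies)):
        rps = run_load_test(memory_mb, concurrency)
        if rps > best_rps:
            best, best_rps = (memory_mb, concurrency), rps
    if best is None:
        raise ValueError("no configurations to test")
    return best


# Made-up throughput model standing in for real load-test results.
def fake_load_test(memory_mb: int, concurrency: int) -> float:
    return min(concurrency * 50, memory_mb / 4)


print(pick_best_config([2048, 4096], [4, 16, 64], fake_load_test))  # (4096, 64)
```

In practice the selection rule might also weigh cost or latency; the sweep structure stays the same.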

Functions on Azure Container Apps

At Build we are also announcing the GA of Functions on Azure Container Apps. This new SKU lets customers run apps built with the Azure Functions programming model and event-driven triggers alongside other microservices or web applications in the same environment, so they can use common networking resources and observability for all of their applications. It also lets Functions customers use frameworks such as Dapr and compute options such as GPUs, which are available only in Container Apps environments. We had to keep this SKU consistent with the other Functions SKUs and plans, even though it runs and scales on a different platform (Container Apps).

Enhanced Developer Productivity

The Azure Functions team values developer productivity: our VS Code integration and Core Tools are top-notch and one of the main advantages of our experience over similar products in this category. Even so, we are always striving to improve it. We are launching a new getting-started experience for Azure Functions using VS Code for the Web. It lets developers write, debug, test and deploy their function code directly from the browser in VS Code for the Web, which is connected to container-based compute. This is exactly the same experience a developer would have locally: the container comes ready with all the required dependencies and supports the rich features offered by VS Code, including extensions. The experience can also be used for function apps that already have code deployed to them.

To deliver this functionality, we created an extension that launches VS Code for the Web.