Gemini 1.5 Pro with a 2 million token context window is now available to all developers

On June 28, 2024, Google made Gemini 1.5 Pro, with its 2 million token context window, available to all developers. On the same day, Google also released Gemma 2, a large language model offered in two sizes: 9 billion (9B) and 27 billion (27B) parameters.

Because a larger context window also drives up input costs, Google has introduced context caching in the Gemini API, which lowers costs when the same input tokens are reused across multiple prompts.

Efficient Performance with Gemma 2

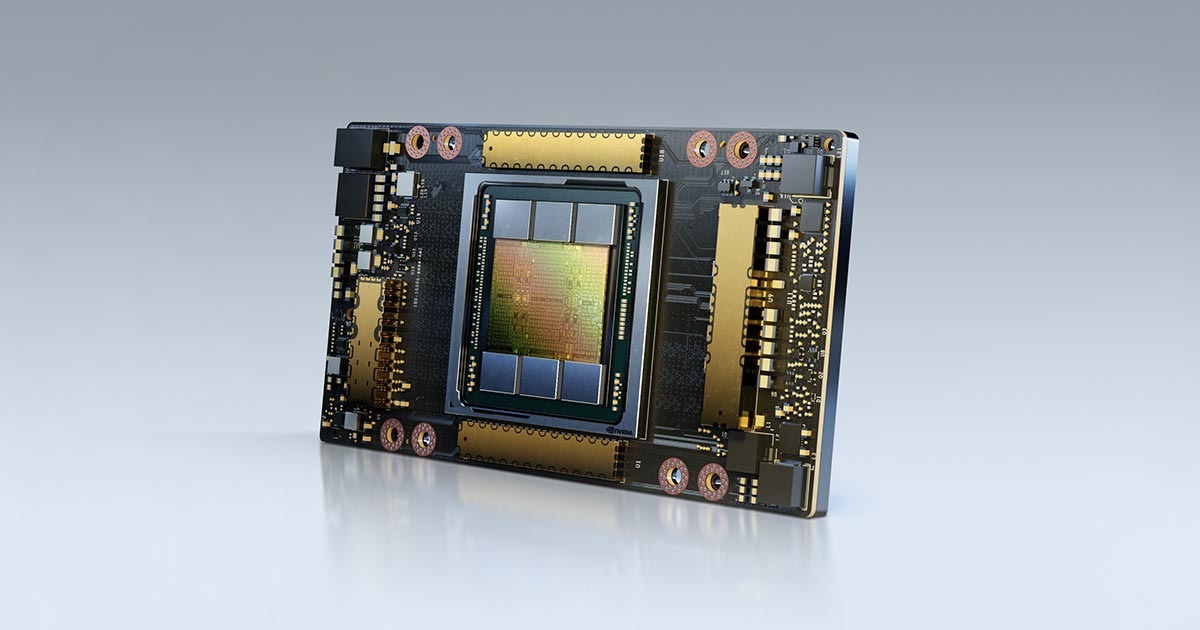

The 27B Gemma 2 model is designed to run inference efficiently on a single Google Cloud TPU host, a single NVIDIA A100 80GB Tensor Core GPU, or a single NVIDIA H100 Tensor Core GPU, which significantly reduces deployment costs while maintaining high performance. According to Google, this makes the model even more accessible, and 'it will be possible to deploy AI to fit any budget.'
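As a rough sanity check of the single-GPU claim, the sketch below estimates how much memory the model weights alone occupy. It assumes 16-bit (bfloat16) weights at 2 bytes per parameter and uses the nominal 9B and 27B counts from the model names; it ignores the KV cache, activations, and runtime overhead, and the helper names are hypothetical, not part of any Google or NVIDIA tool.

```python
# Rough, weights-only memory estimate (hypothetical helpers).
# Assumption: 16-bit (bfloat16) weights, i.e. 2 bytes per parameter.
# KV cache, activations and framework overhead are ignored, so real usage is higher.
BYTES_PER_PARAM_BF16 = 2


def weight_memory_gb(num_params: float, bytes_per_param: int = BYTES_PER_PARAM_BF16) -> float:
    """Return the memory needed to hold the model weights, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


def fits_on_gpu(num_params: float, gpu_memory_gb: float) -> bool:
    """True if the 16-bit weights alone fit within the given GPU memory."""
    return weight_memory_gb(num_params) <= gpu_memory_gb


if __name__ == "__main__":
    # Nominal parameter counts taken from the model names (9B and 27B).
    for name, params in [("Gemma 2 9B", 9e9), ("Gemma 2 27B", 27e9)]:
        need = weight_memory_gb(params)
        print(f"{name}: ~{need:.0f} GB of weights; fits in 80 GB: {fits_on_gpu(params, 80)}")
```

Under these assumptions the 27B weights take roughly 54 GB, leaving some headroom on an 80 GB card for the cache and activations. This is a back-of-the-envelope estimate, not a measured figure from Google.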

Gemma 2 is also available in Google AI Studio. Google said, 'Try Gemma 2 at full precision in Google AI Studio, or on your home computer with an NVIDIA RTX or GeForce RTX via Hugging Face Transformers.'

Gemma 2 is released under a license that permits commercial use.
