InfoQ AI, ML and Data Engineering Trends Report - September 2024




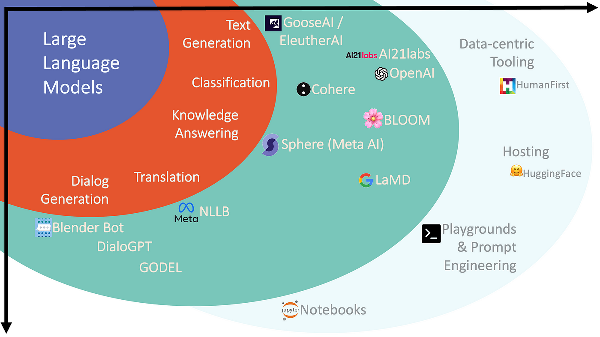

The InfoQ Trends Reports offer InfoQ readers a comprehensive overview of emerging trends and technologies in the areas of AI, ML, and Data Engineering. This report summarizes the InfoQ editorial team’s podcast discussion with external guests about the trends in AI and ML and what to look out for in the next 12 months. The accompanying podcast discusses these trends, along with the report and trends graph, in more depth.

AI and ML Trends

AI technologies have seen significant innovations since the InfoQ team discussed the trends report last year.

Changes Since Last Year

Here are some highlights of what has changed since last year’s report:

Let’s start with the new topics added to the Innovators category. Retrieval Augmented Generation (RAG) techniques will become crucial for corporations that want to use LLMs with their own data without sending that data to cloud-based LLM providers. RAG will also be useful for applying LLMs to suitable use cases at scale.
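To make the retrieve-then-generate idea behind RAG concrete, here is a minimal Python sketch. It is not a production design: the document set is hypothetical, and a simple bag-of-words cosine similarity stands in for the embedding model and vector store that RAG systems typically use. The sketch stops at building the augmented prompt; sending that prompt to an LLM (which could be a locally hosted model, so the documents never leave the company) is left out.

```python
import math
import re
from collections import Counter

# Hypothetical in-house documents; in practice these would be private corporate data.
DOCUMENTS = [
    "Refunds are processed within 14 days of receiving the returned item.",
    "Our office in Berlin is open Monday to Friday from 9am to 5pm.",
    "Premium support customers can reach an engineer 24/7 by phone.",
]


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-frequency vectors (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the query (the 'retrieval' step)."""
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: list[str], k: int = 1) -> str:
    """Augment the user's question with retrieved context before it goes to an LLM."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}\n"
    )


if __name__ == "__main__":
    print(build_prompt("How many days does a refund take?", DOCUMENTS))
```

Running the script prints a prompt whose context contains the refund policy, the document most similar to the question. Replacing `retrieve` with an embedding-based search would keep the same overall shape.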

Another new entrant in the Innovators category is AI-integrated hardware, which includes AI-enabled GPU infrastructure and AI-powered PCs, mobile phones, and edge computing devices. We expect this area to see significant development in the next 12 months.

LLM-based solutions face challenges in infrastructure setup and management costs. As a result, a newer class of language models, Small Language Models (SLMs), will be explored and adopted. SLMs are also well suited to edge computing use cases that run on small devices. Companies like Microsoft have released Phi-3 and other SLMs that the community can start trying out immediately to compare the costs and benefits of using an SLM versus an LLM.

With the tremendous changes in Generative AI technologies and the recent releases of new LLM versions from tech companies like OpenAI (GPT-4o), Meta (Llama 3), and Google (Gemma), we think the "Generative AI / Large Language Models (LLMs)" topic is now ready to be promoted from the Innovators category to the Early Adopters category.

Another topic moving into this category is Synthetic Data Generation, as more and more companies are starting to use it heavily in model training.

AI coding assistants will also see more adoption, especially in corporate application development settings, so this topic is being promoted to the Early Majority category.

Image recognition techniques are also used in several industrial organizations for use cases like defect inspection and detection to help with preventive maintenance and minimize or eliminate machine failures.

ChatGPT was rolled out in November 2022. Since then, Generative AI and LLM technologies have been advancing at a rapid pace, and they show no sign of slowing down any time soon.

All the major players in the technology space have been very busy releasing their AI products.

Earlier this year, at the Google I/O conference, Google announced several new developments, including updates to Google Gemini and "Generative AI in Search," which could significantly change search as we know it.

Around the same time, OpenAI released GPT-4o, the "omni" model that can work with audio, vision, and text in real time.

Meta released Llama 3 around the same time, followed more recently by Llama 3.1, whose largest model has 405 billion parameters.

Open-source tools like Ollama, which make it easy to run open LLMs locally, are getting a lot of attention.
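As an illustration of running a model locally, the sketch below calls the REST endpoint that a local Ollama server exposes (`/api/generate`, on port 11434 by default). It assumes Ollama is installed and running and that a model named `llama3` has already been pulled; the model name and prompt are placeholders. Only the Python standard library is used.

```python
import json
import urllib.request

# Default address of a locally running Ollama server (assumes default configuration).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "llama3") -> urllib.request.Request:
    """Build a POST request for Ollama's generate endpoint.

    "stream": False asks for one JSON object instead of a stream of partial chunks.
    """
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "llama3") -> str:
    """Send the prompt to the local server and return the generated text.

    Requires a running Ollama server with the model already pulled (e.g. `ollama pull llama3`).
    """
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]
```

With the server running, `generate("Explain RAG in one sentence.")` returns the model's completion as a string. Because the request goes to `localhost`, the prompt and any context added to it stay on the machine.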

Language Model Evolution

Another major trend in language model evolution is the rise of small language models. These specialized models offer many of the same capabilities as LLMs but are smaller, are trained on less data, and require less memory. Examples of small language models include Phi-3, TinyLlama, and DBRX Instruct.
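A back-of-the-envelope calculation shows why the memory difference matters for edge devices. The sketch below estimates only the memory needed to hold model weights (parameter count times bytes per parameter) and ignores activations, the KV cache, and runtime overhead, so real requirements are higher. The parameter counts are for Phi-3-mini (3.8 billion) and the 405-billion-parameter Llama 3.1 model; the precision table is a simplifying assumption.

```python
# Rough weight-only memory estimate: parameters x bytes per parameter.
# Ignores activations, KV cache, and runtime overhead, so real requirements are higher.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}

MODELS = {
    "Phi-3-mini (3.8B)": 3.8e9,
    "Llama 3.1 405B": 405e9,
}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Approximate memory in GB (1 GB = 1e9 bytes) needed just to hold the weights."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for name, params in MODELS.items():
        for precision in ("fp16", "int4"):
            print(f"{name:20s} {precision}: {weight_memory_gb(params, precision):7.1f} GB")
```

At 16-bit precision, Phi-3-mini's weights take roughly 7.6 GB versus about 810 GB for the 405B model; 4-bit quantization brings these down to about 1.9 GB and 202.5 GB. The smaller figure is within reach of a laptop or high-end phone, while the larger one would still typically need multiple data-center GPUs.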

With several LLM options available, how do we compare different language models to choose the best model for different data or workloads in our applications? LLM evaluation is integral to successfully adopting AI technologies in companies. There are LLM comparison sites and public leaderboards like Huggingface's Chatbot Arena, Stanford HELM, Evals fr