OpenAI CEO Sam Altman has suggested that the company still does not fully understand how its large language models (LLMs) work. OpenAI has raised significant funding to develop AI technologies, and its ChatGPT chatbot uses LLMs to generate humanlike conversational dialogue. Speaking at the International Telecommunication Union (ITU) AI for Good Global Summit in Geneva, Switzerland, however, Altman admitted that interpretability remains an unsolved problem for OpenAI.

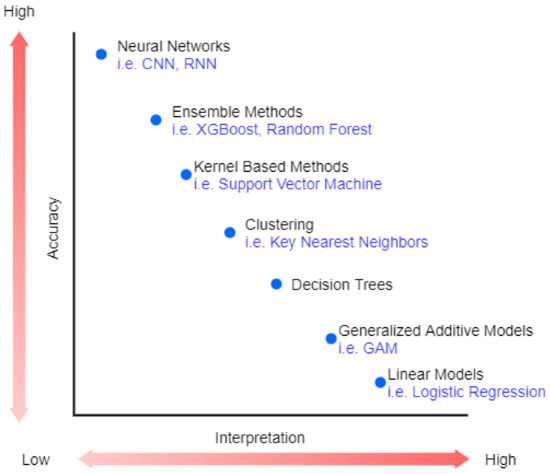

Struggles with Interpretability

Altman said that OpenAI has not yet solved interpretability, meaning the company cannot fully explain how its AI models arrive at their outputs. Despite this, he believes the systems are safe and robust, while acknowledging the need for a deeper understanding of their inner workings. He pointed to the difficulty of following a model's reasoning at a neuron-by-neuron level and compared it to the human mind, which is not understood at that level either but can still be asked to explain its thinking.

While addressing concerns about releasing more advanced models, Altman emphasized the importance of ensuring that such systems are safe and reliable. The inability to fully explain a chatbot's responses raises valid safety and privacy concerns and remains one of the open challenges in the AI field.

Moving Towards Understanding

Altman emphasized the significance of unraveling the complexities of AI models to enhance transparency and verify safety claims. Understanding the mechanisms behind these models can lead to improved trust in AI technologies and help address potential risks associated with their deployment.

Despite the existing limitations, Altman remains optimistic about the future of AI technologies. The integration of ChatGPT into Apple devices signifies a step forward in expanding the reach of AI-powered solutions, paving the way for broader applications across various industries.
