Microsoft MAIA 100 AI Accelerator for Azure - ServeTheHome

A few months ago, Microsoft Azure shared insights on the MAIA AI accelerator in a blog post. The MAIA 100 is a custom AI accelerator designed by Microsoft, with a focus on optimizing performance for running OpenAI models while also reducing costs compared to using NVIDIA GPUs.

Key Specifications

Microsoft's MAIA 100 is built on TSMC CoWoS-S packaging and pairs a TSMC 5nm die with 64GB of HBM2E memory. Because NVIDIA and AMD rely on newer HBM generations for their flagship parts, choosing HBM2E means Microsoft is not competing with them for the same leading-edge memory supply. The accelerator also has a large 500MB L1/L2 cache and 12x 400GbE of network bandwidth. It is rated at a 700W TDP, although in production for inference workloads Microsoft runs each accelerator at 500W.
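To put the networking and power figures in perspective, the arithmetic can be sketched in a few lines of Python. This is only a back-of-the-envelope calculation: it assumes all 12 ports run at raw line rate at the same time and ignores protocol overhead, and the variable and function names are illustrative rather than anything from Microsoft's tooling.

```python
# Figures quoted in the article; everything else is simple arithmetic.
NETWORK_PORTS = 12        # number of 400GbE ports
PORT_SPEED_GBPS = 400     # gigabits per second per port
TDP_WATTS = 700           # rated thermal design power
PRODUCTION_WATTS = 500    # power used in production inference


def aggregate_bandwidth_gbps(ports: int, port_speed_gbps: int) -> int:
    """Raw aggregate line rate in gigabits per second (no overhead modeled)."""
    return ports * port_speed_gbps


def gigabits_to_gigabytes(gbps: float) -> float:
    """Convert gigabits per second to gigabytes per second (8 bits per byte)."""
    return gbps / 8


total_gbps = aggregate_bandwidth_gbps(NETWORK_PORTS, PORT_SPEED_GBPS)
total_gigabytes_per_s = gigabits_to_gigabytes(total_gbps)
power_fraction = PRODUCTION_WATTS / TDP_WATTS

print(f"Aggregate network line rate: {total_gbps} Gb/s "
      f"({total_gbps / 1000:.1f} Tb/s, {total_gigabytes_per_s:.0f} GB/s)")
print(f"Production power as a share of TDP: {power_fraction:.0%}")
```

Under those assumptions the 12 ports add up to 4.8 Tb/s of raw line rate, and the production power setting is roughly 71% of the rated TDP.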

Architecture Overview

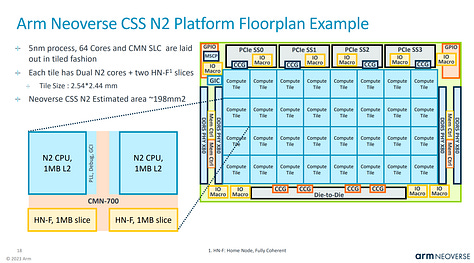

The MAIA 100 SoC houses 16 clusters, each containing four tiles, for 64 tiles in total. It also includes additional features such as image decoders and confidential compute capabilities. Microsoft supports a variety of data types as well, including 9-bit and 6-bit compute.
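The cluster and tile hierarchy described above can be enumerated with a short sketch. The cluster and tile counts come from the article; the `(cluster, tile)` numbering scheme is purely hypothetical and is not Microsoft's actual addressing.

```python
# Counts from the article.
CLUSTERS_PER_SOC = 16
TILES_PER_CLUSTER = 4

# Hypothetical (cluster, tile) identifiers, used here only to count tiles.
tile_ids = [
    (cluster, tile)
    for cluster in range(CLUSTERS_PER_SOC)
    for tile in range(TILES_PER_CLUSTER)
]

total_tiles = len(tile_ids)
print(f"{CLUSTERS_PER_SOC} clusters x {TILES_PER_CLUSTER} tiles = {total_tiles} tiles")
```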

Interconnect and Software

The MAIA 100 accelerator uses an Ethernet-based interconnect with a custom RoCE-like protocol rather than a traditional option like InfiniBand. Microsoft is involved in the Ultra Ethernet Consortium, which fits this Ethernet-centric approach. On the software side, the Maia SDK offers an asynchronous programming model. Developers can program the accelerator through Triton or through the Maia API, and the two options provide different levels of control.

Tools and Capabilities

The Maia SDK includes tools such as Maia-SMI for system management, which parallels tools like nvidia-smi. Notably, the SDK offers an out-of-the-box experience with PyTorch models, which should make it easier to bring Maia into existing workflows.

Microsoft's detailed unveiling of the MAIA 100 accelerator sheds light on its technical prowess and strategic decisions. Despite its lower HBM capacity compared to NVIDIA's offerings, the MAIA 100's power efficiency and unique design present a compelling alternative in the AI accelerator market. As the industry navigates power constraints, Microsoft's focus on cost-effectiveness may prove advantageous in the long run.