AI: Google Gemini AI with Voice ready for its 'close-up'. RTZ #449

...beginning of mainstream multimodal, voice AI ramps.

Multimodal AIs, which I’ve long written about, are ready for their mainstream close-up in this AI Tech Wave. And first out of the gate at mainstream scale is Google Gemini on Android smartphones. With Google’s rollout of its latest AI-powered Pixel smartphones, watches, and earbuds, it looks like unified multimodal AIs powered by users’ personal data may finally be ready for mainstream use. And the star, as I mentioned above, is Google Gemini on Android (soon to come to Apple’s iOS devices as well, of course).

Multimodal voice AIs

Multimodal voice AIs are something I’ve written about for a while now, with OpenAI showing off its voice-driven GPT-4 Omni, Apple giving a glimpse of its AI-revamped Siri, and several tantalizing voice-driven, multimodal AI demos of these highly anthropomorphizing technologies. Not to mention Apple’s vision for ‘Apple Intelligence’, which leverages its unique branding as the ‘Privacy and Trust’ AI custodian of over two billion users’ personal data across a range of Apple hardware, operating systems, and applications/services.

But Google’s presentation early this week was notable less for its latest hardware devices and more for its live demos of Google Gemini Advanced, which can interact via voice while leveraging over two billion users’ data across Google apps and platforms like Gmail, Docs, Drive, Chrome, Android, and YouTube. Not to mention Google’s powerful integrated tech stack, from its custom TPU AI chips to its massive AI data centers, which lets it serve up these multimodal voice AIs at scale.

Google Beats Apple And OpenAI to the Punch

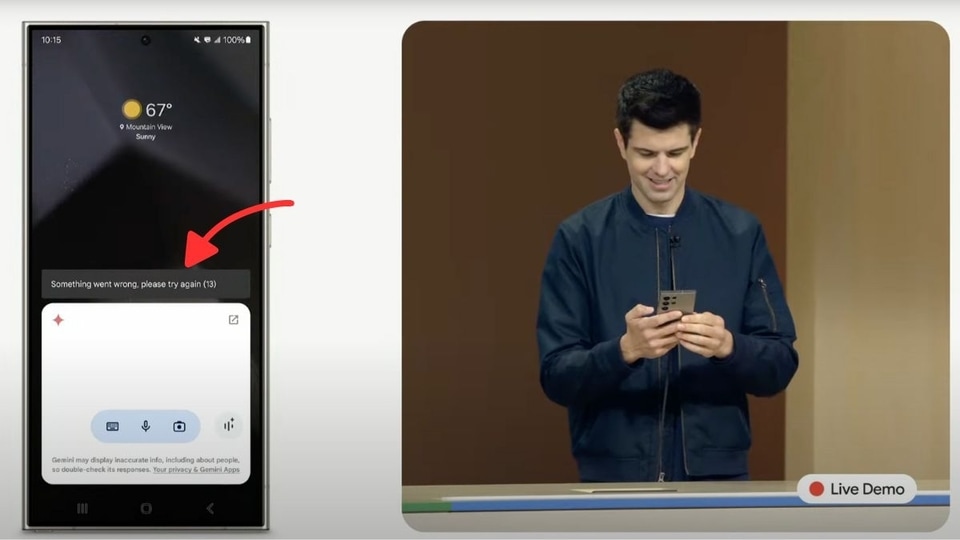

In tech, hype can only carry you so far. That’s one lesson from Google’s new product presentation on Tuesday, when it announced a flurry of device updates with features that competitors like Apple and OpenAI have been talking about (but not shipping) for months.

Google also announced that its Pixel Buds Pro 2 earbuds would allow users to converse with their AI assistants, even when the phone is locked and in their pockets. Google’s voice assistant is able to remember context across hours-long conversations, and Google eventually plans to extend the assistant into video, meaning that users could essentially have video calls with Gemini.

Google's Gemini Live AI Sounds So Human

The whole rollout impressed the WSJ’s Joanna Stern enough for her to write “Google’s Gemini Live AI Sounds So Human, I Almost Forgot It Was a Bot”. As she puts it: “I’m not saying I prefer talking to Google’s Gemini Live over a real human. But I’m not not saying that either.”

Does it help that the chatty new artificial-intelligence bot says I’m a great interviewer with a good sense of humor? Maybe. But it’s more that it actually listens, offers quick answers and doesn’t mind interruptions.

All of the above pieces are worth reading in full, along with watching the video of the launch event, to get a fuller sense of this groundbreaking technology.