Meta launches AI that can check how other models work

Meta’s self-taught evaluator is part of a new round of AI innovations from the company’s research division, following its introduction in an August paper. The technology and social media company has announced the release of several new AI models, including the self-taught evaluator, which can train other AI models without the need for human input. The hope is that this will improve efficiency and scalability for enterprises using large language models (LLMs).

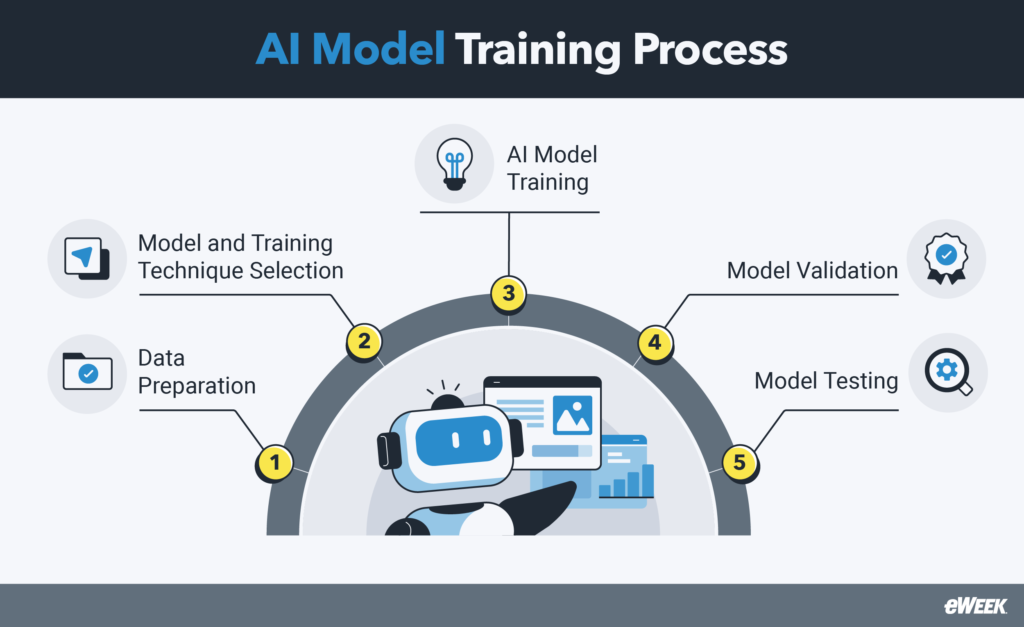

The tool was first introduced in a paper released in August, which stated that the technology uses the same ‘chain of thought’ method seen in recent OpenAI models to generate more reliable responses and judgements. Because human involvement in LLM evaluation is often considered slow and costly, the self-taught evaluator aims to create a training approach that eliminates the need for human-labelled data. The AI is prompted to break down difficult problems into manageable, practical steps, improving the accuracy of responses on a wide range of complex topics, such as science, coding, and maths.


Meta’s research is part of a growing trend in which LLMs themselves are used to automate and improve the training process. Jason Weston, a Meta research scientist, told Reuters: “We hope, as AI becomes more and more super-human, that it will get better and better at checking its work, so that it will actually be better than the average human.” He added: “The idea of being self-taught and able to self-evaluate is basically crucial to the idea of getting to this sort of super-human level of AI.”

In other Meta-related news, earlier this month the EU’s highest court ruled in favour of Max Schrems, a privacy campaigner who claimed that Facebook misused data about his sexual orientation to target him with personalised ads. The court agreed that the company had unlawfully processed Schrems’s personal data for the purpose of targeting him with specific advertisements. Under EU data protection law, sensitive information relating to sexual orientation, race or ethnicity, or health status is subject to strict processing requirements.

